Greetings, everyone!

I’m having trouble loading custom datasets into PyTorch Forecasting. I already posted the question on Stack Overflow, but it seems that I might find the answer here. Here’s the message, pasted for your convenience:

I’m trying to load a custom dataset into PyTorch Forecasting by modifying the example given in this GitHub repository. However, I’m stuck at instantiating the TimeSeriesDataSet. The relevant parts of the code are as follows:

import numpy as np
import pandas as pd

df = pd.read_csv("data.csv")
print(df.shape) # (300, 8)

# Divide the timestamps so that they are incremented by one each row.
df["unix"] = df["unix"].apply(lambda n: int(n / 86400))

# Set "unix" as the index
#df = df.set_index("unix")

# Add *integer* indices.
df["index"] = np.arange(300)
df = df.set_index("index")

# Add group column.
df["group"] = np.repeat(np.arange(30), 10)

from pytorch_forecasting import TimeSeriesDataSet

target = ["foo", "bar", "baz"]

# Create the dataset from the pandas dataframe
dataset = TimeSeriesDataSet(
    df,
    group_ids = ["group"],
    target = target,
    time_idx = "unix",
    min_encoder_length = 50,
    max_encoder_length = 50,
    min_prediction_length = 20,
    max_prediction_length = 20,
    time_varying_unknown_reals = target,
    allow_missing_timesteps = True
)

And the error message plus traceback:

/home/user/.virtualenvs/torch/lib/python3.9/site-packages/pytorch_forecasting/data/timeseries.py:1241: UserWarning: Min encoder length and/or min_prediction_idx and/or min prediction length and/or lags are too large for 30 series/groups which therefore are not present in the dataset index. This means no predictions can be made for those series. First 10 removed groups: [{'__group_id__group': 0}, {'__group_id__group': 1}, {'__group_id__group': 2}, {'__group_id__group': 3}, {'__group_id__group': 4}, {'__group_id__group': 5}, {'__group_id__group': 6}, {'__group_id__group': 7}, {'__group_id__group': 8}, {'__group_id__group': 9}]
  warnings.warn(

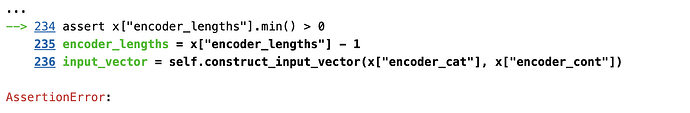

---------------------------------------------------------------------------
AssertionError                            Traceback (most recent call last)
/tmp/ipykernel_822/3402560775.py in <module>
      4
      5 # create the dataset from the pandas dataframe
----> 6 dataset = TimeSeriesDataSet(
      7     df,
      8     group_ids = ["group"],

~/.virtualenvs/torch/lib/python3.9/site-packages/pytorch_forecasting/data/timeseries.py in __init__(self, data, time_idx, target, group_ids, weight, max_encoder_length, min_encoder_length, min_prediction_idx, min_prediction_length, max_prediction_length, static_categoricals, static_reals, time_varying_known_categoricals, time_varying_known_reals, time_varying_unknown_categoricals, time_varying_unknown_reals, variable_groups, constant_fill_strategy, allow_missing_timesteps, lags, add_relative_time_idx, add_target_scales, add_encoder_length, target_normalizer, categorical_encoders, scalers, randomize_length, predict_mode)
    437
    438         # create index
--> 439         self.index = self._construct_index(data, predict_mode=predict_mode)
    440
    441         # convert to torch tensor for high performance data loading later

~/.virtualenvs/torch/lib/python3.9/site-packages/pytorch_forecasting/data/timeseries.py in _construct_index(self, data, predict_mode)
   1247                 UserWarning,
   1248             )
-> 1249         assert (
   1250             len(df_index) > 0
   1251         ), "filters should not remove entries all entries - check encoder/decoder lengths and lags"

AssertionError: filters should not remove entries all entries - check encoder/decoder lengths and lags

I have tried tweaking the initializer arguments without success. The file timeseries.py can be found in the same GitHub repository, at pytorch_forecasting/data/timeseries.py, line 1246. Unfortunately, I cannot include a third link in a single post.
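
In case it helps with diagnosing this, here is a small check of how long each group is compared with the window I'm requesting. It is only a sketch: it uses a synthetic frame in place of data.csv (which isn't shown here), and it assumes that each group must span at least min_encoder_length + min_prediction_length time steps to be kept in the dataset index, which is my reading of the warning rather than something I have confirmed in the library source.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for data.csv (assumption): 300 consecutive daily
# time steps split into 30 groups of 10 rows, as in the code above.
df = pd.DataFrame({
    "unix": np.arange(300),                 # timestamps already divided by 86400
    "group": np.repeat(np.arange(30), 10),
})

min_encoder_length = 50
min_prediction_length = 20
# Assumed minimum number of time steps a group must cover.
required = min_encoder_length + min_prediction_length

# Number of time steps spanned by each group (last step - first step + 1).
span = df.groupby("group")["unix"].agg(lambda s: s.max() - s.min() + 1)

too_short = span[span < required]
print(f"required steps per group: {required}")
print(f"longest group span: {span.max()}")
print(f"groups too short: {len(too_short)} of {len(span)}")
```

With this grouping every group covers only 10 time steps, so if the assumption above holds, all 30 groups would be filtered out, which matches the "30 series/groups" count in the warning.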