I am trying to extract activation vectors from a few selected layers using a forward hook.

Following the usage shown on the forum, I applied this hook function to my resnet50 model (trained with `DataParallel` on multiple GPUs).

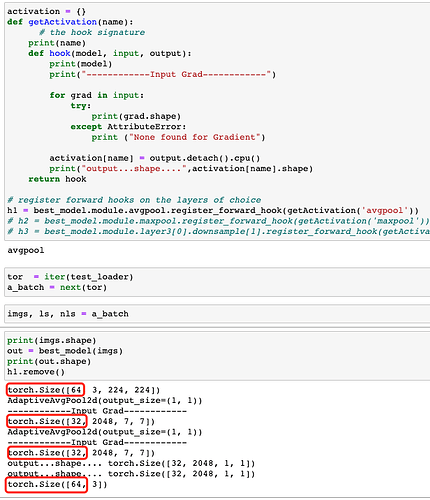

I send batched test images through the network and expect to get batched feature vectors back, but the batch dimension of the captured activations does not match my batch size. I use a batch of 64 images, and the output logits from the network also have a batch dimension of 64, yet the activation tensor has a batch dimension of only 32.
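
For reference, here is a minimal sketch of the kind of setup I mean, in text form. It is not my exact code: the small `nn.Sequential` model is a stand-in for my resnet50, and the names `activations` and `get_activation` are placeholders I chose for illustration. It assumes the common pattern of a forward hook that stores the layer output in a dictionary.

```python
import torch
import torch.nn as nn

# Placeholder storage for captured outputs, keyed by a name I pick per layer.
activations = {}

def get_activation(name):
    # Returns a forward hook that saves the layer output under `name`.
    def hook(module, inputs, output):
        activations[name] = output.detach()
    return hook

# Small stand-in model (my real model is a resnet50).
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 10),
)

# Hook the layer whose output I want as the feature vector.
model[3].register_forward_hook(get_activation("flatten"))

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
net = nn.DataParallel(model).to(device)
net.eval()

images = torch.randn(64, 3, 32, 32, device=device)
with torch.no_grad():
    logits = net(images)

print(logits.shape)                  # batch dimension of the logits
print(activations["flatten"].shape)  # batch dimension of the captured activation
```

On a CPU-only or single-GPU machine both printed shapes should have the same batch dimension. The mismatch I describe shows up on my multi-GPU machine, where the second shape has 32 rows while the first has 64.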

I don’t know what causes this mismatch. Please help me out.