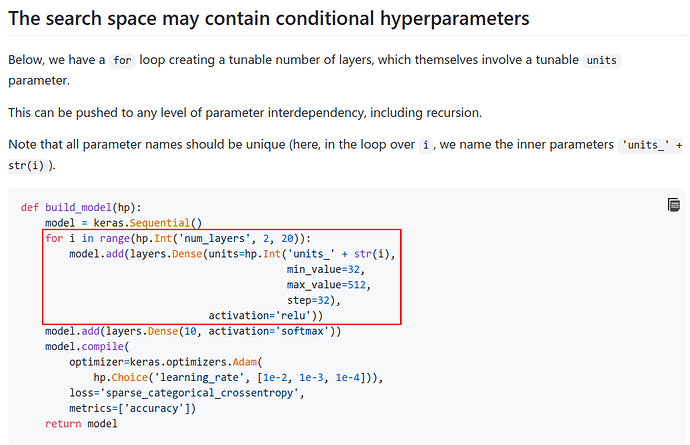

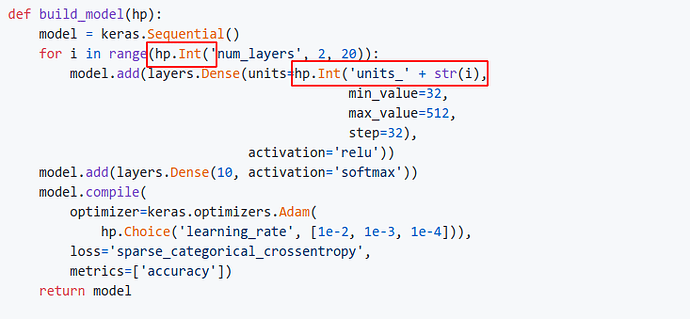

Keras Tuner (kerastuner) provides the feature shown in the screenshots. Is there a hyperparameter tuning library that works with PyTorch and also provides this feature?

The TPE algorithm seems to work better for that, so I’d suggest Optuna. It is a more recent alternative to Hyperopt, the library where TPE first appeared.

After reading Optuna’s documentation, I found that it does indeed have this feature!

Thanks very much!