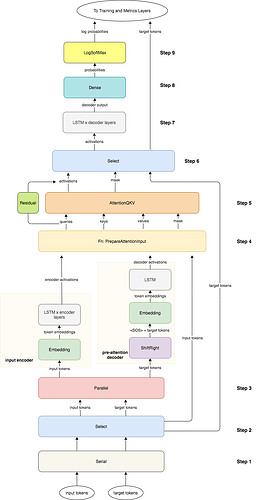

Hello, can you tell me how I can implement this model in PyTorch? I have implemented similar models before, but without attention, and now I want to implement this one in PyTorch. I have several questions and problems, but mostly about how to handle the data flow into both the Encoder and the pre-attention decoder.
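For reference, here is a minimal PyTorch sketch of the data flow in question. It assumes the figure shows the common layout: the source sentence goes into the encoder, the target sentence shifted right by one token goes into the pre-attention decoder, and the attention layer takes its queries from the pre-attention decoder and its keys and values from the encoder. The class name `NMTAttn`, the layer sizes, the use of token id 0 for both padding and the start-of-sequence token, the residual connection around attention, and the single-head `nn.MultiheadAttention` are all assumptions made for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class NMTAttn(nn.Module):
    """Hypothetical encoder / pre-attention decoder / attention / decoder model."""

    def __init__(self, src_vocab, tgt_vocab, d_model=64, pad_id=0):
        super().__init__()
        self.pad_id = pad_id  # assumption: id 0 is padding AND start-of-sequence
        self.src_emb = nn.Embedding(src_vocab, d_model, padding_idx=pad_id)
        self.tgt_emb = nn.Embedding(tgt_vocab, d_model, padding_idx=pad_id)
        self.encoder = nn.LSTM(d_model, d_model, batch_first=True)
        self.pre_attn_decoder = nn.LSTM(d_model, d_model, batch_first=True)
        self.attn = nn.MultiheadAttention(d_model, num_heads=1, batch_first=True)
        self.decoder = nn.LSTM(d_model, d_model, batch_first=True)
        self.out = nn.Linear(d_model, tgt_vocab)

    def forward(self, src, tgt):
        # src: (B, S) source token ids, tgt: (B, T) target token ids
        # Shift targets right by one (teacher forcing): position t sees only tokens < t
        start = torch.full((tgt.size(0), 1), self.pad_id,
                           dtype=tgt.dtype, device=tgt.device)
        tgt_in = torch.cat([start, tgt[:, :-1]], dim=1)

        # Encoder branch: its outputs become the keys and values, shape (B, S, D)
        enc_out, _ = self.encoder(self.src_emb(src))
        # Pre-attention decoder branch: its outputs become the queries, shape (B, T, D)
        dec_q, _ = self.pre_attn_decoder(self.tgt_emb(tgt_in))

        # True marks padded source positions that attention must ignore
        key_padding_mask = src == self.pad_id
        ctx, _ = self.attn(dec_q, enc_out, enc_out, key_padding_mask=key_padding_mask)

        # Residual around attention, then the post-attention decoder and projection
        h, _ = self.decoder(ctx + dec_q)
        return F.log_softmax(self.out(h), dim=-1)  # (B, T, tgt_vocab)


# Usage with random token ids (0 is reserved for padding)
torch.manual_seed(0)
model = NMTAttn(src_vocab=100, tgt_vocab=120)
src = torch.randint(1, 100, (2, 7))
src[1, 5:] = 0  # second source sentence is padded
tgt = torch.randint(1, 120, (2, 5))

logp = model(src, tgt)
print(logp.shape)  # torch.Size([2, 5, 120])

# Loss compares each position's prediction with the unshifted target token
loss = F.nll_loss(logp.reshape(-1, 120), tgt.reshape(-1), ignore_index=0)
```

The point of this layout is that during training both inputs are fully known, so the encoder runs on `src` and the pre-attention decoder runs on the shifted `tgt` independently, and they only meet in the attention layer. At inference time the target side would instead be fed one generated token at a time.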