When I used DataParallel, I got this warning:

```
:\anaconda3\lib\site-packages\torch\cuda\nccl.py:16: UserWarning: PyTorch is not compiled with NCCL support
  warnings.warn('PyTorch is not compiled with NCCL support')
```

It used to work fine, but after I updated torch from 1.5 to 1.7 this problem appeared. Why?
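To see what the installed build actually supports, here is a minimal sketch. Judging by the path in the warning (`torch\cuda\nccl.py`), the message comes from `torch.cuda.nccl.is_available()`, which warns and returns False when the build has no NCCL bindings. The helper `nccl_status` (a hypothetical name) calls that function with an empty tensor list, silences the warning, and reports the PyTorch version, CUDA availability, the visible GPU count and NCCL support. If `torch` cannot be imported it returns placeholder values instead.

```python
import warnings


def nccl_status():
    """Summarize what the installed PyTorch build supports (sketch)."""
    try:
        import torch
        import torch.cuda.nccl as nccl
    except (ImportError, OSError):
        # PyTorch is missing or its native libraries failed to load
        return {"torch": None, "cuda": False, "gpus": 0, "nccl": False}

    cuda = torch.cuda.is_available()
    gpus = torch.cuda.device_count() if cuda else 0
    # is_available() emits "PyTorch is not compiled with NCCL support" and
    # returns False when NCCL is missing; an empty list skips the per-tensor checks.
    with warnings.catch_warnings():
        warnings.simplefilter("ignore")
        has_nccl = bool(nccl.is_available([]))
    return {"torch": torch.__version__, "cuda": cuda, "gpus": gpus, "nccl": has_nccl}


if __name__ == "__main__":
    print(nccl_status())
```

Running it under both 1.5.1 and 1.7.0 shows whether either build reports `nccl: True`; to my knowledge the official Windows wheels are built without NCCL, so `False` would be expected on Win10.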

My code is:

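```
import torch as t
from torch import nn

# Replicate the model across all visible GPUs when more than one is present
if t.cuda.device_count() > 1:
    model = nn.DataParallel(model)

if opt.use_gpu:
    model.cuda()
```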

I found this related thread: "Win10+PyTorch+DataParallel got warning: 'PyTorch is not compiled with NCCL support'"

I want to know why DataParallel works with torch 1.5.1 but not with 1.7.0.

Could someone answer this question for me?

I went back to 1.5.1 and it doesn't work there either:

:\anaconda3\lib\site-packages\torch\cuda\nccl.py:24: UserWarning: PyTorch is not compiled with NCCL support

warnings.warn('PyTorch is not compiled with NCCL support')

0%| | 0/12097 [00:06<?, ?it/s]

Traceback (most recent call last):

File "main.py", line 188, in <module>

fire.Fire()

File "d:\anaconda3\lib\site-packages\fire\core.py", line 138, in Fire

component_trace = _Fire(component, args, parsed_flag_args, context, name)

File "d:\anaconda3\lib\site-packages\fire\core.py", line 468, in _Fire

target=component.__name__)

File "d:\anaconda3\lib\site-packages\fire\core.py", line 672, in _CallAndUpdateTrace

component = fn(*varargs, **kwargs)

File "main.py", line 103, in train

optimizer.step()

File "d:\anaconda3\lib\site-packages\torch\autograd\grad_mode.py", line 15, in decorate_context

return func(*args, **kwargs)

File "d:\anaconda3\lib\site-packages\torch\optim\adam.py", line 107, in step

denom = (exp_avg_sq.sqrt() / math.sqrt(bias_correction2)).add_(group['eps'])

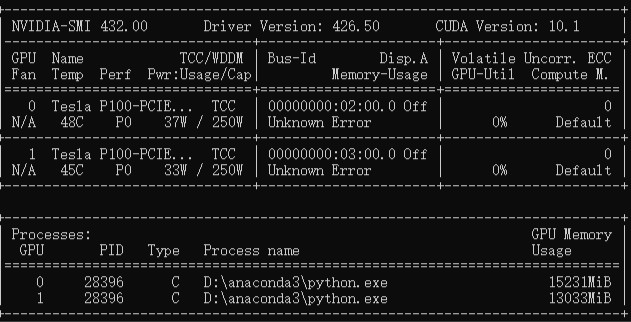

RuntimeError: CUDA out of memory. Tried to allocate 2.18 GiB (GPU 0; 15.92 GiB total capacity; 13.74 GiB already allocated; 666.81 MiB free; 14.59 GiB reserved in total by PyTorch)
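For reading the out-of-memory message, a rough back-of-the-envelope sketch may help. It takes the numbers straight from the RuntimeError above and assumes fp32 tensors (4 bytes per element). The failing line calls `exp_avg_sq.sqrt()`, which allocates a new tensor with the same shape as `exp_avg_sq`, so the 2.18 GiB request is plausibly a temporary the size of one parameter tensor. The helper names (`fp32_elements`, `fits_in_free`, `adam_training_bytes`) are hypothetical, and `adam_training_bytes` is only a lower bound: weights, gradients and Adam's two moment buffers (`exp_avg`, `exp_avg_sq`) already take four copies of the parameters before activations or the temporaries in `optimizer.step()` are counted.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2
FP32_BYTES = 4  # assumption: parameters and Adam buffers are float32


def fp32_elements(gib):
    """How many float32 values fit in an allocation of `gib` GiB."""
    return int(gib * GIB / FP32_BYTES)


def fits_in_free(request_gib, free_mib):
    """True if a request of `request_gib` GiB fits into `free_mib` MiB of free memory."""
    return request_gib * GIB <= free_mib * MIB


def adam_training_bytes(num_params, bytes_per_elem=FP32_BYTES):
    """Lower bound for weights + grads + Adam's exp_avg and exp_avg_sq buffers.

    Ignores activations, allocator overhead and the temporaries created
    inside optimizer.step(), so real usage is higher.
    """
    return 4 * num_params * bytes_per_elem


# Numbers copied from the RuntimeError above (GPU 0).
requested_gib = 2.18
free_mib = 666.81
allocated_gib, reserved_gib = 13.74, 14.59

print(fp32_elements(requested_gib))            # 585189294, about 585 million fp32 values
print(fits_in_free(requested_gib, free_mib))   # False
print(round(reserved_gib - allocated_gib, 2))  # 0.85 (GiB reserved but not allocated)
print(adam_training_bytes(100_000_000))        # 1600000000 bytes for a hypothetical 100M-param model
```

With `nn.DataParallel`, the module's parameters (and therefore Adam's buffers) must live on the first device in `device_ids`, which is GPU 0 here, so that card carries more than its share of the memory.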

I would be very grateful for any help. Thank you!

Is anybody there? I need help.