Hello. I'm having trouble with PyTorch. CUDA is properly configured, but PyTorch is not using it for some reason.

Yes, the problem is not new. I've seen a lot of discussions on this topic, but I didn't find an answer.

I'll be brief.

I'm learning PyTorch now, so basically I'm just rewriting this code and running into trouble with CUDA: 03. PyTorch Computer Vision - Zero to Mastery Learn PyTorch for Deep Learning

PyTorch+CUDA version:

print(torch.__version__)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

device

2.5.1+cu118

device(type='cuda')

The device is available:

torch.cuda.is_available(), torch.cuda.device_count()

(True, 1)

I also moved the model and the data to the device (cuda):

print(next(model_0.parameters()).device)

cuda:0

X, y = X.to(device), y.to(device)
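To make the check above explicit, here is a minimal sketch that confirms the model parameters and a batch all sit on the same device. The helper name `check_devices` and the stand-in `nn.Linear` model and tensor shapes are hypothetical and are not the course's `model_0` or dataset. In a real training loop this check would apply to each batch inside the loop, since every batch yielded by a DataLoader has to be moved to the device separately.

```python
import torch
from torch import nn


def check_devices(model: nn.Module, *tensors: torch.Tensor) -> bool:
    """Return True if all model parameters and all given tensors share one device."""
    devices = {p.device for p in model.parameters()}
    devices.update(t.device for t in tensors)
    return len(devices) == 1


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-in model and batch (hypothetical shapes, for illustration only)
model = nn.Linear(784, 10).to(device)
X = torch.randn(32, 784).to(device)
y = torch.randint(0, 10, (32,)).to(device)

print(check_devices(model, X, y))
print(model(X).device)
```

If this reports a single device but training still looks CPU-bound, the cause is probably elsewhere, for example in data loading or in how usage is being measured.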

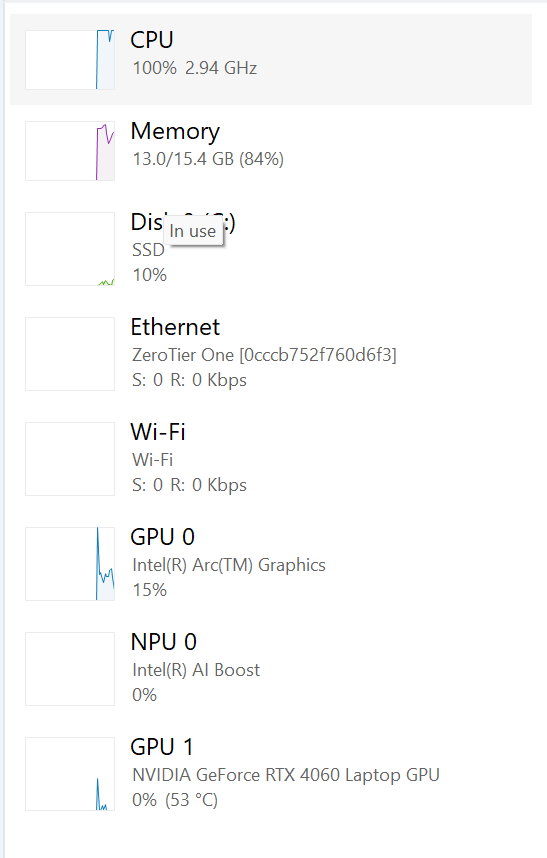

Yet during model training, only the CPU appears to be used.

GPU status during model training:

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 561.00                 Driver Version: 561.00         CUDA Version: 12.6     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                  Driver-Model | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce RTX 4060 ...  WDDM  |   00000000:01:00.0 Off |                  N/A |
| N/A   64C    P8              3W /   42W |     205MiB /   8188MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A     19996      C   ...rograms\Python\Python310\python.exe      N/A      |
+-----------------------------------------------------------------------------------------+
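As a cross-check that does not rely on nvidia-smi, the sketch below asks PyTorch itself how much GPU memory the current process holds. The helper name `gpu_memory_mib` is hypothetical; `torch.cuda.memory_allocated()` and `torch.cuda.memory_reserved()` are the calls it wraps. On a machine without CUDA it simply reports zeros. The per-process "N/A" in the table may just be a limitation of the WDDM driver model on Windows rather than proof that the process holds no GPU memory, though that is an assumption on my side.

```python
import torch


def gpu_memory_mib() -> tuple[float, float]:
    """Return (allocated, reserved) GPU memory of this process in MiB, or zeros without CUDA."""
    if not torch.cuda.is_available():
        return 0.0, 0.0
    mib = 1024 ** 2
    return torch.cuda.memory_allocated() / mib, torch.cuda.memory_reserved() / mib


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Allocate a 1024 x 1024 float32 tensor (4 MiB) on the chosen device
x = torch.randn(1024, 1024, device=device)

allocated, reserved = gpu_memory_mib()
print(f"device={device}, allocated={allocated:.1f} MiB, reserved={reserved:.1f} MiB")
```

Calling this before and after a training step should show non-zero numbers if tensors really are on the GPU.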

Looking forward to your advice, and thank you very much!