Note: when I’m running a test, I’m using the MNIST example script `examples/mnist/main.py` (main branch of the pytorch/examples repository on GitHub), run with no arguments, just `python main.py`.

I’m currently on Windows, and I’m installing PyTorch into an isolated Anaconda environment (Python 3.6) with this command: `conda install pytorch torchvision cudatoolkit=10.0 -c pytorch`

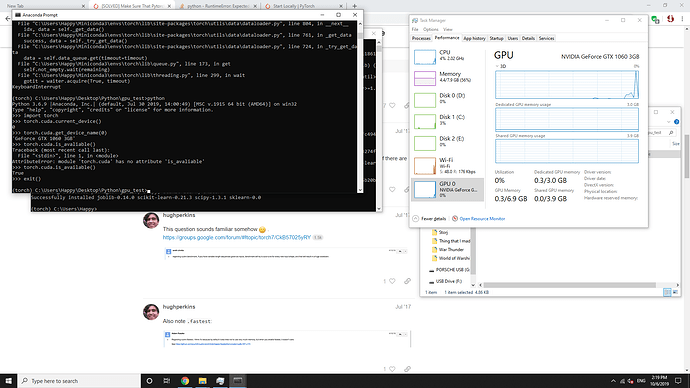
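A quick way to narrow this down is to check, from inside the same conda environment, whether the installed PyTorch build was compiled with CUDA and whether it can see the card. Below is a minimal sketch. The helper name `cuda_report` is hypothetical and only used for illustration. The `torch.version.cuda`, `torch.cuda.is_available()`, `torch.cuda.device_count()` and `torch.cuda.get_device_name()` calls are standard PyTorch.

```python
import importlib.util


def cuda_report():
    """Hypothetical diagnostic helper (not part of the MNIST example).

    Summarizes what the installed PyTorch build reports about CUDA.
    """
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}
    import torch

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version the binary was built against; None for a CPU-only build.
        "built_with_cuda": torch.version.cuda,
        # True only if the build has CUDA support and a usable driver/GPU is found.
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` comes back as `None`, conda most likely installed a CPU-only build. If it shows a version but `cuda_available` is `False`, the driver or `cudatoolkit` version is the more likely suspect.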

Here is a screenshot showing that my GPU is detected:

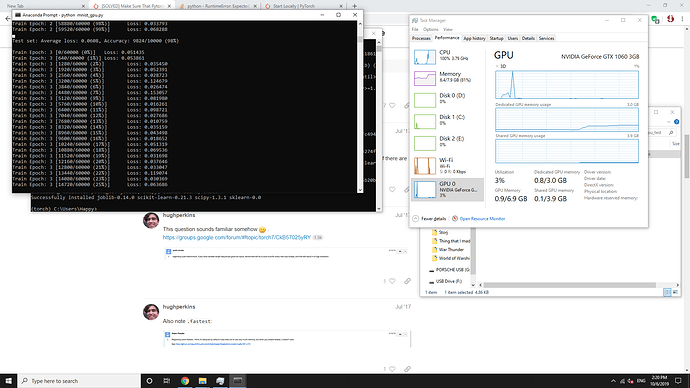

And here is a screenshot showing that the CPU is fully utilized, while the GPU is only being used for VRAM (memory), not for actual compute:

How do I fix this issue? Am I installing with the wrong command?
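To check whether the GPU is actually doing compute work, and not just holding allocated memory, one option is to run a deliberately heavy workload directly on the CUDA device and watch GPU utilization while it runs. This is a minimal sketch assuming PyTorch is installed in the active environment. `gpu_compute_check` and its `size`/`iterations` parameters are hypothetical names chosen for illustration.

```python
import importlib.util


def gpu_compute_check(size=4096, iterations=200):
    """Hypothetical helper: repeatedly multiply two random matrices on the GPU.

    Returns the device string of the result (e.g. "cuda:0"), or None if
    PyTorch is not installed or CUDA is not available in this environment.
    """
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    if not torch.cuda.is_available():
        return None

    device = torch.device("cuda")
    # Allocate both inputs directly on the GPU (this is what shows up as VRAM).
    a = torch.randn(size, size, device=device)
    b = torch.randn(size, size, device=device)
    c = a
    for _ in range(iterations):
        # Each matmul is queued asynchronously and executed by GPU kernels.
        c = a @ b
    # Block until all queued kernels have finished.
    torch.cuda.synchronize()
    return str(c.device)
```

Calling `gpu_compute_check()` should keep the GPU busy for a few seconds. Note that Windows Task Manager's default GPU graphs (such as "3D") may not reflect CUDA work. `nvidia-smi` reports utilization directly.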