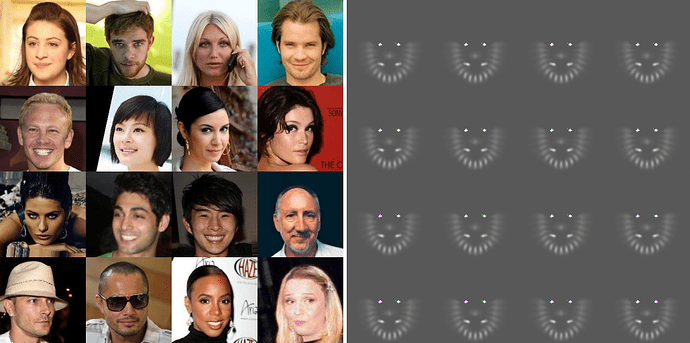

I'm building a VAE network that consists of 2 encoders and 3 decoders (for face, landmark, and mask).

I have a problem with the loss function. I want to use L1Loss and BCELoss with mean reduction, but when I use mean reduction, the output is the same for every input image.

When I switch to sum reduction, the output is correct and differs for different input images.

Is there a restriction on using mean reduction?
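
For reference, here is a minimal sketch of how the two reductions relate for the built-in losses. The tensor shapes are assumptions for illustration only (a batch of 4 images with 3 channels at 128x128); they are not taken from my real data. As far as I understand, `reduction='sum'` is just `reduction='mean'` multiplied by the number of elements, so the loss value and its gradients are scaled by that factor.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical shapes: batch of 4 images, 3 channels, 128x128, values in [0, 1).
pred = torch.rand(4, 3, 128, 128)
target = torch.rand(4, 3, 128, 128)

# Same inputs, two reductions.
l1_mean = nn.L1Loss(reduction='mean')(pred, target)
l1_sum = nn.L1Loss(reduction='sum')(pred, target)
bce_mean = nn.BCELoss(reduction='mean')(pred, target)
bce_sum = nn.BCELoss(reduction='sum')(pred, target)

# 'sum' equals 'mean' times the total number of elements.
n = pred.numel()  # 4 * 3 * 128 * 128 = 196608
print(n, l1_sum.item() / l1_mean.item(), bce_sum.item() / bce_mean.item())
```

So the two reductions differ only by a constant factor per loss term, but that factor depends on the size of the tensor each term is computed on.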

Below are my loss function and network code.

    import torch
    import torch.nn as nn

    # Loss criteria (KLDLoss is not part of torch.nn; its definition is not shown here)
    L1Loss = nn.L1Loss(reduction='mean').to(device)
    CELoss = nn.BCELoss(reduction='mean').to(device)
    kld_criterion = KLDLoss(reduction='mean').to(device)

    rec_m, (rec_f, mean_f, logvar_f), (rec_l, mean_l, logvar_l) = model(origin)

    # Calc loss
    lm_loss = CELoss(rec_l, lm)
    f_loss = L1Loss(rec_f, f)
    m_loss = CELoss(rec_m, m)
    lm_kld_loss = kld_criterion(mean_l, logvar_l)
    f_kld_loss = kld_criterion(mean_f, logvar_f)

    # Weighted sum of reconstruction and KLD terms
    loss = 4000 * (f_loss + m_loss) + 30 * (lm_kld_loss + f_kld_loss) + 2000 * lm_loss
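
To make the weighting above easier to reason about, here is a rough sketch of a KLD criterion and of the element counts involved. `KLDLossSketch` is a hypothetical stand-in, not my actual `KLDLoss`; it assumes the standard VAE KL term against a unit Gaussian, reduced over all latent elements. The sizes use the defaults from the network constructor (`nc=3`, `isize=128`, `nz=128`).

```python
import torch
import torch.nn as nn


class KLDLossSketch(nn.Module):
    """Hypothetical stand-in for KLDLoss: KL(N(mean, exp(logvar)) || N(0, I))."""

    def __init__(self, reduction='mean'):
        super().__init__()
        if reduction not in ('mean', 'sum'):
            raise ValueError("reduction must be 'mean' or 'sum'")
        self.reduction = reduction

    def forward(self, mean, logvar):
        # Element-wise KL divergence for a diagonal Gaussian.
        kld = -0.5 * (1 + logvar - mean.pow(2) - logvar.exp())
        return kld.mean() if self.reduction == 'mean' else kld.sum()


# Elements per sample in each kind of term (constructor defaults).
recon_elems = 3 * 128 * 128   # nc * isize * isize = 49152
latent_elems = 128            # nz

# Going from 'mean' to 'sum' multiplies a reconstruction term by
# batch * recon_elems and a KLD term by batch * latent_elems, so the
# reconstruction terms gain this factor relative to the KLD terms.
scale_ratio = recon_elems // latent_elems
print(scale_ratio)
```

Under these assumptions, the same weights (4000, 2000, 30) give the reconstruction terms a much larger relative weight with sum reduction than with mean reduction. That could be one reason the two settings behave differently, so the weights may need rescaling when switching reductions, but I have not verified this on my model.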

    class VAE_NET(nn.Module):
        def __init__(self, nc=3, ndf=32, nef=32, nz=128, isize=128,
                     device=torch.device("cuda:0"), is_train=True):
            super(VAE_NET, self).__init__()
            self.nz = nz

            # Encoders: one for landmarks, one for the face
            self.l_encoder = Encoder(nc=nc, nef=nef, nz=nz, isize=isize, device=device)
            self.f_encoder = Encoder(nc=nc, nef=nef, nz=nz, isize=isize, device=device)

            # Decoders: mask and face decoders take the concatenated latent (nz * 2)
            self.l_decoder = Decoder(nc=nc, ndf=ndf, nz=nz, isize=isize)
            self.m_decoder = Decoder(nc=nc, ndf=ndf, nz=nz * 2, isize=isize)
            self.f_decoder = Decoder(nc=nc, ndf=ndf, nz=nz * 2, isize=isize)

            # Freeze all encoder and decoder weights when not training
            # (the original referenced self.encoder / self.decoder, which do not exist)
            if not is_train:
                for param in self.parameters():
                    param.requires_grad = False

        def forward(self, x):
            latent_l, mean_l, logvar_l = self.l_encoder(x)
            latent_f, mean_f, logvar_f = self.f_encoder(x)

            concat_latent = torch.cat((latent_l, latent_f), 1)

            rec_l = self.l_decoder(latent_l)
            rec_m = self.m_decoder(concat_latent)
            rec_f = self.f_decoder(concat_latent)

            # Return the log-variances: the training code unpacks these as
            # logvar_f / logvar_l (the original returned latent_f / latent_l here)
            return rec_m, (rec_f, mean_f, logvar_f), (rec_l, mean_l, logvar_l)
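
The `Encoder` is not shown above; each one returns a sampled latent together with its mean and log-variance. As a rough sketch of how that sampling step usually works (the reparameterization trick), assuming my encoder follows the standard form, with `reparameterize` being a hypothetical helper name:

```python
import torch


def reparameterize(mean: torch.Tensor, logvar: torch.Tensor) -> torch.Tensor:
    """Sample latent = mean + std * eps with eps ~ N(0, I) (hypothetical helper)."""
    std = torch.exp(0.5 * logvar)   # log-variance -> standard deviation
    eps = torch.randn_like(std)     # noise with the same shape and device
    return mean + eps * std


# Hypothetical batch of 4 samples with nz = 128 latent dimensions.
mean = torch.zeros(4, 128)
logvar = torch.zeros(4, 128)
latent = reparameterize(mean, logvar)
print(latent.shape)
```

The KLD criterion needs `mean` and `logvar`, not the sampled `latent`, so it matters which of the three values the network returns.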

Please let me know how to use mean reduction.