Hi,

Essentially, I used to train a model similar to VGG-M-2048 in Keras and never had even a hint of memory issues. But when I try a similar model in PyTorch, I keep running out of memory. It seems to be related to the FC (“classifier”) block: I had to shrink it (both the number and the size of the dense layers) before the model was usable at all. But that makes it hard for me to make a comparison.
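For a rough sense of scale, the classifier's size can be estimated by hand. A dense (`Linear`) layer with `in` inputs and `out` outputs holds `in * out` weights plus `out` biases. The sketch below applies that to the classifier sizes listed in the summary below (373248 → 4096 → 2048 → 101) and converts the count to GiB. It assumes float32 parameters (4 bytes each) and ignores activations and framework overhead. The `copies` multiplier is also an assumption: one extra copy for gradients, and two more for Adam-style optimizers that keep two moment buffers per parameter.

```python
# Rough parameter/memory estimate for a stack of fully connected layers.
# Assumptions: float32 parameters (4 bytes), every layer has a bias,
# activations and framework overhead are ignored.

def dense_params(sizes):
    """Parameters of Linear(sizes[0], sizes[1]) -> Linear(sizes[1], sizes[2]) -> ..."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def dense_memory_gib(sizes, bytes_per_param=4, copies=1):
    """Memory in GiB. copies=2 adds gradients; copies=4 also covers Adam's two moment buffers."""
    return dense_params(sizes) * bytes_per_param * copies / 1024**3


# Classifier sizes taken from the model summary (flatten -> 4096 -> 2048 -> 101).
classifier = [373248, 4096, 2048, 101]

first_layer = dense_params(classifier[:2])
total = dense_params(classifier)
print(f"first Linear layer: {first_layer:,} parameters")
print(f"whole classifier:   {total:,} parameters")
for label, copies in [("weights only", 1), ("+ gradients", 2), ("+ Adam state", 4)]:
    print(f"{label:>13}: {dense_memory_gib(classifier, copies=copies):.1f} GiB")
```

With these sizes the first `Linear` layer alone holds about 1.53 billion of the roughly 1.54 billion classifier parameters, which is about 5.7 GiB in float32 before gradients or optimizer state are counted. That would be consistent with the dense block being what exhausts GPU memory.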

Here's the PyTorch model summary:

    VGG(
      (features): Sequential(
        (0): Conv2d(2, 96, kernel_size=(7, 7), stride=(1, 1), padding=(1, 1))
        (1): ReLU(inplace)
        (2): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
        (3): Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(1, 1))
        (4): ReLU(inplace)
        (5): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
        (6): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (7): ReLU(inplace)
        (8): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (9): ReLU(inplace)
        (10): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (11): ReLU(inplace)
        (12): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
      )
      (classifier): Sequential(
        (0): Dropout(p=0.5)
        (1): Linear(in_features=373248, out_features=4096, bias=True)
        (2): ReLU(inplace)
        (3): Dropout(p=0.5)
        (4): Linear(in_features=4096, out_features=2048, bias=True)
        (5): ReLU(inplace)
        (6): Linear(in_features=2048, out_features=101, bias=True)
      )
    )
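The `in_features=373248` of the first `Linear` layer is fixed by the spatial size that comes out of `features`. The sketch below walks the `features` block with the standard output-size formula used by `Conv2d` and `MaxPool2d` (with `ceil_mode=False`) to show where that number can come from. The 2×224×224 input is an assumption: the post does not state the input size, and 224 is simply a size that reproduces 512 × 27 × 27 = 373248. The second call is a hypothetical what-if that keeps the summary's padding but uses stride 2 in the first two convolutions, as the published VGG-M configuration does; whether the Keras model does the same is not shown here.

```python
# Output size of Conv2d / MaxPool2d along one spatial dimension
# (PyTorch's formula, ceil_mode=False).
def out_size(size, kernel, stride=1, padding=0, dilation=1):
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def flattened_features(size, conv1_stride=1, conv2_stride=1, channels=512):
    """Walk the `features` block from the summary and return the flattened size."""
    size = out_size(size, 7, conv1_stride, padding=1)  # (0) Conv2d 7x7, padding 1
    size = out_size(size, 2, 2)                        # (2) MaxPool2d 2x2, stride 2
    size = out_size(size, 5, conv2_stride, padding=1)  # (3) Conv2d 5x5, padding 1
    size = out_size(size, 2, 2)                        # (5) MaxPool2d 2x2, stride 2
    for _ in range(3):                                 # (6), (8), (10) Conv2d 3x3, padding 1
        size = out_size(size, 3, 1, padding=1)
    size = out_size(size, 2, 2)                        # (12) MaxPool2d 2x2, stride 2
    return channels * size * size


# Assumed 224x224 input (not stated in the post).
print(flattened_features(224))                                  # 373248 (strides as in the summary)
print(flattened_features(224, conv1_stride=2, conv2_stride=2))  # 18432 (hypothetical stride-2 variant)
```

In that stride-2 variant the first dense layer would take 18,432 inputs instead of 373,248, about 20× fewer weights in that layer (18432 × 4096 ≈ 75.5 million, versus about 1.53 billion).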

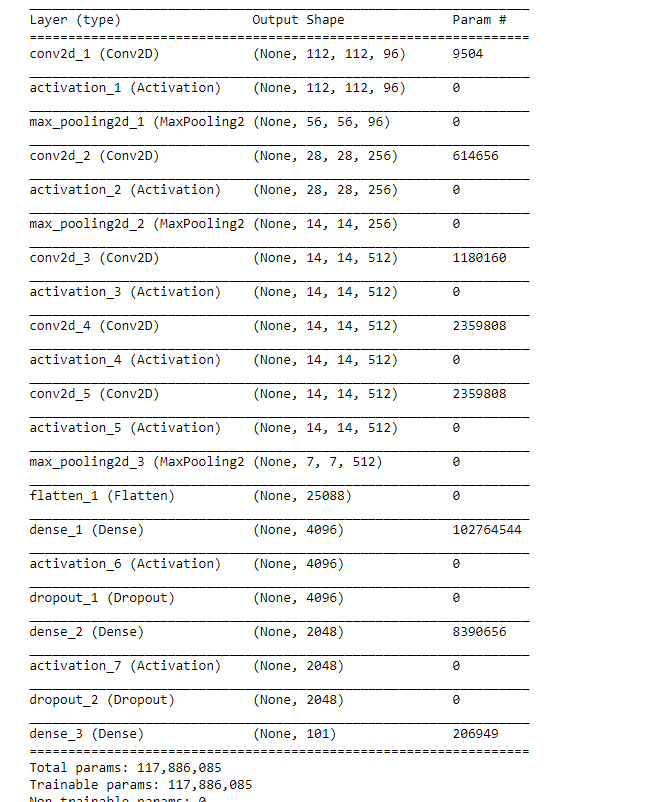

And here’s the equivalent Keras model: