The entire code:

import torch
import numpy as np
import matplotlib.pyplot as plt
import os
from scipy import signal
import math
import scipy
import sympy as sym

# let the program continue when more than one OpenMP runtime is loaded
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

f_s = 48000  # sampling frequency (Hz)
f_c = 16000  # cutoff frequency (Hz)
f_l = 19000
f_r = 17000
b = f_l - f_r
n_order = 5  # Butterworth filter order
cut_off = 10

print(torch.__version__)

def high_pass_filter():
    # generate a random signal
    array_list = np.random.randn(1, 100, 1000)
    tensor_array = torch.from_numpy(array_list)
    reshape_tensor = tensor_array.reshape(2, 10, -1)  # shape (2, 10, 5000)
    reshape_img = reshape_tensor.reshape(-1)

    # compute the fft (1-D, along the last dimension)
    fft_signal = torch.fft.fft(reshape_tensor)
    print(fft_signal)
    reshape_fft_signal = fft_signal.reshape(-1)

    normalize_cutoff = (((f_c - b)/cut_off) / 2) / (f_s/2)  # normalize cut_off
    print("normalize : ", normalize_cutoff)

    down = math.sqrt(1 + ((f_c / normalize_cutoff)**(2*n_order)))
    print("down response : ", down)

    # a single scalar gain, applied equally to every frequency bin
    butterworth = torch.tensor(1 / down)
    G = torch.mul(butterworth, fft_signal)
    G_reshape = G.reshape(-1)

    # ifft2 inverts over the last two dimensions, whereas the fft above was 1-D
    output_filter = torch.fft.ifft2(G)
    # print(output_filter)
    output_filter_reshape = output_filter.reshape(-1)

    # plt.figure()
    # plt.plot(reshape_img)
    # plt.figure()
    # plt.plot(reshape_fft_signal)
    # plt.figure()
    # plt.plot(G_reshape)
    # plt.figure()
    # plt.plot(output_filter_reshape)
    # plt.show()

    return output_filter
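With the constants defined at the top, the two expressions in `high_pass_filter` can be evaluated by hand. The formula for `down` has the shape of the Butterworth high-pass magnitude 1 / sqrt(1 + (f_c / f)^(2n)), but it is evaluated at the single value f = normalize_cutoff, so `butterworth` is one scalar rather than a gain per frequency:

```latex
\begin{aligned}
b &= f_l - f_r = 19000 - 17000 = 2000 \\
\text{normalize\_cutoff} &= \frac{\big((f_c - b)/\text{cut\_off}\big)/2}{f_s/2}
  = \frac{(14000/10)/2}{24000} = \frac{700}{24000} \approx 0.02917 \\
\frac{f_c}{\text{normalize\_cutoff}} &= \frac{16000 \cdot 24000}{700} \approx 5.486 \times 10^{5} \\
\text{down} &= \sqrt{1 + \left(\frac{f_c}{\text{normalize\_cutoff}}\right)^{2 \cdot 5}}
  \approx \left(5.486 \times 10^{5}\right)^{5} \approx 4.97 \times 10^{28} \\
\text{butterworth} &= \frac{1}{\text{down}} \approx 2.0 \times 10^{-29}
\end{aligned}
```

Every bin of `fft_signal` is therefore multiplied by the same factor of about 2e-29, which is in line with the values of order 1e-30 in the X dataset printed further down.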

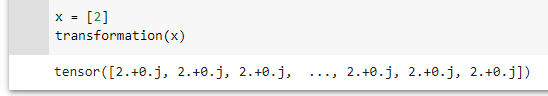
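If the intent is a gain that actually depends on frequency, one option is to evaluate the Butterworth high-pass magnitude 1 / sqrt(1 + (f_c / f)^(2n)) at every FFT bin frequency and invert along the same (last) dimension. The sketch below assumes that intent; `butterworth_highpass` is a hypothetical helper name, and it reuses `f_s`, `f_c` and `n_order` from the code. It applies a zero-phase, magnitude-only gain in the frequency domain, which is not the same as running a causal IIR Butterworth filter such as one designed with `scipy.signal.butter`.

```python
import math

import torch


def butterworth_highpass(x: torch.Tensor, f_s: float, f_c: float, order: int) -> torch.Tensor:
    """Hypothetical helper: zero-phase high-pass along the last dimension of a real x.

    Every FFT bin at frequency f (Hz) is scaled by 1 / sqrt(1 + (f_c / |f|) ** (2 * order)).
    """
    n = x.shape[-1]
    # Frequency in Hz of each bin returned by torch.fft.fft (negative bins included)
    abs_f = torch.fft.fftfreq(n, d=1.0 / f_s, dtype=torch.float64).abs()
    gain = torch.zeros_like(abs_f)  # the DC bin (f = 0) is blocked completely
    nonzero = abs_f > 0
    gain[nonzero] = 1.0 / torch.sqrt(1.0 + (f_c / abs_f[nonzero]) ** (2 * order))
    # The +f and -f bins get the same real gain, so the result is real for real input
    spectrum = torch.fft.fft(x, dim=-1)
    return torch.fft.ifft(spectrum * gain, dim=-1).real


if __name__ == "__main__":
    f_s, f_c, n_order = 48000, 16000, 5  # values from the question's code
    t = torch.arange(4800, dtype=torch.float64) / f_s
    low = torch.sin(2 * math.pi * 1000 * t)    # 1 kHz, far below f_c
    high = torch.sin(2 * math.pi * 20000 * t)  # 20 kHz, above f_c
    y = butterworth_highpass(low + high, f_s, f_c, n_order)
    # the gain is about 0.950 at 20 kHz and about 9.5e-7 at 1 kHz
    print(y.abs().max())
```

Because the gain vector has the length of the last dimension, it broadcasts over leading dimensions, so the same call works on a (2, 10, 5000) tensor.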

def transformation(x, real_sigma_interval=torch.arange(-1, 1 + 0.001, 0.001)):
    x = x
    d = []
    for sigma in real_sigma_interval:
        # normalized exponential weights, one per slice along the first dimension of x
        exp = torch.exp(sigma * torch.tensor(range(len(x))))
        exp /= torch.sum(exp)

        # Option 1: reshape the weights to (2, 1, 1) so they broadcast over x
        out_1 = exp.view(2, 1, 1) * x

        # Option 2: the same weighting written with einsum
        out_2 = torch.einsum('i, ijk->ijk', [exp, x])

        # Same result
        # print(torch.all(out_1 == out_2))
        # exponentiated_signal = exp * x.T

        d.append(out_1)

    return torch.tensor([torch.fft.rfft(k) for k in d])

if __name__ == '__main__':
    signal = high_pass_filter()
    print("X signal : ", signal)
    print(signal.shape)
    l = transformation(signal)
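For comparison, here is a minimal sketch of what `transformation` appears to compute, assuming the goal is: for each sigma, weight the slices of x along its first dimension by the normalized weights exp(sigma * i), take an FFT along the last dimension, and collect everything in one tensor. It differs from the code above in three deliberate ways: the weights are cast to x's dtype, `torch.fft.fft` is used because x is complex (`torch.fft.rfft` only accepts real input), and the results are combined with broadcasting (equivalently `torch.stack`) rather than `torch.tensor`, which cannot build a tensor from a list of multi-element tensors. `weighted_spectra` is a hypothetical name. With the default grid of about 2000 sigma values and a (2, 10, 5000) complex128 input, the weighted copies alone take roughly 3 GB, in the original loop as well as here.

```python
import torch


def weighted_spectra(x: torch.Tensor, sigmas: torch.Tensor) -> torch.Tensor:
    """Hypothetical vectorized take on `transformation`.

    x:      tensor of shape (N, ...), real or complex
    sigmas: 1-D tensor with S exponent values
    returns a complex tensor of shape (S, N, ...) holding FFTs along the last dim
    """
    idx = torch.arange(x.shape[0], dtype=torch.float64)
    # softmax(sigma * i) equals exp(sigma * i) / sum_i exp(sigma * i); one row per sigma
    weights = torch.softmax(sigmas.to(torch.float64)[:, None] * idx[None, :], dim=1)
    # Match x's dtype and append singleton dims so weights broadcast as (S, N, 1, ..., 1)
    weights = weights.to(x.dtype).reshape(weights.shape + (1,) * (x.dim() - 1))
    weighted = weights * x.unsqueeze(0)  # shape (S, N, ...)
    # fft rather than rfft: x may be complex, and rfft only accepts real input
    return torch.fft.fft(weighted, dim=-1)


if __name__ == "__main__":
    real = torch.randn(2, 10, 8, dtype=torch.float64)
    imag = torch.randn(2, 10, 8, dtype=torch.float64)
    x = torch.complex(real, imag)  # small complex stand-in for the (2, 10, 5000) signal
    sigmas = torch.arange(-1, 1 + 0.5, 0.5)  # coarse grid instead of the 0.001 step
    out = weighted_spectra(x, sigmas)
    print(out.shape)  # torch.Size([5, 2, 10, 8])
```

Each `out[i]` corresponds to one entry of the list `d` (Option 1) followed by an FFT along the last dimension.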

Here is my X dataset:

tensor([[[ 3.4059e-30+1.0762e-46j,  4.8306e-30-1.9032e-46j,
           3.6210e-30-9.2822e-46j,  ...,
          -3.9725e-30-1.3303e-46j,  3.0239e-30-6.3144e-46j,
          -3.3859e-30+5.0432e-46j],
         [ 1.2419e-29+5.6312e-30j,  1.7369e-31+3.3395e-30j,
          -5.4256e-30-1.0258e-30j,  ...,
           1.9680e-30+8.6273e-30j,  1.7434e-30-2.6541e-30j,
           7.2166e-30+2.3827e-30j],
         [ 1.1664e-30+3.6048e-30j,  5.4630e-30+2.5606e-30j,
          -1.0161e-30-1.3846e-30j,  ...,
          -3.3588e-30+2.0733e-30j, -1.9508e-30-9.3269e-31j,
           1.3305e-32-7.5297e-30j],
         ...,
         [ 2.3808e-30+1.4374e-30j, -4.0271e-30+3.6610e-30j,
          -7.3671e-30+2.4534e-30j,  ...,
           2.5958e-30-3.9952e-30j,  4.3275e-30-1.1570e-30j,
          -2.1836e-30-4.2820e-30j],
         [ 1.1664e-30-3.6048e-30j,  5.4630e-30-2.5606e-30j,
          -1.0161e-30+1.3846e-30j,  ...,
          -3.3588e-30-2.0733e-30j, -1.9508e-30+9.3269e-31j,
           1.3305e-32+7.5297e-30j],
         [ 1.2419e-29-5.6312e-30j,  1.7369e-31-3.3395e-30j,
          -5.4256e-30+1.0258e-30j,  ...,
           1.9680e-30-8.6273e-30j,  1.7434e-30+2.6541e-30j,
           7.2166e-30-2.3827e-30j]],

        [[-1.0756e-30+1.9013e-46j,  1.2508e-29-3.5579e-46j,
           4.4410e-30-3.9580e-46j,  ...,
           5.8015e-30-7.1195e-46j, -4.0934e-30+2.1252e-45j,
           1.0737e-29+2.8130e-45j],
         [-6.4574e-30+8.5894e-30j, -2.3886e-31+9.6275e-30j,
          -1.6730e-30-6.5154e-30j,  ...,
          -2.2550e-30-1.3787e-30j,  2.2739e-30-2.2057e-30j,
          -7.7044e-31+1.0369e-30j],
         [ 7.7674e-31+1.1331e-31j, -2.0076e-30+4.8946e-31j,
          -1.1086e-30-3.7737e-30j,  ...,
           5.4034e-30+3.3697e-30j, -2.9261e-31-3.7502e-30j,
          -6.2307e-31+2.5731e-30j],
         ...,
         [-4.2135e-30-1.2409e-31j, -5.0822e-30-1.0209e-30j,
          -4.2199e-30+2.1307e-30j,  ...,
          -8.1933e-30+3.8817e-30j, -1.7231e-30+8.7054e-31j,
          -5.1156e-30+7.2661e-31j],
         [ 7.7674e-31-1.1331e-31j, -2.0076e-30-4.8946e-31j,
          -1.1086e-30+3.7737e-30j,  ...,
           5.4034e-30-3.3697e-30j, -2.9261e-31+3.7502e-30j,
          -6.2307e-31-2.5731e-30j],
         [-6.4574e-30-8.5894e-30j, -2.3886e-31-9.6275e-30j,
          -1.6730e-30+6.5154e-30j,  ...,
          -2.2550e-30+1.3787e-30j,  2.2739e-30+2.2057e-30j,
          -7.7044e-31-1.0369e-30j]]], dtype=torch.complex128)

Shape: torch.Size([2, 10, 5000])
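The structure of this output follows from the code. `fft_signal` is a 1-D FFT along the last dimension, but `output_filter` uses `torch.fft.ifft2`, which inverts over the last two dimensions. Because the gain is a single scalar g, linearity gives ifft2(g * fft(x)) = g * ifft(x, dim=-2): the last-dimension FFT cancels, leaving a length-10 inverse FFT along dimension -2 of the real input. Row 0 of each block is then a mean (real up to round-off, hence the ~1e-46 imaginary parts), and rows k and 10 - k are complex conjugates, which matches the second/last and third/second-to-last row pairs printed above. The check below is a sketch on small random stand-in data with an assumed gain of 2e-29, not the question's actual input.

```python
import torch

torch.manual_seed(0)
x = torch.randn(2, 10, 50, dtype=torch.float64)  # small real stand-in for the (2, 10, 5000) data
g = 2.0e-29                                      # stand-in for the scalar 1 / down
tol = 1e-12 * g                                  # absolute tolerance scaled to the tiny values

# What high_pass_filter does: 1-D fft along the last dim, scalar gain, then ifft2
out = torch.fft.ifft2(g * torch.fft.fft(x))
# Equivalent form: the last-dim fft cancels, leaving an inverse FFT along dim -2
expected = g * torch.fft.ifft(x, dim=-2)

print(torch.allclose(out, expected, rtol=1e-9, atol=tol))
# Row 0 of each block is the mean over dim -2, so its imaginary part is only round-off
print(out[:, 0].imag.abs().max())
# Rows k and 10 - k are complex conjugates (here k = 1)
print(torch.allclose(out[:, 1].real, out[:, 9].real, rtol=1e-9, atol=tol)
      and torch.allclose(out[:, 1].imag, -out[:, 9].imag, rtol=1e-9, atol=tol))
```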