Hey there,

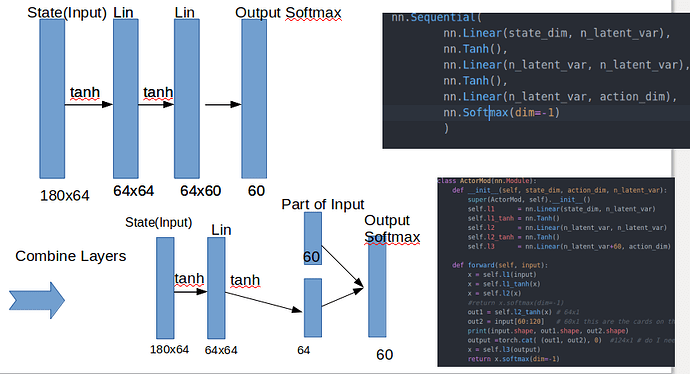

I would like to change my nn.Module so that several inputs are combined before the final softmax output layer.

I read that nn.Sequential does not support multiple inputs, so I wrote a separate module with its own forward method (see the picture).
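
A sketch of what such a module might look like. It assumes one branch maps the 180-feature state to 64 hidden features and the raw state is passed to the output layer as the second input, which would match the 64 and 180 in the error message below. `TwoBranchPolicy`, `hidden_dim`, and `action_dim=60` are hypothetical names and values, not taken from the picture. It concatenates along the last (feature) dimension.

```python
import torch
import torch.nn as nn


class TwoBranchPolicy(nn.Module):
    """Hypothetical stand-in for the module in the picture (all sizes are assumptions)."""

    def __init__(self, state_dim=180, hidden_dim=64, action_dim=60):
        super().__init__()
        # Branch 1: a small MLP mapping the state to hidden_dim features.
        self.branch1 = nn.Sequential(
            nn.Linear(state_dim, hidden_dim),
            nn.Tanh(),
        )
        # The output layer sees both branches: hidden_dim + state_dim features.
        self.out = nn.Linear(hidden_dim + state_dim, action_dim)

    def forward(self, state):
        out1 = self.branch1(state)  # shape [..., hidden_dim]
        out2 = state                # shape [..., state_dim], raw state as second input
        # Join along the LAST dimension so both [180] and [n, 180] inputs work.
        combined = torch.cat((out1, out2), dim=-1)
        return torch.softmax(self.out(combined), dim=-1)
```

Called as `TwoBranchPolicy()(torch.randn(n, 180))`, it returns an `[n, 60]` tensor whose rows each sum to 1; a single state of shape `[180]` gives a `[60]` tensor.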

With my nn.Sequential version, I can pass in state input vectors of torch.Size([180]), torch.Size([1999, 180]), or any other 2D tensor of torch.Size([n, 180]), and everything works.
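
The Sequential version copes with both shapes because `nn.Linear` acts only on the last dimension and treats any leading dimensions as batch dimensions. A minimal check, with hypothetical layer sizes (64 hidden units, 60 actions):

```python
import torch
import torch.nn as nn

# Hypothetical sizes: 180 state features, 64 hidden units, 60 actions.
seq = nn.Sequential(
    nn.Linear(180, 64),
    nn.Tanh(),
    nn.Linear(64, 60),
    nn.Softmax(dim=-1),  # normalise over the action (last) dimension
)

# nn.Linear works on the last dimension, so both calls succeed.
print(seq(torch.randn(180)).shape)        # torch.Size([60])
print(seq(torch.randn(1999, 180)).shape)  # torch.Size([1999, 60])
```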

However, with my separate module I get the following error as soon as I pass in a 2D tensor of torch.Size([n, 180]):

    Traceback (most recent call last):
      File "ppo_witches.py", line 289, in <module>
        main()
      File "ppo_witches.py", line 257, in main
        reward_mean, wrong_moves = ppo.update(memory)
      File "ppo_witches.py", line 168, in update
        logprobs, state_values, dist_entropy = self.policy.evaluate(old_states, old_actions)
      File "ppo_witches.py", line 120, in evaluate
        action_probs = self.action_layer(state)
      File "/home/mlamprecht/Documents/mcts_cardgame/my_env/lib/python3.6/site-packages/torch/nn/modules/module.py", line 532, in __call__
        result = self.forward(*input, **kwargs)
      File "ppo_witches.py", line 71, in forward
        output =torch.cat( (out1, out2), 0)
    RuntimeError: invalid argument 0: Sizes of tensors must match except in dimension 0. Got 64 and 180 in dimension 1 at /pytorch/aten/src/TH/generic/THTensor.cpp:612
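
The error appears to come from the concatenation dimension. For a single state, `out1` and `out2` are 1D, so dimension 0 is the feature dimension and `torch.cat(..., 0)` joins the features. For a batch, dimension 0 is the batch dimension, so `torch.cat` tries to stack rows and requires the feature sizes (64 and 180) to match. Concatenating along the last dimension handles both cases. The batch size of 5 below is an illustrative assumption:

```python
import torch

out1 = torch.randn(5, 64)   # e.g. hidden-branch output for a batch of 5 states
out2 = torch.randn(5, 180)  # e.g. the raw states for the same batch

# dim=0 is the batch dimension here, so all other dimensions must match (64 != 180).
try:
    torch.cat((out1, out2), 0)
except RuntimeError:
    print("torch.cat along dim 0 raises RuntimeError")

# dim=-1 joins the feature dimensions instead.
print(torch.cat((out1, out2), dim=-1).shape)  # torch.Size([5, 244])

# For unbatched 1D tensors the last dimension IS dimension 0, so this still works.
print(torch.cat((torch.randn(64), torch.randn(180)), dim=-1).shape)  # torch.Size([244])
```

In the module, that would mean writing the concatenation as `torch.cat((out1, out2), dim=-1)`; any softmax applied afterwards should likewise use `dim=-1`.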

Does anyone know a clean way to make the separate module accept both a single state and a batch of states, the way the nn.Sequential version does?