I have x = nn.linear() following x=conv2d()

I understand in_features of linear() must be calculated from x. by calculating channels * height * width of x.

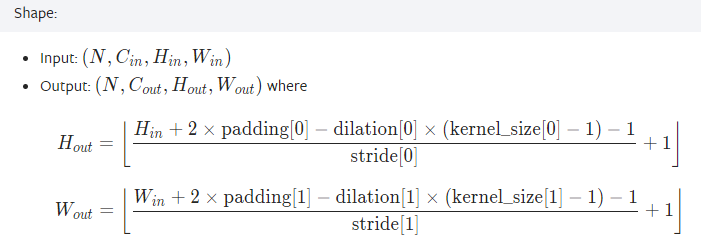

I know from, the documentation for conv2d, this stuff I dont get:

I dont follow this post on nn.Conv2d output comptation), but it says you can get dimensions of x with x.shape.

I still dont understand how to apply those equations from the documentation of nn.conv.

I know:

- The input features are the pixel size (i.e.: h*w) of the image * channels and

- pixel size (H*W) of your image after applying Conv2d comes from the above equations, but I dont understand it. Are we just defining notation in the input: and output: parts?

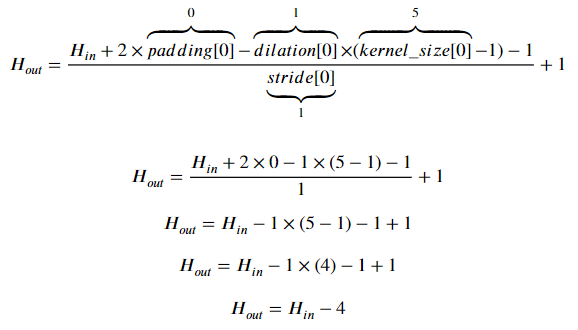

Then it gives formulas for H_out and W_out, the height and width of the image output from conv2d based on H_in and W_in, the height and width of image input to conv2D, respectively. I don’t understand how to apply those equations.

- how do I get H_in and W_in? Would those be x[2] and x[3] based on the assumptions that what comes after input: and ouput: are the dimensions of x. I.e.: N and the number of channels are the first two dimensions.

Assuming:

- we are using x as it is after the line just before linear() and

- input and output above show conv2D’s input and output orders of dimensions and the notation for the equations.

- Are padding, dilation, and kernel_size taken from the parameters values of the preceding conv2D call? If the preceding call is:

self.conv2 = nn.Conv2d(6, 16, 5)

This means:self.conv2 = nn.Conv2d(in_channels = 6, out_channels = 16, kernel_size = 5)kernel size is 5, what is kernel_size[0] and where to I get padding and dilation?

Then, say we have a call to nn.Linear afterward. It’s in_features parameter would be C * H_{out} * W_{out}, where:

C = the out_channels parameter used in the prior conv2D call?

The current sad state of the code:

class Net(nn.Module):

def __init__(self):

super().__init__()

# Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

self.conv1 = nn.Conv2d(in_channels = 1, out_channels = 6, kernel_size = 5)

self.conv2 = nn.Conv2d(in_channels = 6, out_channels = 16, kernel_size = 5)

self.pool = nn.MaxPool2d(2, 2)

# To decide the number of input features your function can take, you first need to figure out what is the output pixel value of your final conv2d.

# Let's say your final conv2d function returns a 3x3 pixel image with output channels as 50, in such case the input features to your linear function should be 3x3x50

# and you can have any number of output features.

#calculate H & W of self.conv2 output from

#I GOT STUCK HERE. I WENT TO TUESDAY OFFICE HOURS 5 MIN LATE AND NO ONE WAS THERE

#I DIDN'T GET AN ANSWER TO MY POST #1032 ABOUT POST #815

# print(self.conv2.shape)

# It seems self.conv2 has no shape attribute.

# H_in =

# H =

# W =

#Linear(in_features, out_features, bias=True, device=None, dtype=None)

#self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc1 = nn.Linear(16 * H * W, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = torch.flatten(x, 1) # flatten all dimensions except batch

x = F.relu(self.fc1(x)) #relu is an activation function

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

net = Net()