Hello,

I get the following error when I run this:

>> x = torch.tensor(1).cuda()

>> AssertionError: Torch not compiled with CUDA enabled

I know this is a known issue, but none of the solutions I found online have worked for me. Here are the details of my setup:

nvidia-smi gives

Sun Mar 12 22:59:10 2023

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 530.30.02 Driver Version: 530.30.02 CUDA Version: 12.1 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 Tesla V100-SXM2-16GB On | 00000000:00:04.0 Off | 0 |

| N/A 36C P0 33W / 300W| 0MiB / 16384MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 1 Tesla V100-SXM2-16GB On | 00000000:00:05.0 Off | 0 |

| N/A 35C P0 32W / 300W| 0MiB / 16384MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| No running processes found |

+---------------------------------------------------------------------------------------+

nvcc -V gives

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Tue_Feb__7_19:32:13_PST_2023

Cuda compilation tools, release 12.1, V12.1.66

Build cuda_12.1.r12.1/compiler.32415258_0

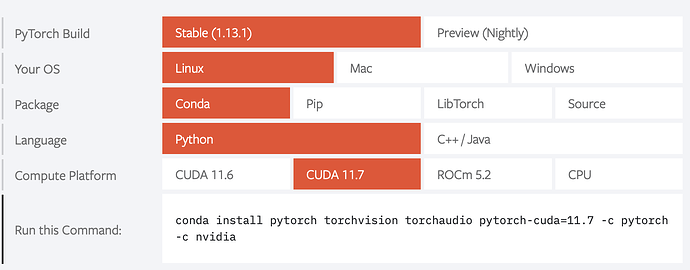

I installed PyTorch with the command from the PyTorch website:

conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia

pytorch-cuda is installed in my environment.

conda list | grep cuda gives

cuda 11.7.1 0 nvidia

cuda-cccl 11.7.91 0 nvidia

cuda-command-line-tools 11.7.1 0 nvidia

cuda-compiler 11.7.1 0 nvidia

cuda-cudart 11.7.99 0 nvidia

cuda-cudart-dev 11.7.99 0 nvidia

cuda-cuobjdump 11.7.91 0 nvidia

cuda-cupti 11.7.101 0 nvidia

cuda-cuxxfilt 11.7.91 0 nvidia

cuda-demo-suite 12.1.55 0 nvidia

cuda-documentation 12.1.55 0 nvidia

cuda-driver-dev 11.7.99 0 nvidia

cuda-gdb 12.1.55 0 nvidia

cuda-libraries 11.7.1 0 nvidia

cuda-libraries-dev 11.7.1 0 nvidia

cuda-memcheck 11.8.86 0 nvidia

cuda-nsight 12.1.55 0 nvidia

cuda-nsight-compute 12.1.0 0 nvidia

cuda-nvcc 11.7.99 0 nvidia

cuda-nvdisasm 12.1.55 0 nvidia

cuda-nvml-dev 11.7.91 0 nvidia

cuda-nvprof 12.1.55 0 nvidia

cuda-nvprune 11.7.91 0 nvidia

cuda-nvrtc 11.7.99 0 nvidia

cuda-nvrtc-dev 11.7.99 0 nvidia

cuda-nvtx 11.7.91 0 nvidia

cuda-nvvp 12.1.55 0 nvidia

cuda-runtime 11.7.1 0 nvidia

cuda-sanitizer-api 12.1.55 0 nvidia

cuda-toolkit 11.7.1 0 nvidia

cuda-tools 11.7.1 0 nvidia

cuda-visual-tools 11.7.1 0 nvidia

cudatoolkit 11.3.1 ha36c431_9 nvidia

pytorch-cuda 11.7 h67b0de4_1 pytorch

pytorch-mutex 1.0 cuda pytorch

python -m torch.utils.collect_env gives

Collecting environment information...

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: Could not collect

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: Could not collect

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.10.9 (main, Mar 1 2023, 18:23:06) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.10.0-21-cloud-amd64-x86_64-with-glibc2.31

Is CUDA available: False

CUDA runtime version: 12.1.66

GPU models and configuration:

GPU 0: Tesla V100-SXM2-16GB

GPU 1: Tesla V100-SXM2-16GB

Nvidia driver version: 530.30.02

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.23.5

[pip3] numpydoc==1.5.0

[pip3] torch==1.12.1

[pip3] torchaudio==0.12.1

[pip3] torchvision==0.13.1

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 ha36c431_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py310h7f8727e_0

[conda] mkl_fft 1.3.1 py310hd6ae3a3_0

[conda] mkl_random 1.2.2 py310h00e6091_0

[conda] numpy 1.23.5 py310hd5efca6_0

[conda] numpy-base 1.23.5 py310h8e6c178_0

[conda] numpydoc 1.5.0 py310h06a4308_0

[conda] pytorch 1.12.1 cpu_py310hb1f1ab4_1

[conda] pytorch-cuda 11.7 h67b0de4_1 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.12.1 py310_cu113 pytorch

[conda] torchvision 0.13.1 py310_cu113 pytorch
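The `[conda]` lines above can be scanned for CPU-only builds by looking at the build-string column. What follows is a minimal sketch, assuming that a conda build string beginning with `cpu` marks a CPU-only package (a naming convention, not something `collect_env` itself reports). `find_cpu_only_builds` is a hypothetical helper name, and the sample text is copied from the listing above.

```python
from typing import List


def find_cpu_only_builds(listing: str) -> List[str]:
    """Return names of packages whose build string starts with 'cpu'.

    Assumption: each line looks like '[conda] name version build [channel]',
    and a build string starting with 'cpu' indicates a CPU-only build.
    """
    cpu_packages = []
    for line in listing.splitlines():
        # Drop the '[conda]' prefix that collect_env adds, then split columns.
        fields = line.replace("[conda]", "").split()
        # Need at least name, version and build; the channel is optional.
        if len(fields) < 3:
            continue
        name, build = fields[0], fields[2]
        if build.lower().startswith("cpu"):
            cpu_packages.append(name)
    return cpu_packages


# Lines copied from the collect_env output in this post.
sample = """\
[conda] pytorch 1.12.1 cpu_py310hb1f1ab4_1
[conda] pytorch-cuda 11.7 h67b0de4_1 pytorch
[conda] pytorch-mutex 1.0 cuda pytorch
[conda] torchaudio 0.12.1 py310_cu113 pytorch
[conda] torchvision 0.13.1 py310_cu113 pytorch
"""

print(find_cpu_only_builds(sample))
```

The same function can be fed the full output of `conda list` instead of the sample string.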

The system information is as follows:

Static hostname: debian

Transient hostname: megh-gpu-2-v100s-16-vcpus

Icon name: computer-vm

Chassis: vm

Machine ID: 52d373a32c64a329a0d690441b0f70a2

Boot ID: 844478b6a51748d98fa0725631603484

Virtualization: kvm

Operating System: Debian GNU/Linux 11 (bullseye)

Kernel: Linux 5.10.0-21-cloud-amd64

Architecture: x86-64

My guess is that the issue is caused by a version mismatch, but I am not sure why that would be a problem: the system has CUDA 12.1 installed, and as far as I know it is backwards compatible.
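To narrow down a mismatch like this, it may help to ask PyTorch which CUDA version it was built against, independently of what the driver and `nvcc` report. Below is a minimal sketch. `describe_torch_build` is a hypothetical helper name, and the fallback for a missing `torch` is only there so the snippet runs in any environment.

```python
def describe_torch_build() -> dict:
    """Collect the CUDA-related facts that PyTorch reports about itself."""
    try:
        import torch
    except ImportError:
        # torch is not importable in this environment.
        return {
            "installed": False,
            "version": None,
            "built_with_cuda": None,
            "cuda_available": False,
        }
    return {
        "installed": True,
        "version": torch.__version__,
        # torch.version.cuda is None when the build has no CUDA support.
        "built_with_cuda": torch.version.cuda,
        # False if the build lacks CUDA or no usable GPU/driver is found.
        "cuda_available": torch.cuda.is_available(),
    }


print(describe_torch_build())
```

If `built_with_cuda` comes back as `None`, the installed PyTorch binary itself has no CUDA support, regardless of which CUDA toolkit or driver is on the system.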

I would appreciate any help with this. Thanks!