I am using the following code from the tutorial "PyTorch Profiler" (PyTorch Tutorials 2.2.0+cu121 documentation):

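```python
import gc

import torch
import torchvision.models as models
from torch.profiler import profile, ProfilerActivity

# Free cached GPU memory before profiling
torch.cuda.empty_cache()
gc.collect()

model = models.resnet18().cuda()
# nn.Module has no .device attribute, so check a parameter instead
print(next(model.parameters()).device)

inputs = torch.randn(5, 3, 224, 224).cuda()

with profile(activities=[ProfilerActivity.CPU, ProfilerActivity.CUDA]) as prof:
    model(inputs)

prof.export_chrome_trace("trace.json")
```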

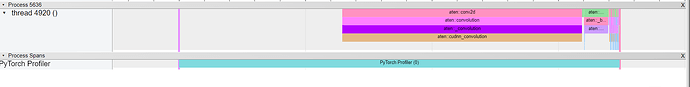

Yet the Chrome trace only contains CPU events and no GPU events, even though `.cuda()` seems to work properly.

Also, my `conv2d` and `convolution` operations are labeled as `cpu_op`. Shouldn't they run on the GPU?
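To check what the exported file really contains, independent of the trace viewer, a rough sketch like the one below could count events per category. It assumes the file follows the Chrome trace layout, with a top-level `traceEvents` list and a `cat` field on each event. The `kernel` category and the `hypothetical_gpu_kernel` name in the sample are my assumptions about what GPU activity would look like, not values taken from my trace, and `count_event_categories` and `load_trace` are illustrative helper names.

```python
import json
from collections import Counter


def count_event_categories(trace):
    """Count trace events per category (the "cat" field of each event)."""
    # Accept either {"traceEvents": [...]} or a bare list of events.
    events = trace.get("traceEvents", []) if isinstance(trace, dict) else trace
    return Counter(ev.get("cat", "<none>") for ev in events if isinstance(ev, dict))


def load_trace(path):
    """Load an exported trace file, e.g. load_trace("trace.json")."""
    with open(path) as f:
        return json.load(f)


# Tiny hand-written sample standing in for trace.json (not real profiler output).
sample = {
    "traceEvents": [
        {"name": "aten::conv2d", "cat": "cpu_op", "ph": "X"},
        {"name": "aten::convolution", "cat": "cpu_op", "ph": "X"},
        {"name": "hypothetical_gpu_kernel", "cat": "kernel", "ph": "X"},
    ]
}
counts = count_event_categories(sample)
print(counts)
```

Running `count_event_categories(load_trace("trace.json"))` on the real file should show whether any category other than `cpu_op` was recorded at all.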

Can anyone explain why my output is different? I would expect the GPU trace to be there.

Thank you!