Hi,

My system has 8× RTX 2080 Ti GPUs. They use the Turing architecture, so I have to use ncu instead of nvprof.

When I run PyTorch under ncu with metrics on a single GPU, it profiles exactly the kernels I want. But when I run on multiple GPUs, which presumably calls ncclAllReduce, ncu cannot profile it and the run stops before the PyTorch ImageNet training starts.

Why can't ncu profile the ImageNet example on multiple GPUs? Or could you recommend another profiler?

I would like to know how to profile this with ncu or nvprof.

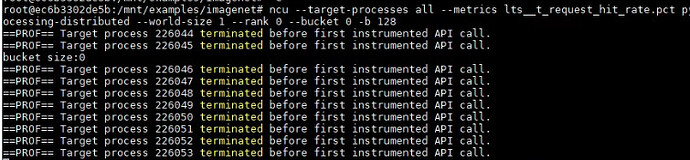

Below figure are screen shot about stopped imagenet in multi-GPU.

Thanks