I'm following this example from the `torch.Tensor.repeat` documentation:

```
In [42]: x = torch.tensor([1,2,3])

In [45]: x.repeat(4,2)
Out[45]:
tensor([[1, 2, 3, 1, 2, 3],
        [1, 2, 3, 1, 2, 3],
        [1, 2, 3, 1, 2, 3],
        [1, 2, 3, 1, 2, 3]])

In [46]: x.repeat(4,2).shape
Out[46]: torch.Size([4, 6])
```

So far, so good.
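The shapes above can be checked with a small pure-Python sketch of what I understand the shape rule to be, assuming `repeat` first treats the tensor as if it had enough leading size-1 dimensions to match the number of repeat counts, then multiplies each dimension by its count. `repeat_shape` is a hypothetical helper written for illustration, not a PyTorch API:

```python
def repeat_shape(shape, sizes):
    """Sketch of the output shape of Tensor.repeat(*sizes) for an input of `shape`.

    Assumption: missing leading dimensions are treated as size 1.
    """
    if len(sizes) < len(shape):
        # PyTorch also rejects fewer repeat counts than tensor dimensions.
        raise ValueError("need at least as many repeat sizes as tensor dims")
    # Pad the shape on the left with 1s: [3] -> [1, 3] or [1, 1, 3]
    padded = [1] * (len(sizes) - len(shape)) + list(shape)
    # Multiply each (padded) dimension by its repeat count
    return [dim * times for dim, times in zip(padded, sizes)]


print(repeat_shape([3], (4, 2)))     # [4, 6]
print(repeat_shape([3], (4, 2, 1)))  # [4, 2, 3]
```

Under that assumption, `x.repeat(4, 2)` sees `x` as shape `[1, 3]` and gives `[4, 6]`, while the trailing `1` in `x.repeat(4, 2, 1)` multiplies the existing size-3 dimension by one, which leaves it at 3.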

But why does repeating only once along the 3rd dimension give that dimension a size of 3 (not 1)?

From the docs:

```
>>> x.repeat(4, 2, 1).size()
torch.Size([4, 2, 3])
```

Double-checking:

```
In [43]: x.repeat(4,2,1)
Out[43]:
tensor([[[1, 2, 3],
         [1, 2, 3]],

        [[1, 2, 3],
         [1, 2, 3]],

        [[1, 2, 3],
         [1, 2, 3]],

        [[1, 2, 3],
         [1, 2, 3]]])
```

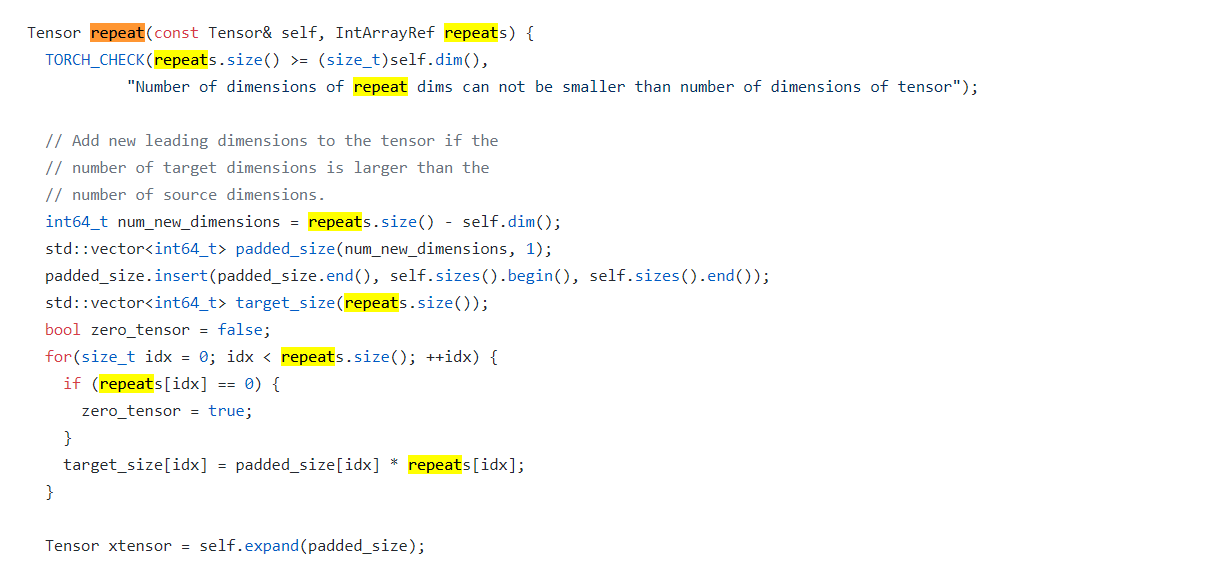

Why does it behave this way?
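To see the values and not just the shape, here is a rough emulation on nested Python lists, again assuming the leading-size-1 padding rule. `repeat_nested` and `shape_of` are hypothetical helpers written for illustration (not PyTorch functions); if the assumption holds, the result should match the `Out[43]` transcript above:

```python
def shape_of(data):
    """Shape of a regular nested list, e.g. [1, 2, 3] -> [3]."""
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        data = data[0] if data else None
    return shape


def _tile(data, sizes):
    # No counts left: we are at a scalar element
    if not sizes:
        return data
    # Tile the inner dimensions first, then concatenate sizes[0] copies
    # along this dimension (copies share references; fine for reading)
    inner = [_tile(item, sizes[1:]) for item in data]
    return inner * sizes[0]


def repeat_nested(data, *sizes):
    ndim = len(shape_of(data))
    if len(sizes) < ndim:
        raise ValueError("need at least as many repeat sizes as dims")
    # Assumed rule: add leading size-1 dims, e.g. [1, 2, 3] -> [[[1, 2, 3]]]
    for _ in range(len(sizes) - ndim):
        data = [data]
    return _tile(data, sizes)


out = repeat_nested([1, 2, 3], 4, 2, 1)
print(shape_of(out))  # [4, 2, 3]
print(out[0])         # [[1, 2, 3], [1, 2, 3]]
```

In this sketch `[1, 2, 3]` becomes `[[[1, 2, 3]]]` (shape `[1, 1, 3]`) before tiling, so the counts `(4, 2, 1)` act on sizes `(1, 1, 3)` and the last dimension keeps its original length of 3.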