I read about the RNN module in PyTorch:

RNN — PyTorch 2.1 documentation.

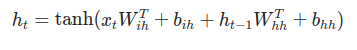

According to the documentation, the RNN computes the following function:
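For reference, the update rule stated on that documentation page, for an Elman RNN with the default `tanh` nonlinearity, is:

$$
h_t = \tanh\left(x_t W_{ih}^{T} + b_{ih} + h_{t-1} W_{hh}^{T} + b_{hh}\right)
$$

Here $h_t$ is the hidden state at time $t$, $x_t$ is the input at time $t$, and $h_{t-1}$ is the previous hidden state (or the initial hidden state at the first step).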

I looked at another RNN example (from the PyTorch tutorial):

NLP From Scratch: Classifying Names with a Character-Level RNN — PyTorch Tutorials 2.2.0+cu121 documentation.

There, the RNN is implemented as:

    import torch
    import torch.nn as nn

    class RNN(nn.Module):
        def __init__(self, input_size, hidden_size, output_size):
            super(RNN, self).__init__()

            self.hidden_size = hidden_size

            # Both layers take the concatenation [input, hidden] as their input.
            self.i2h = nn.Linear(input_size + hidden_size, hidden_size)
            self.i2o = nn.Linear(input_size + hidden_size, output_size)
            self.softmax = nn.LogSoftmax(dim=1)

        def forward(self, input, hidden):
            combined = torch.cat((input, hidden), 1)
            hidden = self.i2h(combined)   # next hidden state (no tanh applied)
            output = self.i2o(combined)   # class scores
            output = self.softmax(output) # log-probabilities over classes
            return output, hidden

        def initHidden(self):
            return torch.zeros(1, self.hidden_size)

    # n_letters and n_categories are defined earlier in the tutorial.
    n_hidden = 128
    rnn = RNN(n_letters, n_hidden, n_categories)

- Why is the implemented function different from the equation? (The equation does not contain a softmax, and it does contain bias terms that do not appear explicitly in the code.)

- Why doesn't the code use tanh as shown in the equation?
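To make the comparison concrete, here is a minimal sketch (my own, not taken from the tutorial or the documentation) that checks how the tutorial's `i2h` layer relates to the documented formula. The sizes and the variable names `W_ih`, `W_hh`, `same_preactivation`, and `matches_rnncell` are illustrative assumptions. It relies on `nn.Linear` having `bias=True` by default, and on `nn.RNNCell` using `tanh` by default.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
input_size, hidden_size = 5, 3  # arbitrary illustrative sizes

# Same kind of layer as i2h in the tutorial; nn.Linear includes a bias by default.
i2h = nn.Linear(input_size + hidden_size, hidden_size)

x = torch.randn(1, input_size)
h = torch.randn(1, hidden_size)

# Tutorial form: one linear layer applied to the concatenation [x, h].
combined_out = i2h(torch.cat((x, h), 1))

# Documentation form without tanh: split the single weight into W_ih and W_hh.
W_ih = i2h.weight[:, :input_size]
W_hh = i2h.weight[:, input_size:]
split_out = x @ W_ih.T + h @ W_hh.T + i2h.bias

same_preactivation = torch.allclose(combined_out, split_out, atol=1e-6)

# nn.RNNCell applies tanh to this pre-activation, so with the weights copied
# over (and the second bias zeroed) it should equal tanh(combined_out).
cell = nn.RNNCell(input_size, hidden_size)
with torch.no_grad():
    cell.weight_ih.copy_(W_ih)
    cell.weight_hh.copy_(W_hh)
    cell.bias_ih.copy_(i2h.bias)
    cell.bias_hh.zero_()
matches_rnncell = torch.allclose(cell(x, h), torch.tanh(combined_out), atol=1e-6)

print(same_preactivation, matches_rnncell)
```

If both checks pass, the bias is present in the tutorial code (inside `nn.Linear`), and the remaining difference in the hidden-state update is the missing `tanh`. The sketch does not explain why the tutorial leaves `tanh` out; it only isolates that difference.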