Hi,

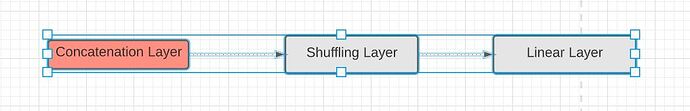

I am trying to concatenate the activations from n clients before passing them to my host-DNN model. How can I build a shuffling layer after the concatenation layer in my host-DNN model so that it shuffles the activations coming from the clients?

Also, when the gradients come back from the host-DNN, they should be unshuffled before being passed back.

Should we also implement a backward function for the shuffle layer, or will autograd take care of it?

@ptrblck: Apologies for tagging you on this issue, but I found your amazing answers on the forum and couldn’t help myself. Can you please give me some pointers?

I’m unsure what the ShufflingLayer would exactly do, but assuming you would permute the input activations, you could most likely write a custom nn.Module and apply e.g.:

    def forward(self, x):
        x = x[:, torch.randperm(x.size(1))]
        return x

to shuffle the data in dim1.
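As a rough, self-contained sketch of that idea (the class name `ShuffleLayer`, the attribute `last_perm`, the three clients, and all shapes are made up for illustration), the snippet below wraps the indexing in an `nn.Module` and checks the gradients. Indexing with a permutation is differentiable, so autograd routes each gradient back to the original, unshuffled position and no custom backward function should be needed:

```python
import torch
import torch.nn as nn


class ShuffleLayer(nn.Module):
    """Hypothetical layer: randomly permutes the features in dim 1."""

    def forward(self, x):
        # Draw a fresh permutation on the same device as the input.
        perm = torch.randperm(x.size(1), device=x.device)
        # Stored only for inspection; autograd does not need it.
        self.last_perm = perm
        return x[:, perm]


# Assumed setup: 3 clients, each sending a batch of 2 samples with 4 features.
client_acts = [torch.randn(2, 4, requires_grad=True) for _ in range(3)]
concat = torch.cat(client_acts, dim=1)  # shape (2, 12)

layer = ShuffleLayer()
shuffled = layer(concat)

# Give every shuffled column a distinct weight so its gradient is identifiable.
weights = torch.arange(1.0, 13.0)
(shuffled * weights).sum().backward()

# Each client receives its gradient in its own, unshuffled layout.
grad_concat = torch.cat([a.grad for a in client_acts], dim=1)
```

Here `grad_concat[:, layer.last_perm]` matches `weights` in every row, i.e. the gradient of shuffled column `j` ends up at original column `last_perm[j]`.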

Thanks @ptrblck. In my scenario, the host-DNN gets activations from different clients, and at the very first layer the activation vectors from the different clients are concatenated (stacked together). The second layer shuffles the activations. This shuffling should be done with respect to the labels received from the clients.
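One possible reading of this, shown only as a sketch: the clients' activations are stacked along the batch dimension, and the same permutation is applied to the stacked labels so each row keeps its label. The client count, shapes, and all variable names below are assumptions. If the gradients are sent back to the clients by hand rather than through autograd, the inverse permutation from `torch.argsort` restores the original order:

```python
import torch

# Assumed setup: 3 clients, each sending 2 samples (4 features) plus labels.
acts = [torch.randn(2, 4) for _ in range(3)]
labels = [torch.randint(0, 10, (2,)) for _ in range(3)]

x = torch.cat(acts, dim=0)    # stacked activations, shape (6, 4)
y = torch.cat(labels, dim=0)  # stacked labels, shape (6,)

# One permutation of the rows, applied to activations and labels alike.
perm = torch.randperm(x.size(0))
x_shuf, y_shuf = x[perm], y[perm]

# Inverse permutation: indexing with it undoes the shuffle.
inv_perm = torch.argsort(perm)

# Stand-in for the gradient the host-DNN returns for the shuffled input.
grad_shuf = torch.randn_like(x_shuf)
grad_unshuf = grad_shuf[inv_perm]

# Split the unshuffled gradient back into one chunk per client.
per_client = torch.split(grad_unshuf, 2, dim=0)
```

If the shuffle happens inside the autograd graph, as in a custom `nn.Module`, the manual `inv_perm` step is not needed because the backward pass already undoes the indexing.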