Hi,

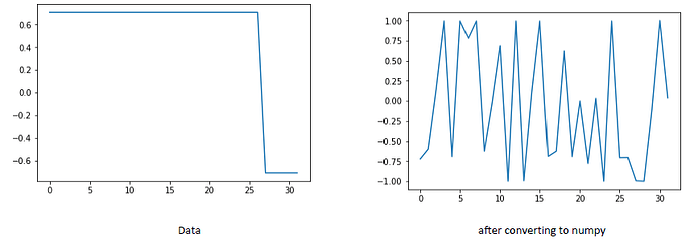

I am using a simple training loop for a regression task. To check that the regression ground-truth values inside the training loop match what I expect, I decided to plot each batch of data.

However, when I convert a tensor from the data loader to a NumPy array and plot it, the plot looks distorted. I am using `myTensor.data.cpu().numpy()` for the conversion.

My code is below:

    from matplotlib import pyplot
    from torch.utils.data import DataLoader, TensorDataset

    train_ds = TensorDataset(x_train, y_train)
    train_dl = DataLoader(train_ds, batch_size=32, shuffle=True, num_workers=0, drop_last=True)

    for epoch in range(epochs):
        model.train()
        for i, (x, y) in enumerate(train_dl):
            x = x.cuda()
            y = y.cuda()

            # Convert the target batch to NumPy and plot its first column
            yy = y.data.cpu().numpy()
            pyplot.plot(yy[0:32, 0])
            pyplot.show()
            ...
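
To separate the conversion from the data loader, a minimal CPU-only sketch along these lines could be run. The synthetic sine-wave targets, the tensor shapes, and the helper `first_batch_targets` are assumptions for illustration, not my real data or code. It checks whether the NumPy conversion changes any values, and whether a batch from a loader with `shuffle = True` arrives in the same order as the original data.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in data (assumption): 64 samples, 3 features, 1 target column.
torch.manual_seed(0)
x_train = torch.randn(64, 3)
y_train = torch.sin(torch.linspace(0, 6.28, 64)).unsqueeze(1)

train_ds = TensorDataset(x_train, y_train)


def first_batch_targets(shuffle):
    """Return the targets of the first batch as a NumPy array (hypothetical helper)."""
    dl = DataLoader(train_ds, batch_size=32, shuffle=shuffle,
                    num_workers=0, drop_last=True)
    _, y = next(iter(dl))
    return y.detach().cpu().numpy()


ordered = first_batch_targets(shuffle=False)
shuffled = first_batch_targets(shuffle=True)

# Without shuffling, the first batch should equal the first 32 source rows,
# so any difference here would point at the conversion.
conversion_ok = np.array_equal(ordered, y_train[:32].numpy())

# With shuffling, every value should still come from the dataset,
# but the order within the batch is random.
all_targets = y_train.numpy()[:, 0]
values_from_dataset = bool(np.isin(shuffled[:, 0], all_targets).all())
same_order = np.array_equal(ordered, shuffled)

print("conversion preserves values:", conversion_ok)
print("shuffled values all come from the dataset:", values_from_dataset)
print("shuffled batch keeps original order:", same_order)
```

If the first two checks pass and the last one does not, the values themselves are intact and only their order within the batch differs from the original data.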