Hi,

I’m using PyTorch (the latest build available for Jetson AGX Xavier with JetPack 5.1: https://docs.nvidia.com/frameworks/install-pytorch-jetson-platform/index.html) and torchvision installed via pip (`python3 -m pip install torchvision`) on a Jetson AGX Xavier Developer Kit (32GB).

I’m getting an error saying that these two packages are incompatible.

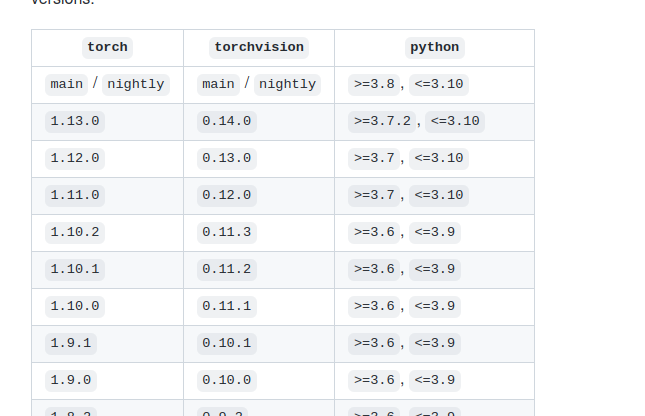

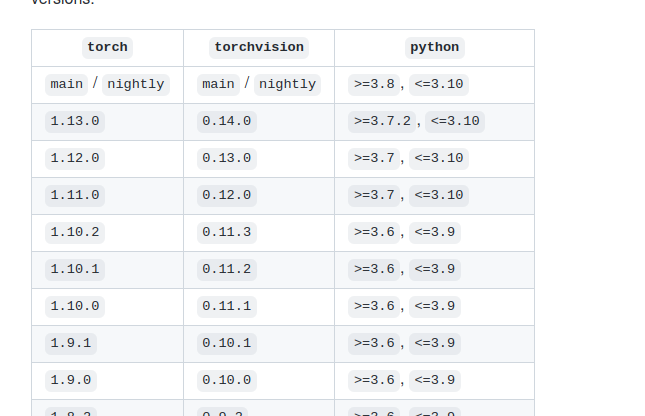

According to the torchvision compatibility chart:

If I build both packages from the main branch of their GitHub repos on my platform, they should work together, right?

I’m also concerned that if I do this, performance won’t be as good as with the NVIDIA-provided PyTorch package.

Any feedback is appreciated.

Yes, you can build PyTorch and torchvision from source and both should be compatible. Could you post the error message you are seeing?

Sure, here goes:

RuntimeError: Couldn’t load custom C++ ops. This can happen if your PyTorch and torchvision versions are incompatible, or if you had errors while compiling torchvision from source. For further information on the compatible versions, check [GitHub - pytorch/vision: Datasets, Transforms and Models specific to Computer Vision ](https://github.com/pytorch/vision#installation) for the compatibility matrix. Please check your PyTorch version with `torch.__version__` and your torchvision version with `torchvision.__version__` and verify if they are compatible, and if not please reinstall torchvision so that it matches your PyTorch install.
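The error message asks for a comparison of `torch.__version__` and `torchvision.__version__`. A minimal sketch of that check follows. The `COMPAT` table is a partial, hand-copied subset of the compatibility matrix in the torchvision README and should be verified against the current README; the helper names `major_minor` and `check_pair` are hypothetical. A build that reports a 1.14 pre-release version has no row in the table (upstream PyTorch went from 1.13 to 2.0), so the sketch reports it as unmatched instead of guessing a torchvision release.

```python
import re
from typing import Tuple

# Partial subset of the torch -> torchvision rows from the compatibility
# matrix in the torchvision README (copied by hand; verify before relying on it).
COMPAT = {
    (1, 10): (0, 11),
    (1, 11): (0, 12),
    (1, 12): (0, 13),
    (1, 13): (0, 14),
    (2, 0): (0, 15),
}


def major_minor(version: str) -> Tuple[int, int]:
    """Return (major, minor) from strings such as '1.13.1' or '1.14.0a0+abc.nv23.01'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def check_pair(torch_version: str, torchvision_version: str) -> str:
    """Compare a torch/torchvision pair against the partial COMPAT table."""
    torch_mm = major_minor(torch_version)
    vision_mm = major_minor(torchvision_version)
    expected = COMPAT.get(torch_mm)
    if expected is None:
        # Pre-release or unlisted builds have no published torchvision to match.
        return "torch %d.%d is not in the table; build torchvision from source against it" % torch_mm
    if vision_mm == expected:
        return "compatible"
    return "mismatch: torch %d.%d expects torchvision %d.%d" % (torch_mm + expected)


if __name__ == "__main__":
    try:
        import torch
        import torchvision
    except (ImportError, OSError, RuntimeError) as exc:
        print(f"import failed: {exc}")
    else:
        print("torch", torch.__version__, "torchvision", torchvision.__version__)
        print(check_pair(torch.__version__, torchvision.__version__))
```

Running the file directly prints both installed versions and the verdict for the current environment.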

The PyTorch version is 1.14, the latest one for Jetson AGX Xavier. Torchvision versions I tried so far are:

I haven’t gone as far as building the libraries from source, because I believe that could give worse performance than the NVIDIA-provided package, but I will try it eventually if nothing else works.

I also looked into the custom C++ ops angle and it seems like a possible cause, but I’m not doing anything out of the ordinary: I’m using YOLOv5, so there should be no problems there.

The error is always the same.
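On the C++ ops question: the `RuntimeError` above is raised when torchvision needs its compiled extension and could not load it, and operators such as `torchvision.ops.nms` depend on that extension. YOLOv5's post-processing is assumed here to call `torchvision.ops.nms`, which would explain why an ordinary YOLOv5 pipeline hits this error without any custom code. The hypothetical helper below reproduces the check without YOLOv5 by running NMS on two overlapping boxes.

```python
def torchvision_ops_status() -> str:
    """Call torchvision's compiled NMS op on a tiny input and report the outcome."""
    try:
        import torch
        import torchvision
    except (ImportError, OSError, RuntimeError) as exc:
        return f"import failed: {exc}"
    # Two heavily overlapping boxes in (x1, y1, x2, y2) format, with scores.
    boxes = torch.tensor([[0.0, 0.0, 10.0, 10.0], [1.0, 1.0, 11.0, 11.0]])
    scores = torch.tensor([0.9, 0.8])
    try:
        keep = torchvision.ops.nms(boxes, scores, iou_threshold=0.5)
    except RuntimeError as exc:
        # Raised when torchvision's C++ extension could not be loaded.
        return f"custom ops unavailable: {exc}"
    return f"ok, kept indices {keep.tolist()}"


if __name__ == "__main__":
    print(torchvision_ops_status())
```

If this prints the "custom ops unavailable" message, the problem lies in the torch/torchvision pairing rather than in the YOLOv5 code.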

This shouldn’t be the case, as we upstream our improvements where possible.
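One way to test the performance concern directly is to time the same workload under the NVIDIA wheel and under a source build. The sketch below is a generic timing helper (`median_runtime_ms` is a hypothetical name). The 1024x1024 matrix multiply in the `__main__` block is an arbitrary stand-in workload; a YOLOv5 inference call would be more representative. `torch.cuda.synchronize` is passed in so that asynchronous GPU work is included in each measurement.

```python
import statistics
import time
from typing import Callable, Optional


def median_runtime_ms(fn: Callable[[], object], warmup: int = 5, iters: int = 20,
                      sync: Optional[Callable[[], None]] = None) -> float:
    """Median wall-clock time of fn() in milliseconds, measured after warm-up runs."""
    for _ in range(warmup):
        fn()
    if sync is not None:
        sync()  # drain warm-up work before timing starts
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for queued GPU work so the sample covers it
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not installed")
    else:
        device = "cuda" if torch.cuda.is_available() else "cpu"
        a = torch.randn(1024, 1024, device=device)
        b = torch.randn(1024, 1024, device=device)
        sync = torch.cuda.synchronize if device == "cuda" else None
        ms = median_runtime_ms(lambda: a @ b, sync=sync)
        print(f"torch {torch.__version__} on {device}: {ms:.3f} ms per matmul")
```

Running the same script once per installation gives a like-for-like comparison on the same device.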

I’m a bit confused, since you previously suggested building from upstream/master: