I have a project that uses RoBERTa to build a multiclass text classification model. Before testing on my own laptop, I was using Google Colab, where training took only about 20-30 minutes. But since it's the free version, I've reached my usage limit.

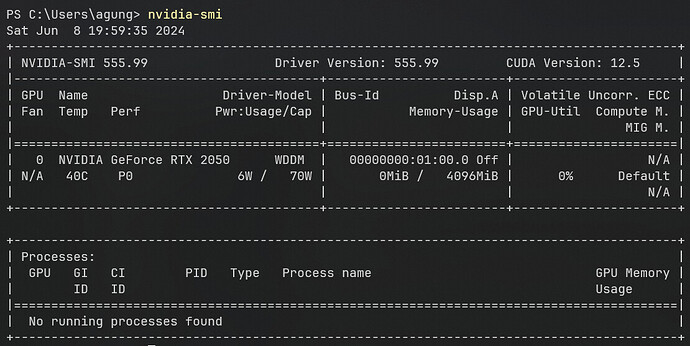

After that, I installed JupyterLab and PyTorch with CUDA 12.1 on my laptop. I didn't install the CUDA Toolkit from the NVIDIA website. When I ran it, it worked. But when I train my model, the estimated time is about 6-7 hours. Is that normal, or do I have some settings/configs wrong? To be honest, my laptop GPU isn't that good: Google Colab has an NVIDIA Tesla T4 and my laptop has an RTX 2050. Still, the difference is huge. I also tried training on my CPU instead of the GPU, and the estimate was about 12 hours.
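To rule out a setup problem first, a quick check is whether this PyTorch install actually sees the GPU. Below is a minimal sketch. The function name `describe_torch_env` is hypothetical, and it only reports what PyTorch detects; it does not check whether the model and batches are actually moved to the GPU in the training code. If `built_with_cuda` is `None` (CPU-only build) or `cuda_available` is `False`, training is running on the CPU.

```python
import importlib.util


def describe_torch_env():
    """Summarize what PyTorch can see; safe to call even if torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # None for CPU-only builds, e.g. "12.1" for a CUDA 12.1 build
        "built_with_cuda": torch.version.cuda,
        # True only if a usable NVIDIA GPU and driver are detected
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(0)
        info["device_name"] = props.name
        # Total GPU memory in GiB, rounded for readability
        info["total_memory_gb"] = round(props.total_memory / 1024**3, 1)
    return info


if __name__ == "__main__":
    for key, value in describe_torch_env().items():
        print(f"{key}: {value}")
```

Running this in the same JupyterLab kernel used for training makes sure it checks the same environment.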

Please let me know whether this is normal or something isn't right. I have prior experience with machine learning, but this is my first time working with NLP.