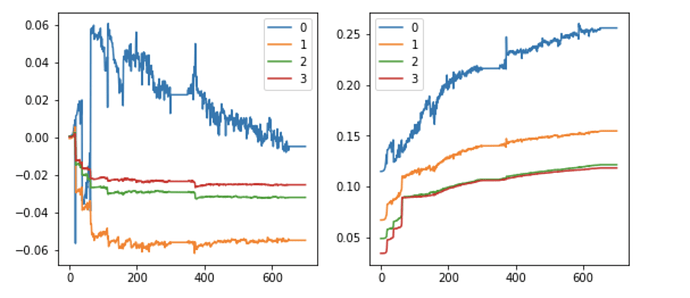

I was looking at how the means and standard deviations of a CNN's weights change as the model progresses, and I noticed that, by default, the standard deviations of the later layers are initialized much smaller than that of the first:

Left: the means; right: the standard deviations.

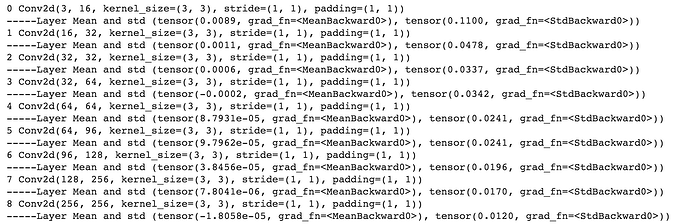

This was for a small 4-layer network. Even when I made a larger network, I observed the same thing: the later layers were initialized with much lower variance.

Usually the standard deviation of the second layer is half that of the first layer.

As you can see, the stds systematically decrease. Can someone explain why this is done? I know we want our outputs to have mean 0 and std 1, but how does this help with that?

Code:

Create any CNN in PyTorch and then run:

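```python
model = Conv()  # any CNN built from nn.Module layers

def stats(x):
    return x.mean().item(), x.std().item()

for i, e in enumerate(model.children()):
    print(i, e)
    # Layers such as ReLU or pooling have no weight, so skip them
    if getattr(e, 'weight', None) is not None:
        print('-----Layer Mean and std', stats(e.weight))
```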
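Because `Conv` itself isn't shown, here is a minimal sketch that assumes a hypothetical 4-layer CNN (my own stand-in, with channels 1→8→16→32→64 and 3×3 kernels) so the loop above can run end to end. It also prints each layer's fan-in (`in_channels * kernel_height * kernel_width`) next to the measured std. The `expected` column assumes PyTorch's default `Conv2d` initialization in recent versions, `kaiming_uniform_` with `a=sqrt(5)`, which draws from U(-1/sqrt(fan_in), 1/sqrt(fan_in)) and therefore has std 1/sqrt(3 * fan_in).

```python
import math

import torch
import torch.nn as nn

torch.manual_seed(0)  # make the random initialization reproducible


class Conv(nn.Module):
    """Hypothetical 4-layer CNN standing in for the Conv model in the question."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 8, kernel_size=3)
        self.conv2 = nn.Conv2d(8, 16, kernel_size=3)
        self.conv3 = nn.Conv2d(16, 32, kernel_size=3)
        self.conv4 = nn.Conv2d(32, 64, kernel_size=3)

    def forward(self, x):
        for layer in self.children():
            x = torch.relu(layer(x))
        return x


def stats(x):
    return x.mean().item(), x.std().item()


model = Conv()
measured_stds = []
for i, e in enumerate(model.children()):
    w = e.weight
    # For a Conv2d weight of shape (out, in, kh, kw): fan_in = in * kh * kw
    fan_in = w.shape[1] * w.shape[2] * w.shape[3]
    mean, std = stats(w)
    measured_stds.append(std)
    # Std of U(-1/sqrt(fan_in), 1/sqrt(fan_in)), assuming PyTorch's default init
    expected = 1 / math.sqrt(3 * fan_in)
    print(f"{i}: fan_in={fan_in:4d}  mean={mean:+.4f}  std={std:.4f}  expected~{expected:.4f}")
```

If the measured std tracks the `expected` column, the decrease is coming from each layer's growing fan-in rather than from the layer's position in the model.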

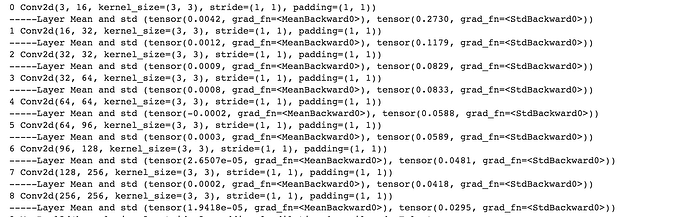

Update

Even when I manually initialize the layers, I get the same behavior!

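```python
from torch.nn import init

model = Conv()

for l in model.children():
    # Re-initialize only layers with a weight of >= 2 dims (e.g. Conv2d, Linear);
    # kaiming_normal_ needs at least 2 dimensions to compute fan_in
    w = getattr(l, 'weight', None)
    if w is not None and w.dim() >= 2:
        init.kaiming_normal_(w)
        if getattr(l, 'bias', None) is not None:
            init.zeros_(l.bias)

def stats(x):
    return x.mean().item(), x.std().item()

for i, e in enumerate(model.children()):
    print(i, e)
    if getattr(e, 'weight', None) is not None:
        print('-----Layer Mean and std', stats(e.weight))
```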

This is pretty unsettling! It looks as if PyTorch is keeping track of the layer index. Why is this happening?
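One way to test the "layer index" suspicion is a minimal sketch, assuming hypothetical Conv2d shapes (3×3 kernels, channels 1→8→16→32→64): build the same layers once in forward order and once in reverse order, re-initialize them with `init.kaiming_normal_` using its defaults (`a=0`, `mode='fan_in'`, `nonlinearity='leaky_relu'`), and compare each measured std with `sqrt(2 / fan_in)`, which is the std the PyTorch docs give for those defaults. The reversed model is only used to inspect weights; its channel counts don't chain, so it cannot run a forward pass.

```python
import math

import torch
import torch.nn as nn
from torch.nn import init

torch.manual_seed(0)  # reproducible initialization

# Hypothetical layer shapes: (in_channels, out_channels), all 3x3 kernels
shapes = [(1, 8), (8, 16), (16, 32), (32, 64)]

forward_model = nn.Sequential(*[nn.Conv2d(cin, cout, 3) for cin, cout in shapes])
# Same layers in reverse order; only for inspecting weights, not for forward()
reversed_model = nn.Sequential(*[nn.Conv2d(cin, cout, 3) for cin, cout in reversed(shapes)])


def report(model):
    """Kaiming-normal init each layer; return (index, fan_in, measured std, expected std)."""
    rows = []
    for i, layer in enumerate(model.children()):
        init.kaiming_normal_(layer.weight)  # defaults: a=0, mode='fan_in'
        init.zeros_(layer.bias)
        fan_in = layer.weight[0].numel()  # in_channels * kh * kw
        rows.append((i, fan_in, layer.weight.std().item(), math.sqrt(2 / fan_in)))
    return rows


results = {"forward": report(forward_model), "reversed": report(reversed_model)}
for name, rows in results.items():
    print(name)
    for i, fan_in, std, expected in rows:
        print(f"  layer {i}: fan_in={fan_in:4d}  std={std:.4f}  sqrt(2/fan_in)={expected:.4f}")
```

If the measured stds line up with `sqrt(2 / fan_in)` in both orderings, the pattern belongs to each layer's shape, not to where it sits in the model. Passing `mode='fan_out'` to `kaiming_normal_` would scale by `out_channels * kh * kw` instead.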