I have 6 classes of images: class A = 979 samples, class B = 1002 samples, class C = 322 samples, class D = 896 samples, class E = 1255 samples, and class F = 1517 samples. Here is my code:

```python
import torch
from torchvision import datasets, transforms, models
from torch import nn, optim
import torch.nn.functional as F
from torch.autograd import Variable
from torch.utils.data.sampler import SubsetRandomSampler
import numpy as np
from PIL import Image
# import cv2

train_on_gpu = torch.cuda.is_available()
if not train_on_gpu:
    print('CUDA is not available. Training on CPU ...')
else:
    print('CUDA is available. Training on GPU ...')

my_directory = 'UTinteraction_useful/train'

train_transform = transforms.Compose([transforms.ToTensor(),
                                      transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

train_data = datasets.ImageFolder(my_directory, transform=train_transform)

num_train = len(train_data)
indices = list(range(num_train))
np.random.shuffle(indices)
split = int(np.floor(0.2 * num_train))
train_idx, valid_idx = indices[split:], indices[:split]

train_sampler = SubsetRandomSampler(train_idx)
valid_sampler = SubsetRandomSampler(valid_idx)

batch_size_train = 16
batch_size_valid = 9

trainloader = torch.utils.data.DataLoader(train_data, batch_size=batch_size_train, sampler=train_sampler)
validloader = torch.utils.data.DataLoader(train_data, batch_size=batch_size_valid, sampler=valid_sampler)


class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, 3, padding=1)
        self.conv3 = nn.Conv2d(32, 64, 3, padding=1)
        self.pool = nn.MaxPool2d(2, 2)
        self.fc1 = nn.Linear(64*28*28, 500)
        self.fc2 = nn.Linear(500, 6)
        self.dropout = nn.Dropout(0.25)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = self.pool(F.relu(self.conv3(x)))
        x = x.view(-1, 64*28*28)
        x = self.dropout(x)
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return x


model = Network()
print(model)

# move model to GPU if CUDA is available
if train_on_gpu:
    model.cuda()

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

epochs = 2
valid_loss_min = np.Inf

for epoch in range(epochs):
    train_loss = 0.0
    valid_loss = 0.0

    model.train()
    for images, labels in trainloader:
        images, labels = Variable(images, requires_grad=True), Variable(labels)
        if train_on_gpu:
            images, labels = images.cuda(), labels.cuda()
        optimizer.zero_grad()
        output = model(images)
        loss = criterion(output, labels)
        loss.backward()
        optimizer.step()
        train_loss = loss.item()

    model.eval()
    for images, lables in validloader:
        images, labels = Variable(images, requires_grad=True), Variable(labels)
        print("Validataion")
        print('imags shape', images.shape)
        print('labels shape', labels.shape)
        print('labels', labels)
        if train_on_gpu:
            images, labels = images.cuda(), labels.cuda()
        output = model(images)
        print('output shape', output.shape)
        loss = criterion(output, labels)
        valid_loss = loss.item()

    train_loss = train_loss/len(trainloader)
    valid_loss = valid_loss/len(validloader)

    print(f'Epoch ({epoch+1}|{epochs}) Training Loss: {train_loss:.6f} Validation Loss: {valid_loss:.6f}')

    if valid_loss <= valid_loss_min:
        print(f'Validation Loss is decreased from {valid_loss_min:.6f} --> {valid_loss:.6f}. Model is saving...')
        torch.save(model.state_dict(), 'model.pt')
        valid_loss_min = valid_loss
```

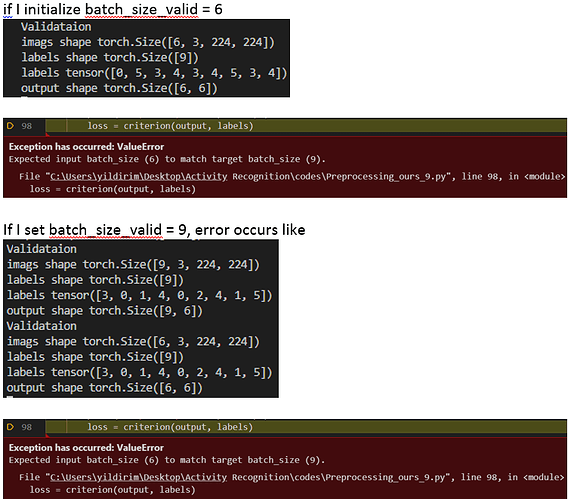

My code runs without errors during the training phase with batch_size_train. However, in the validation phase, even if I set batch_size_valid = 6, the labels tensor inside the validation loop still has shape 9 (labels.shape = 9), which does not match the batch of images. The figure shows the labels' shape and values, and the error raised at the loss computation.
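A possible lead, sketched as plain arithmetic: assuming the class counts listed at the top, the 80/20 split, and batch_size_train = 16 from the code, the last training batch would contain 9 samples, which is the same number that appears in labels.shape during validation. The variable names below are only illustrative, and this is a sanity check on sizes rather than a confirmed diagnosis.

```python
import math

# Class counts as listed at the top of the post.
class_counts = {"A": 979, "B": 1002, "C": 322, "D": 896, "E": 1255, "F": 1517}

num_total = sum(class_counts.values())

# Same split rule as in the code: floor(0.2 * number of samples) goes to validation.
num_valid = int(math.floor(0.2 * num_total))
num_train = num_total - num_valid

batch_size_train = 16

# Size of the final (incomplete) training batch produced by the DataLoader.
last_train_batch = num_train % batch_size_train

print("total samples:", num_total)
print("validation samples:", num_valid)
print("training samples:", num_train)
print("size of last training batch:", last_train_batch)
```

If the numbers line up this way, it may be worth checking whether the labels used in the validation loop really come from validloader, or are left over from the last training batch.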

Please help me. Thanks.