OK, so in the source “code” of the poisson distribution under icdf we find the following:

def icdf(self, value):

"""

Returns the inverse cumulative density/mass function evaluated at

`value`.

Args:

value (Tensor):

"""

raise NotImplementedError

So I tried to implement my own version, and indeed I have, except that it keeps killing the gradient.

In the following, x is an n by N tensor representing a sample of a random vector with n random variables and N samples. Lambda contains N identical column vectors of length n such that Lambda[i, any] is the mean of variable at i. The tensor x is uniformly distributed with mean 1/2 and variance 1/12.

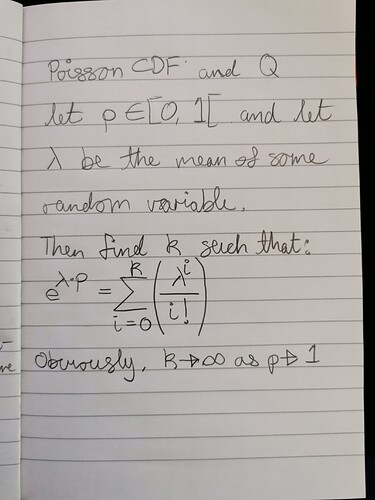

Consider the quantile function Q of the Poisson distribution and compare it to the Taylor-Maclaurin power series for the exponential function. Then for some probability p, k = Q(p).

We can replace factorial of the tensor with the gamma function, but torch has only the log Gamma for some reason, so we need to replace n! with torch.exp(torch.lgamma(n+1)).

So my code does this by incrementing tensor k by Heaviside of the difference between the left and right-hand sides:

k = torch.zeros(n, N)

Taylor = torch.pow(Lambda, k) / torch.exp(torch.lgamma(k + 1))

while torch.sum(torch.exp(Lambda) * x - Taylor) > 0:

k = k + torch.heaviside(torch.sum(torch.exp(Lambda) * x - Taylor))

Taylor = Taylor + torch.pow(Lambda, k) / torch.exp(torch.lgamma(k + 1))

But the grads disappear whenever I use the above procedure in a function.

Some help would be much appreciated! Scipy does have a such a feature, is it possible to use it on the gpu perhaps?

Sincerely,

// Asger Jon Vistisen