Hello,

I am also trying to reproduce the results of the dynamic quantization example from the PyTorch tutorial "(beta) Dynamic Quantization on BERT" (PyTorch Tutorials 2.1.1+cu121 documentation).

```python
import os

import torch
from transformers import BertForSequenceClassification, BertTokenizerFast

tokenizer = BertTokenizerFast.from_pretrained("bert-base-cased-finetuned-mrpc")
model = BertForSequenceClassification.from_pretrained("bert-base-cased-finetuned-mrpc")

# Random input batch: 32 sequences of 128 token ids each
batch_size = 32
sequence_length = 128
vocab_size = len(tokenizer.vocab)
token_ids = torch.randint(vocab_size, (batch_size, sequence_length))


def print_size_of_model(model):
    # Serialize the weights to a temporary file and report its size
    torch.save(model.state_dict(), "temp.p")
    print("Size (MB):", os.path.getsize("temp.p") / 1e6)
    os.remove("temp.p")


# Return a copy of the model in which every nn.Linear is replaced
# by a dynamically quantized version with int8 weights
quantized_model = torch.quantization.quantize_dynamic(
    model, {torch.nn.Linear}, dtype=torch.qint8
)
```

I then compare the memory footprint and performance of the baseline model and its quantized counterpart:

```python
print_size_of_model(model)
%timeit model(token_ids)
```

```
Size (MB): 433.3
2.16 s ± 30.3 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
```

```python
print_size_of_model(quantized_model)
%timeit quantized_model(token_ids)
```

```
Size (MB): 176.8
2.08 s ± 16.4 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
```

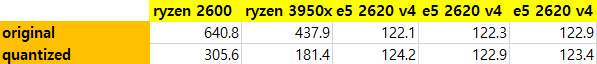

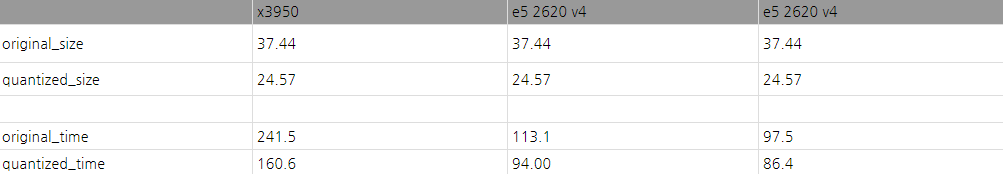
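Putting the two measurements above side by side makes the gap with the tutorial concrete. This small calculation only reuses the numbers printed by `print_size_of_model` and `%timeit`; the variable names are illustrative.

```python
# Measurements copied from the output above (MB and seconds per forward pass)
fp32_size_mb, int8_size_mb = 433.3, 176.8
fp32_time_s, int8_time_s = 2.16, 2.08

size_ratio = fp32_size_mb / int8_size_mb     # how many times smaller the state_dict is
time_saving = 1 - int8_time_s / fp32_time_s  # fraction of inference time saved

print(f"size: {size_ratio:.2f}x smaller")    # size: 2.45x smaller
print(f"time saved: {time_saving:.1%}")      # time saved: 3.7%
```

So the weights shrink by roughly 2.5x, while the latency improves by under 4%, far from the near-50% reduction reported in the tutorial.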

Quantization itself seems to work, judging by the much smaller memory footprint (433.3 MB down to 176.8 MB), but I cannot reproduce the speed-up highlighted in the tutorial (close to 50%): inference time only drops from 2.16 s to 2.08 s, about 4%.
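Besides the file size, another way to confirm that quantization took effect is to check that no float `nn.Linear` modules remain, since `quantize_dynamic` swaps each of them for a dynamically quantized counterpart (int8 weights, activations quantized on the fly at run time). A minimal sketch, assuming a hypothetical toy model so it runs without downloading BERT; `count_modules` is an illustrative helper, not a PyTorch function.

```python
import torch


def count_modules(module, cls):
    """Count submodules whose type is exactly `cls` (subclasses are not counted)."""
    return sum(1 for m in module.modules() if type(m) is cls)


# Hypothetical stand-in for BERT: two Linear layers around a ReLU
toy = torch.nn.Sequential(
    torch.nn.Linear(16, 32), torch.nn.ReLU(), torch.nn.Linear(32, 2)
).eval()
toy_q = torch.quantization.quantize_dynamic(toy, {torch.nn.Linear}, dtype=torch.qint8)

print(count_modules(toy, torch.nn.Linear), count_modules(toy_q, torch.nn.Linear))  # 2 0
print(toy_q)  # shows the dynamically quantized Linear modules
```

Run against the real models, `count_modules(quantized_model, torch.nn.Linear)` would be expected to return 0 while `count_modules(model, torch.nn.Linear)` stays unchanged, because `quantize_dynamic` works on a copy by default.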

I am running CentOS Linux release 7.9.2009 on an Intel(R) Xeon(R) CPU E5-2690 v3.

I am using transformers v4.3.2 with torch v1.7.0. I installed the CUDA 11.0 build of PyTorch, but in this example neither the tensors nor the models are moved to the GPU, so everything runs on the CPU.

I ran the same example on a laptop with an Intel Core i5-8350U CPU and observed a significant speed-up there. Could this be related to an issue with Haswell CPUs such as the E5-2690 v3?
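One way to narrow down the Haswell question is to check, on both machines, which quantized backend is selected and which CPU code path the build reports. On x86, dynamically quantized `Linear` layers are typically executed by the FBGEMM backend (the build flags below include `-DUSE_FBGEMM`); newer PyTorch releases may report the default engine as `x86` rather than `fbgemm`. As far as I know, both the E5-2690 v3 and the i5-8350U support AVX2 but not AVX-512, so the reported capability would be expected to match. A minimal sketch:

```python
import torch

# Quantized backends compiled into this build, and the one currently selected
supported = torch.backends.quantized.supported_engines
active = torch.backends.quantized.engine

# Pull the "CPU capability usage" line out of the build summary, if present
capability = [
    line.strip()
    for line in torch.__config__.show().splitlines()
    if "CPU capability" in line
]

print("supported engines:", supported)
print("active engine:", active)
print(capability)
```

If both machines print the same engine and capability, the difference is more likely to lie elsewhere (for example in threading) than in the instruction set.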

Detailed configuration:

```
ATen/Parallel:
    at::get_num_threads() : 6
    at::get_num_interop_threads() : 3
OpenMP 201511 (a.k.a. OpenMP 4.5)
    omp_get_max_threads() : 6
Intel(R) Math Kernel Library Version 2020.0.0 Product Build 20191122 for Intel(R) 64 architecture applications
    mkl_get_max_threads() : 6
Intel(R) MKL-DNN v1.6.0 (Git Hash 5ef631a030a6f73131c77892041042805a06064f)
std::thread::hardware_concurrency() : 6
Environment variables:
    OMP_NUM_THREADS : [not set]
    MKL_NUM_THREADS : [not set]
ATen parallel backend: OpenMP

PyTorch built with:
  - GCC 7.3
  - C++ Version: 201402
  - Intel(R) Math Kernel Library Version 2020.0.0 Product Build 20191122 for Intel(R) 64 architecture applications
  - Intel(R) MKL-DNN v1.6.0 (Git Hash 5ef631a030a6f73131c77892041042805a06064f)
  - OpenMP 201511 (a.k.a. OpenMP 4.5)
  - NNPACK is enabled
  - CPU capability usage: AVX2
  - CUDA Runtime 11.0
  - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80
  - CuDNN 8.0.4
  - Magma 2.5.2
  - Build settings: BLAS=MKL, BUILD_TYPE=Release, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DUSE_VULKAN_WRAPPER -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-variable -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Wno-stringop-overflow, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, USE_CUDA=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=ON, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON,
```
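The configuration shows `at::get_num_threads()` at 6 with `OMP_NUM_THREADS` and `MKL_NUM_THREADS` unset, so presumably both `%timeit` runs used six intra-op threads. The tutorial appears to time single-threaded inference (it calls `torch.set_num_threads(1)`), so one hedged experiment is to repeat the comparison at explicit thread counts. A minimal sketch: `time_inference` is a hypothetical helper, not a PyTorch API, and the two-layer toy model only stands in for BERT so the snippet runs without downloading weights.

```python
import time

import torch


def time_inference(model, inputs, num_threads, repeats=5):
    """Median wall-clock seconds per forward pass at a fixed intra-op thread count."""
    previous = torch.get_num_threads()
    torch.set_num_threads(num_threads)
    try:
        timings = []
        with torch.no_grad():
            model(inputs)  # warm-up run, excluded from timing
            for _ in range(repeats):
                start = time.perf_counter()
                model(inputs)
                timings.append(time.perf_counter() - start)
    finally:
        torch.set_num_threads(previous)  # restore the original setting
    timings.sort()
    return timings[len(timings) // 2]


# Hypothetical stand-in for one BERT feed-forward block (hidden size 768)
toy = torch.nn.Sequential(
    torch.nn.Linear(768, 3072), torch.nn.ReLU(), torch.nn.Linear(3072, 768)
).eval()
toy_q = torch.quantization.quantize_dynamic(toy, {torch.nn.Linear}, dtype=torch.qint8)
x = torch.randn(256, 768)

default_threads = torch.get_num_threads()
for n in sorted({1, default_threads}):
    fp32 = time_inference(toy, x, n)
    int8 = time_inference(toy_q, x, n)
    print(f"{n} thread(s): fp32 {fp32 * 1e3:.1f} ms, int8 {int8 * 1e3:.1f} ms")
```

On the real setup, `time_inference(model, token_ids, 1)` and `time_inference(quantized_model, token_ids, 1)` would give single-thread numbers that are closer in spirit to the tutorial's measurement.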