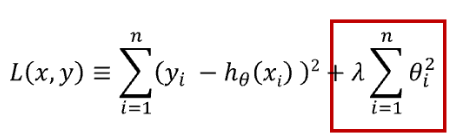

I understand this is the formula for L2 regularization:

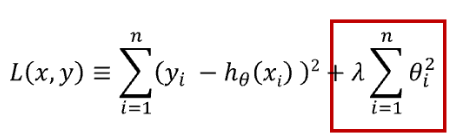

Does weight decay equal lambda in this equation?

Here lambda is the L2-regularization factor. With plain SGD it is proportional to weight decay (the two differ by a learning-rate factor), but for adaptive optimizers like Adam this is not the case.
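A short derivation of the SGD case follows. It assumes the penalty is written as $\frac{\lambda}{2}\lVert w\rVert_2^2$ (the formula in the question may differ by a constant factor, which would only rescale $\lambda$) and that $\eta$ is the learning rate:

$$
\begin{aligned}
\tilde{L}(w) &= L(w) + \tfrac{\lambda}{2}\lVert w\rVert_2^2 \\
\nabla \tilde{L}(w) &= \nabla L(w) + \lambda w \\
w_{t+1} &= w_t - \eta\bigl(\nabla L(w_t) + \lambda w_t\bigr) = (1 - \eta\lambda)\,w_t - \eta\,\nabla L(w_t)
\end{aligned}
$$

The last line is plain SGD on $L$ plus shrinking the weights by a factor $(1 - \eta\lambda)$, i.e. weight decay with rate $\eta\lambda$. With Adam, the penalty gradient $\lambda w$ is instead divided by the running $\sqrt{\hat v_t}$, so no fixed decay rate reproduces it.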

In short, weight decay shrinks the weights directly in the update step (subtracting a small fraction of each weight) instead of adding a penalty term to the loss.
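A minimal pure-Python check of the SGD case. The one-parameter toy loss L(w) = (w - 3)² and the penalty form (λ/2)·w² are assumptions for illustration; with `decay = lr * lam` the two update rules should agree up to floating-point rounding.

```python
# Toy check: SGD + L2 penalty vs. SGD + weight decay (one scalar weight).
# Assumptions: hypothetical loss L(w) = (w - 3)**2 and penalty (lam / 2) * w**2.


def grad_loss(w):
    """Gradient of the toy data loss L(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


def sgd_l2_step(w, lr, lam):
    """SGD on L(w) + (lam / 2) * w**2: the penalty adds lam * w to the gradient."""
    return w - lr * (grad_loss(w) + lam * w)


def sgd_weight_decay_step(w, lr, decay):
    """SGD on L(w) alone, plus shrinking the weight by a factor (1 - decay)."""
    return (1.0 - decay) * w - lr * grad_loss(w)


lr, lam = 0.1, 0.01
w_l2 = w_wd = 1.0
for _ in range(5):
    w_l2 = sgd_l2_step(w_l2, lr, lam)
    w_wd = sgd_weight_decay_step(w_wd, lr, decay=lr * lam)

print(w_l2, w_wd)  # equal up to floating-point rounding
```

This is just the derivation for SGD written as code: the decay rate that matches a given λ depends on the learning rate.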

Then how do I add L2 regularization when using Adam?

You don’t. L2 regularization does not work well with modern adaptive optimizers like Adam; weight decay is the way to go.
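A toy sketch of why, comparing Adam with an L2 penalty folded into the gradient against AdamW-style decoupled weight decay. The loss L(w) = (w - 3)², the function name `adam`, and its `l2`/`decay` parameters are hypothetical; the decay-then-step ordering follows the algorithm described for PyTorch's `AdamW`.

```python
import math

# Toy comparison: Adam + L2 penalty vs. decoupled (AdamW-style) weight decay.
# Assumptions: hypothetical loss L(w) = (w - 3)**2 and a single scalar weight.


def grad_loss(w):
    """Gradient of the toy data loss L(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


def adam(w, steps, lr=0.1, l2=0.0, decay=0.0,
         beta1=0.9, beta2=0.999, eps=1e-8):
    """Scalar Adam.

    l2:    coefficient of an L2 penalty added to the gradient (Adam + L2).
    decay: decoupled weight decay applied directly to w (AdamW-style).
    """
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad_loss(w) + l2 * w           # L2 term goes through Adam's scaling
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)        # bias-corrected first moment
        v_hat = v / (1 - beta2 ** t)        # bias-corrected second moment
        w = w - lr * decay * w              # decoupled decay bypasses the scaling
        w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


w_plain = adam(1.0, steps=1)
w_l2 = adam(1.0, steps=1, l2=0.1)
w_decay = adam(1.0, steps=1, decay=0.1)
print(w_plain, w_l2, w_decay)
```

On the first step m̂/√v̂ reduces to g/(|g| + ε), so the step has length of about `lr` no matter what λw adds to the gradient: the L2 term is essentially normalized away (`w_l2` is almost identical to `w_plain`), while decoupled decay still pulls `w` down by `lr * decay * w`. Over many steps the effect is less extreme but similar: the penalty gradient is divided by √v̂, so weights with a large gradient history are regularized less.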

But if you still want L2 regularization, just use `optim.Adam` and pass the `weight_decay` argument: PyTorch's Adam uses that argument as an L2 penalty (it is added to the gradient), which is exactly the issue `AdamW` solves by decoupling the decay. I may be wrong on this, as I have not followed the complete AdamW discussion on PyTorch.