Hello, everyone. I have built machine learning code that trains on the GPU. It runs, but not as fast as I expected, and I am not sure I have set it up correctly.

I read the input data as follows:

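```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Whitespace-separated input; raw string avoids an invalid-escape warning for "\s"
Dataset = pd.read_csv('./Inputs.txt', sep=r"\s+")

Target_Column = 9
X = Dataset.iloc[:, 0:Target_Column].values  # features: columns 0-8
Y = Dataset.iloc[:, Target_Column].values    # target: column 9

X_Train, X_Test, Y_Train, Y_Test = train_test_split(X, Y, test_size=0.3, random_state=0)

sc = StandardScaler()
X_Train = sc.fit_transform(X_Train)  # fit scaling statistics on the training split only
X_Test = sc.transform(X_Test)        # reuse those statistics for the test split
```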
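To check the preprocessing in isolation, the same steps can be run end to end on a tiny in-memory table instead of `./Inputs.txt`. This is a sketch under assumptions: the data is random and made up, the column names `c0` to `c9` are hypothetical, and the layout (nine feature columns followed by the target in column 9) only mirrors `Target_Column = 9`. The final cast to `float32` is optional here, since `torch.Tensor(...)` already produces `float32`; it just makes the dtype explicit.

```python
import io

import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Hypothetical stand-in for ./Inputs.txt: a header row plus 20 rows of
# 10 whitespace-separated columns (9 features, target in column index 9).
rng = np.random.default_rng(0)
values = rng.normal(size=(20, 10))
header = " ".join(f"c{i}" for i in range(10))
body = "\n".join(" ".join(f"{v:.5f}" for v in row) for row in values)
text = header + "\n" + body + "\n"

dataset = pd.read_csv(io.StringIO(text), sep=r"\s+")
target_column = 9
X = dataset.iloc[:, 0:target_column].values  # features: columns 0..8
Y = dataset.iloc[:, target_column].values    # target: column 9

X_train, X_test, Y_train, Y_test = train_test_split(
    X, Y, test_size=0.3, random_state=0
)

# Fit the scaler on the training split only, then apply the same
# statistics to the test split so no test information leaks into training.
sc = StandardScaler()
X_train = sc.fit_transform(X_train)
X_test = sc.transform(X_test)

# StandardScaler returns float64 here; cast to float32 explicitly.
X_train = X_train.astype(np.float32)
X_test = X_test.astype(np.float32)
print(X_train.shape, X_test.shape)  # (14, 9) (6, 9)
```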

Then I wrap the data in a DataLoader as follows:

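```python
import torch
from torch.utils.data import DataLoader, TensorDataset

X_Train = torch.Tensor(X_Train)  # NumPy array -> float32 tensor
Y_Train = torch.Tensor(Y_Train)

Train_Dataset = TensorDataset(X_Train, Y_Train)
Train_Dataloader = DataLoader(Train_Dataset, batch_size=Batch_Size, shuffle=True)
```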
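For reference, iterating a `DataLoader` built on a `TensorDataset` yields one mini-batch per step as a pair `(features, targets)`, and `shuffle=True` reshuffles the sample order each time the loader is iterated (i.e. every epoch). The sketch below uses hypothetical sizes (10 samples, 9 features, batch size 4) to show what each iteration actually hands back.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical sizes: 10 samples with 9 features each, batch size 4.
X_train = torch.randn(10, 9)
Y_train = torch.randn(10)

train_dataset = TensorDataset(X_train, Y_train)
train_loader = DataLoader(train_dataset, batch_size=4, shuffle=True)

# Each iteration yields one mini-batch: (features, targets).
# With 10 samples and batch_size=4 the batch sizes are 4, 4 and 2.
batch_sizes = []
for batch_idx, (x_batch, y_batch) in enumerate(train_loader):
    batch_sizes.append(x_batch.shape[0])
    assert x_batch.shape[1] == 9
    assert y_batch.shape == (x_batch.shape[0],)
print(batch_sizes)  # [4, 4, 2]
```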

Then, for each training iteration, I use the following code:

```python
import torch
from sklearn.utils import shuffle  # assumed source of shuffle(); the import is not shown in the post

for Epoch in range(Num_Epochs):
    # Shuffle just mixes up the dataset between epochs
    X_Train, Y_Train = shuffle(X_Train, Y_Train)

    # Mini-batch learning
    for batch_idx, samples in enumerate(Train_Dataloader):
        X_Train = X_Train.to(Device)
        Y_Train = Y_Train.to(Device)

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(X_Train)
        loss = criterion(outputs, torch.unsqueeze(Y_Train, dim=1))
        loss.backward()
        optimizer.step()

        # accumulate statistics
        Running_Loss += loss.item()

    if Epoch % 100 == 0:
        File.write("%.4f\t%.4f\n" % (Epoch, Running_Loss))

    if Epoch % 2 == 0:
        print('Epoch {}'.format(Epoch), "Loss: ", Running_Loss)

    Running_Loss = 0.0
```
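In the loop above, the `samples` yielded by `Train_Dataloader` are never used: every iteration sends the full `X_Train` through `net` (and calls `.to(Device)` on the whole dataset again), so each epoch performs `len(Train_Dataloader)` full-dataset passes instead of one pass split into mini-batches. That alone can make training much slower than expected. The extra `shuffle(X_Train, Y_Train)` is also redundant, because `shuffle=True` already reshuffles what the loader yields, and the `TensorDataset` keeps its own references to the original tensors regardless of how `X_Train` is reassigned. Below is a minimal sketch of a loop that trains on the batches the loader provides. The data, model, loss, optimizer, learning rate and batch size are all hypothetical placeholders, not the original `net`/`criterion`/`optimizer`. It also accumulates the loss on the device with `loss.detach()` and calls `.item()` once per epoch, since `.item()` on a CUDA tensor has to wait for the GPU to finish.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
torch.manual_seed(0)

# Hypothetical data: 1000 samples, 9 features, one regression target.
X_train = torch.randn(1000, 9)
Y_train = X_train.sum(dim=1) + 0.1 * torch.randn(1000)
train_loader = DataLoader(TensorDataset(X_train, Y_train), batch_size=64, shuffle=True)

# Hypothetical model, loss and optimizer.
net = nn.Sequential(nn.Linear(9, 32), nn.ReLU(), nn.Linear(32, 1)).to(device)
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(net.parameters(), lr=1e-2)

num_epochs = 20
epoch_losses = []
for epoch in range(num_epochs):
    running_loss = torch.zeros((), device=device)
    # No manual shuffle needed: shuffle=True reorders samples every epoch.
    for batch_idx, (x_batch, y_batch) in enumerate(train_loader):
        # Move only the current mini-batch to the device.
        x_batch = x_batch.to(device)
        y_batch = y_batch.to(device)

        optimizer.zero_grad()
        outputs = net(x_batch)                           # shape (batch, 1)
        loss = criterion(outputs, y_batch.unsqueeze(1))  # match target shape
        loss.backward()
        optimizer.step()

        running_loss += loss.detach()  # stays on the device, no sync per batch

    # One host-device sync per epoch; report the mean batch loss.
    epoch_losses.append(running_loss.item() / len(train_loader))
    if epoch % 2 == 0:
        print(f"Epoch {epoch} mean batch loss: {epoch_losses[-1]:.4f}")
```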

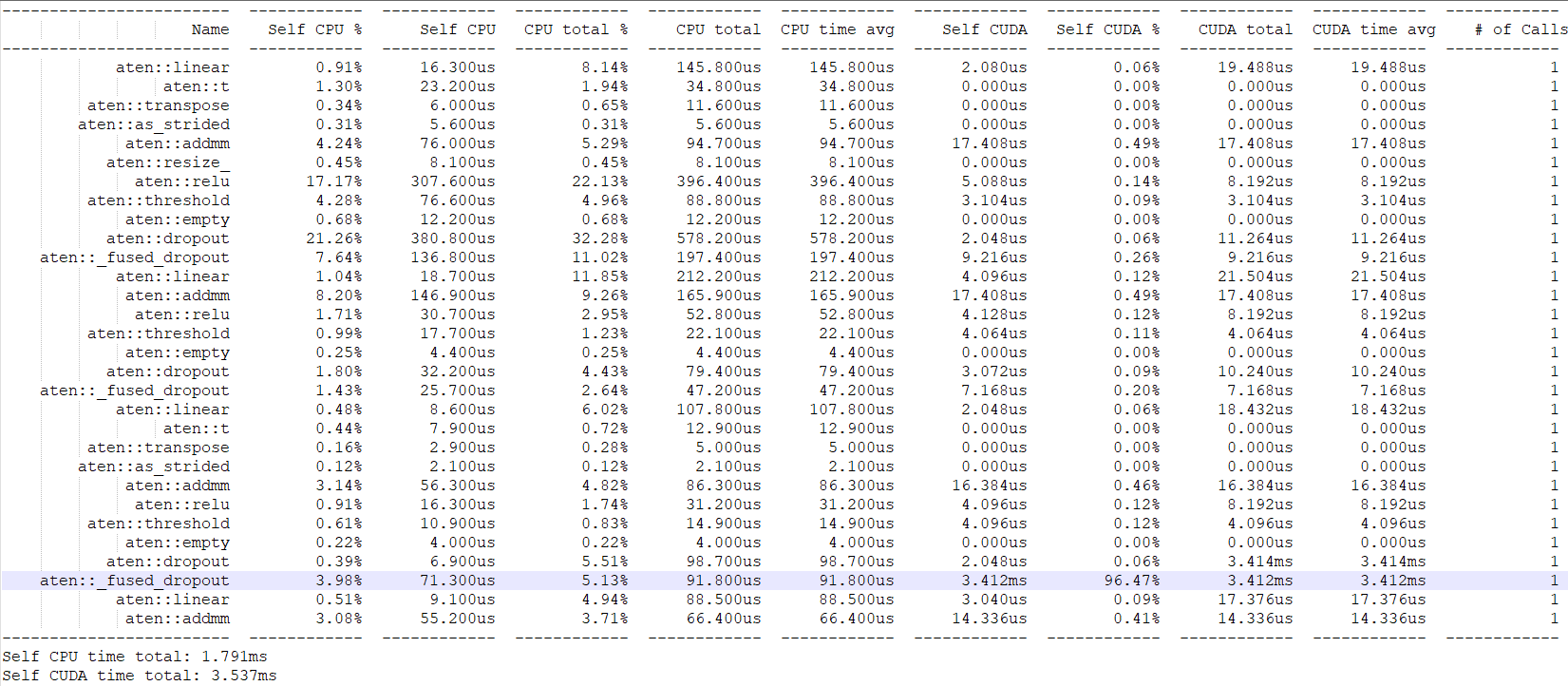

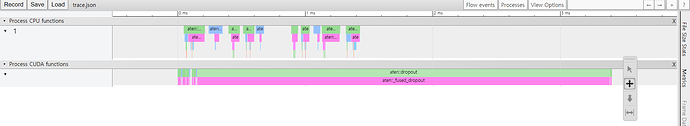

I have confirmed that the GPU is running at about 50% utilization, but training is still not as fast as I need. Is there anything wrong in my process?
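One possible contributor to partial GPU utilization with small tabular data (an assumption, not something the screenshots confirm) is that CPU-side work per batch, such as the Python loop, `DataLoader` collation and host-to-device copies, is large relative to the GPU work for a small model. A common workaround is to copy the whole training set to the GPU once and draw shuffled mini-batches there with `torch.randperm`. The helper `gpu_batches` below is hypothetical, a sketch of that idea rather than a PyTorch API; larger batch sizes are another lever worth trying.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def gpu_batches(x, y, batch_size):
    """Yield shuffled mini-batches from tensors that already live on one device.

    Draws one random permutation per call (i.e. per epoch) and slices it,
    so there are no per-batch host-to-device copies or collation steps.
    """
    n = x.shape[0]
    perm = torch.randperm(n, device=x.device)
    for start in range(0, n, batch_size):
        idx = perm[start:start + batch_size]
        yield x[idx], y[idx]


# Hypothetical data: copy the whole (small) training set to the device once.
X_train = torch.randn(10, 9).to(device)
Y_train = torch.randn(10).to(device)

sizes = [xb.shape[0] for xb, yb in gpu_batches(X_train, Y_train, batch_size=4)]
print(sizes)  # [4, 4, 2]
```

In a training loop, `for x_batch, y_batch in gpu_batches(X_train, Y_train, Batch_Size):` would take the place of iterating `Train_Dataloader`, with no `.to(device)` calls inside the loop.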

Thank you for your help.