I get the error ‘nan or inf for input tensor’ when I switch the optimizer from SGD to RMSprop. Why?

Could you post the error message, please?

Do you get this error immediately after changing the optimizer?

Is the output of your model NaN or Inf?
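A quick way to answer that is to check the tensors directly. This is only a minimal sketch: `report_nonfinite` is a hypothetical helper name, and the `output` tensor is made-up data standing in for your model output.

```python
import torch

def report_nonfinite(name, tensor):
    """Print a short report and return True if the tensor contains NaN or Inf."""
    n_nan = torch.isnan(tensor).sum().item()
    n_inf = torch.isinf(tensor).sum().item()
    if n_nan or n_inf:
        print(f"{name}: {n_nan} NaN, {n_inf} Inf values")
        return True
    return False

# Made-up tensor standing in for a model output.
output = torch.tensor([0.5, float("nan"), float("inf")])
has_bad_values = report_nonfinite("output", output)
```

Calling the same helper on the input batch, the model output, and the loss should show where the first invalid value appears.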

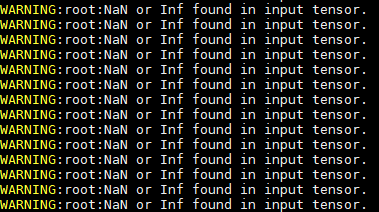

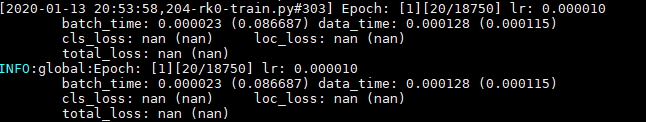

I only changed the optimizer, and then I get the error shown above.

The loss also becomes NaN.

Why?

Once the parameters become NaN, e.g. due to a high learning rate, your output will also be NaN.

Could you try to initialize the new optimizer with a smaller learning rate and rerun the code?
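Something along these lines, as a rough sketch: the `nn.Linear` model, the random data, and the value `1e-5` are placeholders I picked for illustration, not taken from your code.

```python
import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # hypothetical stand-in for the real model

# The default lr of torch.optim.RMSprop is 1e-2; start a few orders of magnitude lower.
optimizer = torch.optim.RMSprop(model.parameters(), lr=1e-5)

# Random placeholder batch and target.
x, target = torch.randn(8, 4), torch.randn(8, 2)
loss = nn.functional.mse_loss(model(x), target)

optimizer.zero_grad()
loss.backward()
optimizer.step()

# Verify that the parameters are still finite after the update.
params_finite = all(torch.isfinite(p).all().item() for p in model.parameters())
print(f"loss = {loss.item():.4f}, parameters finite: {params_finite}")
```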

Yes, I have tried 0.00001, but it doesn’t work.

I tried printing the gradients: they are sometimes very small (about 0) and sometimes very large (about 100000).
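For reference, this kind of check can be done per parameter after `backward()`. The snippet below is a minimal sketch with a hypothetical `grad_norms` helper and a small stand-in model, not the actual training code.

```python
import torch
import torch.nn as nn

def grad_norms(model):
    """Return {parameter name: L2 norm of its gradient} for parameters that have a grad."""
    return {
        name: p.grad.norm().item()
        for name, p in model.named_parameters()
        if p.grad is not None
    }

torch.manual_seed(0)
model = nn.Linear(4, 2)  # hypothetical stand-in for the real model
loss = model(torch.randn(8, 4)).pow(2).mean()
loss.backward()

norms = grad_norms(model)
for name, norm in norms.items():
    print(f"{name}: grad norm = {norm:.3e}")
```

Logging these norms every iteration makes it easier to see at which step, and in which layer, the gradients jump.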

Could you please tell me why?

I’m not sure, but my guess is that it comes from how RMSprop works internally.

This optimizer divides the gradient by the square root of a running average of its recent squared magnitudes.

If your gradients are already quite small because you’ve trained your model for a few epochs, I assume this division might blow up the update.
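To make the division concrete, here is a minimal pure-Python sketch of the update for a single scalar parameter. It assumes the plain rule (no momentum, not centered) with the default hyperparameters of `torch.optim.RMSprop` (`lr=0.01`, `alpha=0.99`, `eps=1e-8`). `rmsprop_step` is a hypothetical helper written for illustration, not a library function, and the constant gradient of `1e-6` is made up.

```python
import math

def rmsprop_step(grad, square_avg, lr=0.01, alpha=0.99, eps=1e-8):
    """One RMSprop update for a scalar; returns (step size, new running average)."""
    # Running average of the squared gradient.
    square_avg = alpha * square_avg + (1 - alpha) * grad * grad
    # The gradient is divided by the root of that average (plus eps for stability).
    step = lr * grad / (math.sqrt(square_avg) + eps)
    return step, square_avg

grad = 1e-6        # assumed: a steady, tiny gradient late in training
square_avg = 0.0
for _ in range(1000):
    step, square_avg = rmsprop_step(grad, square_avg)

sgd_step = 0.01 * grad  # what plain SGD would apply with the same lr
print(f"RMSprop step: {step:.3e}, SGD step: {sgd_step:.3e}")
```

With a steady tiny gradient the normalized step ends up close to `lr` itself, so it is orders of magnitude larger than the SGD step for the same gradient. Whether that is what happens in your model is still a guess.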

Thanks a lot for your answer.