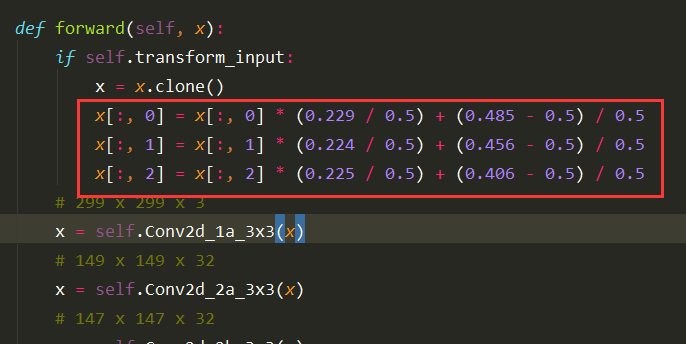

In inception v3 model, the input is transformed:

When we normalize input, we normally transform it like this: `input[channel] = (input[channel] - mean[channel]) / std[channel]`.

But what is PyTorch doing here? Is this also some kind of normalization?

I'd appreciate it if anyone could share their opinion.

Thanks very much.


chenyuntc

October 16, 2017, 1:53pm


I guess these numbers are the per-channel mean and standard deviation computed over the ImageNet images.

It's doing a kind of reverse transform (un-normalization) and then mapping the data to the range [-1, 1].

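```python
import torchvision.transforms as transforms

# Standard ImageNet normalization used with torchvision's pre-trained models
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
```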
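A minimal plain-Python sketch of that reverse transform for a single RGB pixel, assuming the original pixel values lie in [0, 1]. The function names `pytorch_normalize`, `unnormalize` and `inception_normalize` are hypothetical; this only mirrors the arithmetic and is not the torchvision implementation.

```python
# Per-channel ImageNet statistics used by the PyTorch data loader (from the post above).
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def pytorch_normalize(pixel):
    """Apply (p - mean) / std per channel, as transforms.Normalize does."""
    return [(p - m) / s for p, m, s in zip(pixel, MEAN, STD)]


def unnormalize(pixel):
    """Undo (p - mean) / std per channel, recovering values in [0, 1]."""
    return [x * s + m for x, m, s in zip(pixel, MEAN, STD)]


def inception_normalize(pixel):
    """Apply the Inception v3 convention (p - 0.5) / 0.5, mapping [0, 1] to [-1, 1]."""
    return [(p - 0.5) / 0.5 for p in pixel]


# A white pixel as the PyTorch data loader would hand it to the model.
white = pytorch_normalize([1.0, 1.0, 1.0])
print(inception_normalize(unnormalize(white)))  # approximately [1.0, 1.0, 1.0]
```

Black pixels end up near -1 and mid-gray pixels near 0, which is the [-1, 1] range the Google weights expect.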

colesbury

October 17, 2017, 5:27am


It's there to convert inputs normalized one way into inputs normalized differently. The Inception v3 model uses the pre-trained weights from Google, which assume images (with pixel values in [0, 1]) are normalized as (img - 0.5) / 0.5, so that inputs lie between -1 and 1.

The PyTorch data loader transforms images using (roughly):

```python
mean = [0.485, 0.456, 0.406]
std = [0.229, 0.224, 0.225]
img[c] = (img[c] - mean[c]) / std[c]
```

This gives the ImageNet training set approximately zero mean and unit variance in each channel.
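To see why subtracting the mean and dividing by the standard deviation gives zero mean and unit variance, here is a small sketch on a made-up list of red-channel values (the data is hypothetical, not ImageNet). The property only holds exactly when `mean` and `std` are computed from the same data being normalized; on other images the PyTorch constants give roughly, not exactly, zero mean and unit variance.

```python
from statistics import mean, pstdev

# Hypothetical red-channel pixel values from a tiny made-up "dataset".
red = [0.2, 0.4, 0.6, 0.8, 0.5]

mu = mean(red)       # plays the role of mean[c]
sigma = pstdev(red)  # plays the role of std[c] (population standard deviation)

# Same formula as img[c] = (img[c] - mean[c]) / std[c]
normalized = [(v - mu) / sigma for v in red]

print(mean(normalized), pstdev(normalized))  # close to 0 and 1
```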

The code in the Inception model reverses the PyTorch transformation and then applies the transform from Inception v3.
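Worked out per channel $c$, with $\mu_c$ and $\sigma_c$ the PyTorch mean and std, $p \in [0, 1]$ the original pixel value, and $x$ the PyTorch-normalized input, the two steps collapse into a single scale and shift. This is a derivation of the arithmetic, not a quote of the model code:

$$
x = \frac{p - \mu_c}{\sigma_c}
\quad\Longrightarrow\quad
p = \sigma_c\, x + \mu_c
$$

$$
y = \frac{p - 0.5}{0.5} = \frac{\sigma_c}{0.5}\, x + \frac{\mu_c - 0.5}{0.5}
$$

Plugging in the ImageNet numbers gives $y = 0.458\,x - 0.03$ for red, $y = 0.448\,x - 0.088$ for green, and $y = 0.45\,x - 0.188$ for blue.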
