Hi,

I am building an auto-encoder with batch training for a sequence prediction task. I have run into an issue that I suspect is caused by backpropagation not reaching the entire network; as a result, the error doesn't go down. Is there a way to check which layers backward() actually propagates through?

Here is what my code looks like:

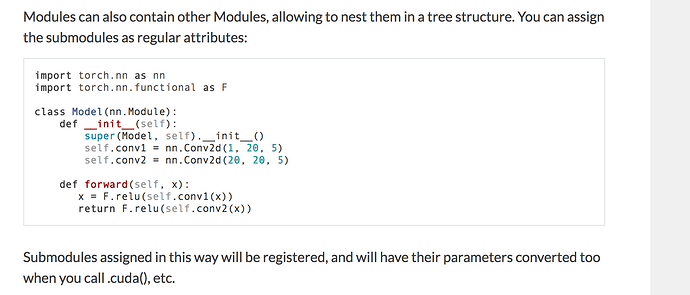

class autoencoder(nn.Module):

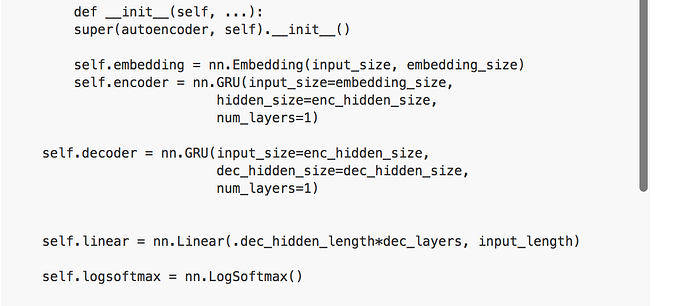

def __init__(self, ...):

super(autoencoder, self).__init__()

self.embedding = nn.Embedding(input_size, embedding_size)

self.encoder = nn.GRU(input_size=embedding_size,

hidden_size=enc_hidden_size,

num_layers=1)

self.decoder = nn.GRU(input_size=enc_hidden_size,

dec_hidden_size=dec_hidden_size,

num_layers=1)

self.linear = nn.Linear(.dec_hidden_length*dec_layers, input_length)

self.logsoftmax = nn.LogSoftmax()

def forward(input_batch, target_batch):

input = Variable(input_batch)

input = self.embedding(input)

# output of embedding is (batch_size x seq_len x embeddings_dim) but input of GRU is (seq_len x batch_size x input_size)

# so the following transpose is necessary

input = torch.transpose(input, 0, 1)

input = torch.nn.functional.relu(input)

h1 = Variable(torch.zeros(num_layers, batch_size, hidden_size))

out1, h1 = self.encoder(input, h1)

target = Variable(target_batch)

# concatenate a start_of_sentence token to the beginning of each sequence

sos = torch.from_numpy(numpy.full((batch_size, 1), index_of_sos))

# each batch is a 2d matrix where rows represent sentences

target = Variable(torch.cat((sos, target), 1))

target = self.embedding(target)

target = torch.nn.functional.relu(target)

out2, h2 = self.decoder(target, h1) # out2 is (seq_length x batch_size x hidden_length)

out2 = self.linear(out2.view(-1, out2.size(2)))

out2 = self.logsoftmax(out2)

return(out2)

Then, in another function, train(), I call forward with the appropriate arguments:

ae = autoencoder(...)

optimizer = torch.optim.SGD(ae.parameters(), lr=0.05)

criterion = torch.nn.NLLLoss()

for it in range(0, num_iterations):

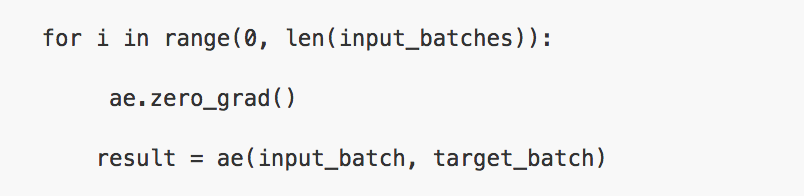

for i in range(0, len(input_batches)):

ae.zero_grad()

result = ae(input_batch, target_batch)

# concatenate an end_of_sentence token to the end of each sequence

eos = torch.from_numpy(numpy.full((batch_size, 1), index_of_eos))

tar_batch = Variable(torch.FloatTensor(torch.cat((target_batches[i], eos), 1)))

error = criterion(result, Variable(tar_batch))

error.backward()

optimizer.step()

When I train the model, the error drops only slightly and then plateaus, fluctuating around the same value. More specifically, the error stays quite high even if I dramatically reduce the size of the training data and increase the number of epochs. I wonder whether my code uses backward() and torch.optim correctly. I am also not sure whether the result returned by the autoencoder retains the entire computational graph.
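
To make the question concrete, here is a minimal sketch of the kind of check I have in mind. After backward(), any parameter that was reached by backpropagation should have a non-None .grad, so listing the gradient norm per parameter should show which layers were touched. The toy model, its sizes, and the grad_report helper are hypothetical stand-ins for illustration, not the autoencoder above.

```python
import torch
import torch.nn as nn


def grad_report(model):
    """Map each parameter name to its gradient norm, or None if no gradient arrived."""
    report = {}
    for name, param in model.named_parameters():
        report[name] = None if param.grad is None else param.grad.norm().item()
    return report


# Toy stand-in (hypothetical sizes): embedding -> GRU -> linear, plus one layer
# that is deliberately left out of the forward pass.
torch.manual_seed(0)
toy = nn.ModuleDict({
    "embedding": nn.Embedding(10, 4),
    "gru": nn.GRU(input_size=4, hidden_size=6),
    "linear": nn.Linear(6, 10),
    "unused": nn.Linear(6, 6),
})

tokens = torch.randint(0, 10, (5, 3))             # (seq_len x batch_size)
out, _ = toy["gru"](toy["embedding"](tokens))     # (seq_len x batch_size x hidden)
log_probs = torch.log_softmax(toy["linear"](out.reshape(-1, 6)), dim=1)
loss = nn.functional.nll_loss(log_probs, tokens.reshape(-1))
loss.backward()

# A non-None grad_fn means the output is still attached to the computational graph.
print("output attached to graph:", log_probs.grad_fn is not None)

report = grad_report(toy)
for name, norm in report.items():
    print(name, "NO GRADIENT" if norm is None else f"{norm:.4f}")
```

In this sketch the parameters of the unused layer report no gradient, while the embedding, GRU, and linear parameters report a norm. Calling the same helper on ae right after error.backward() would presumably show whether the embedding and encoder are being reached.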