I ran an experiment with PyTorch tensors. The code is shown below.

    import torch

    t = torch.tensor([
        [1, 2],
        [3, 4]
    ])

    t2 = torch.tensor([
        [5, 6],
        [7, 8]
    ])

    print('id (t[0][0]):', id(t[0][0]))
    print('id (t2[0][0]):', id(t2[0][0]))

    t[0][0] = t2[0][0]

    print('t:', t)
    print('t2:', t2)

    print('id (t[0][0]):', id(t[0][0]))
    print('id (t2[0][0]):', id(t2[0][0]))

Here is the output:

    id (t[0][0]): 1663486076824
    id (t2[0][0]): 1663486077112
    t: tensor([[5, 2],
            [3, 4]])
    t2: tensor([[5, 6],
            [7, 8]])
    id (t[0][0]): 1663486077184
    id (t2[0][0]): 1663486077184

Why do the ids of both t[0][0] and t2[0][0] change after the assignment?

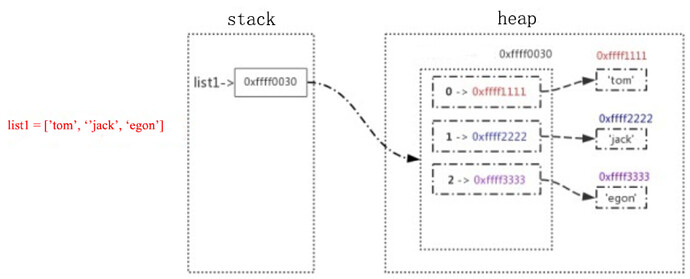

And here is the same experiment with a plain Python list, for comparison.

    t = [
        [1, 2],
        [3, 4]
    ]

    t2 = [
        [5, 6],
        [7, 8]
    ]

    print('id (t[0][0]):', id(t[0][0]))
    print('id (t2[0][0]):', id(t2[0][0]))

    t[0][0] = t2[0][0]

    print('t:', t)
    print('t2:', t2)

    print('id (t[0][0]):', id(t[0][0]))
    print('id (t2[0][0]):', id(t2[0][0]))

The output for the list:

    id (t[0][0]): 1522970848
    id (t2[0][0]): 1522970976
    t: [[5, 2], [3, 4]]
    t2: [[5, 6], [7, 8]]
    id (t[0][0]): 1522970976
    id (t2[0][0]): 1522970976

As you can see, the list and the tensor behave differently here: with the list, only the id of t[0][0] changes, while with the tensor both ids change. I would like to know how PyTorch manages memory (e.g. references, in-place changes, and assignment).
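A possible follow-up check, as a minimal sketch rather than a definitive explanation: `id()` reports the identity of the Python object, and indexing a tensor appears to build a fresh Python wrapper object each time, so the ids above may simply be reused addresses of short-lived temporaries. Comparing `Tensor.data_ptr()`, which returns the address of the underlying data, might be more telling. The variable names `a`, `b`, and `ptr_*` are made up for this illustration.

```python
import torch

t = torch.tensor([[1, 2], [3, 4]])
t2 = torch.tensor([[5, 6], [7, 8]])

# Two lookups of the same element, both kept alive at the same time.
a = t[0][0]
b = t[0][0]
print('same Python object:', a is b)
print('same data address:', a.data_ptr() == b.data_ptr())

# Record the data addresses before the assignment.
ptr_t_before = t[0][0].data_ptr()
ptr_t2_before = t2[0][0].data_ptr()

# Same assignment as in the experiment above.
t[0][0] = t2[0][0]

ptr_t_after = t[0][0].data_ptr()
ptr_t2_after = t2[0][0].data_ptr()

print('t data address unchanged:', ptr_t_before == ptr_t_after)
print('t2 data address unchanged:', ptr_t2_before == ptr_t2_after)
print('t and t2 use different addresses:', ptr_t_after != ptr_t2_after)

# If the assignment copied the value, changing t2 should not affect t.
t2[0][0] = 100
print('t[0][0] after changing t2:', t[0][0].item())
```

If the data addresses stay the same while the ids differ, that would suggest the assignment copies the value into t's existing storage, and that `id()` on an indexed tensor element says little about where the data lives.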