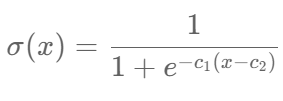

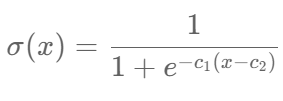

Hello, I want to ask about the sigmoid function and its general form:

Is it always centered on zero in PyTorch? Is c1 a constant, or are the parameters updated during training?

Hi,

As per the Sigmoid page of the PyTorch 2.2 documentation, the sigmoid function above is always centred on zero, so c1=1 and c2=0 in your equation. The sigmoid implementation has no learnable parameters; it is just a non-linear transformation applied to the input.
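A quick way to check this yourself is the minimal sketch below. It assumes only the standard `torch.nn.Sigmoid` module; the variable names are just for illustration. It counts the parameters the module registers and evaluates it at zero, where an unshifted sigmoid should return 0.5.

```python
import torch
import torch.nn as nn

sigmoid = nn.Sigmoid()

# The module registers no parameters, so an optimizer has nothing to update.
num_params = sum(p.numel() for p in sigmoid.parameters())
print(num_params)  # 0

# sigmoid(0) = 1 / (1 + exp(0)) = 0.5, the midpoint of the curve.
midpoint = sigmoid(torch.tensor(0.0))
print(midpoint.item())  # 0.5

# Symmetry about that midpoint: sigmoid(x) + sigmoid(-x) = 1.
x = torch.tensor([-2.0, -0.5, 0.5, 2.0])
is_symmetric = torch.allclose(sigmoid(x) + sigmoid(-x), torch.ones_like(x))
print(is_symmetric)
```

Since the parameter count is zero, nothing in the sigmoid itself changes during training; only the layers feeding into it do.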

Activation functions have no parameters to learn.

No, you can define a parameter for certain activation functions, such as LeakyReLU, using nn.Parameter and let the model learn the best slope for the negative values.
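Something along these lines might work as a rough sketch. `LearnableLeakyReLU` and `init_slope` are hypothetical names made up for this example, not part of PyTorch; the only library pieces assumed are `nn.Module`, `nn.Parameter`, and `torch.where`.

```python
import torch
import torch.nn as nn


class LearnableLeakyReLU(nn.Module):
    """Hypothetical module: a leaky ReLU whose negative slope is trained."""

    def __init__(self, init_slope: float = 0.01):
        super().__init__()
        # Wrapping the slope in nn.Parameter registers it with the module,
        # so it shows up in .parameters() and receives gradients.
        self.slope = nn.Parameter(torch.tensor(init_slope))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Identity for non-negative inputs, slope * x for negative inputs.
        return torch.where(x >= 0, x, self.slope * x)


act = LearnableLeakyReLU(init_slope=0.1)
x = torch.tensor([-2.0, -1.0, 1.0, 2.0])
y = act(x)

# Backpropagate a dummy loss to confirm the slope receives a gradient.
y.sum().backward()
slope_grad = act.slope.grad.item()  # sum of the negative inputs
print(len(list(act.parameters())), slope_grad)
```

Passing `act.parameters()` (or the parameters of a model that contains it) to an optimizer would then update the slope along with the other weights. PyTorch also ships `nn.PReLU`, which has a learnable negative slope built in.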

good point!

Is there any paper that focuses on learnable parameters of activation functions?