I load my pickle file as follows:

    with open(os.path.join(".", picklePath), "rb") as fh:
        data = pickle.load(fh)

and from that I set my datasets for images and labels:

    X_tr = data['training_data'][0]
    y_tr = data['training_data'][1].ravel()

    X_v = data['validation_data'][0]
    y_v = data['validation_data'][1].ravel()

    X_t = data['test_data'][0]
    y_t = data['test_data'][1].ravel()

I take a look at the first image and it looks as expected, so I create this transform (note that the resize is commented out):

    _transforms = transforms.Compose([
        transforms.ToPILImage(),
        # transforms.Resize((ImageSize.width, ImageSize.height)),
        transforms.Grayscale(num_output_channels=1),
        transforms.ToTensor(),
    ])

I then use this transform to create custom datasets in which the labels and the images are aligned:

    train_dataset = EmotionDatasetTrain(X_tr, y_tr, transform=_transforms)
    test_dataset = EmotionDatasetTest(X_t, y_t, transform=_transforms)
    val_dataset = EmotionDatasetVal(X_v, y_v, transform=_transforms)

The custom dataset is defined as follows (similar classes exist for Test and Validation):

    class EmotionDataSet():
        def __init__(self, transform):
            self.transform = transform
            self.classes = ["neutral", "anger", "contempt", "disgust",
                            "fear", "happy", "sadness", "surprise"]

        def get_x_y(self, idx, _X, _Y):
            if torch.is_tensor(idx):
                idx = idx.tolist()
            y = _Y[idx].item()
            # Reshape the flat sample into a 2-D image, then apply the transform if one is set.
            x = _X[idx].reshape(ImageSize.width, ImageSize.height)
            if self.transform:
                x = self.transform(x)
            return x, y

    class EmotionDatasetTrain(EmotionDataSet, Dataset):
        def __init__(self, X_Train, Y_Train, transform=None):
            super().__init__(transform)
            self.X_Train = X_Train
            self.Y_Train = Y_Train

        def __len__(self):
            return len(self.X_Train)

        def __getitem__(self, idx):
            x, y = super().get_x_y(idx, self.X_Train, self.Y_Train)
            return x, y
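To make the `reshape` step in `get_x_y` concrete, here is a minimal NumPy sketch. It assumes each sample is stored as a flat vector and that `ImageSize.width` and `ImageSize.height` are both 100; the names `flat`, `image` and `mismatch_raises` are illustrative only. Reshaping just regroups the existing values into rows and columns, so the element count has to match exactly. It never interpolates or creates pixels, which is what a resize does to an image that already has two spatial dimensions.

```python
import numpy as np

WIDTH, HEIGHT = 100, 100  # assumed values of ImageSize.width / ImageSize.height

# Hypothetical flat sample: one image stored as WIDTH * HEIGHT floats.
flat = np.arange(WIDTH * HEIGHT, dtype=np.float32)

# reshape regroups the same values into a 2-D grid; no pixel value changes.
image = flat.reshape(HEIGHT, WIDTH)
print(image.shape)   # (100, 100)
print(image[1, 0])   # 100.0, i.e. the first value of the second row

# A vector with the wrong number of elements cannot be reshaped at all.
try:
    np.zeros(1000, dtype=np.float32).reshape(HEIGHT, WIDTH)
    mismatch_raises = False
except ValueError:
    mismatch_raises = True
print(mismatch_raises)  # True
```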

And I have a dataloader:

    train_loader = torch.utils.data.DataLoader(
        train_dataset,
        batch_size=Settings.batchSize,
        num_workers=0,
        shuffle=True,
    )

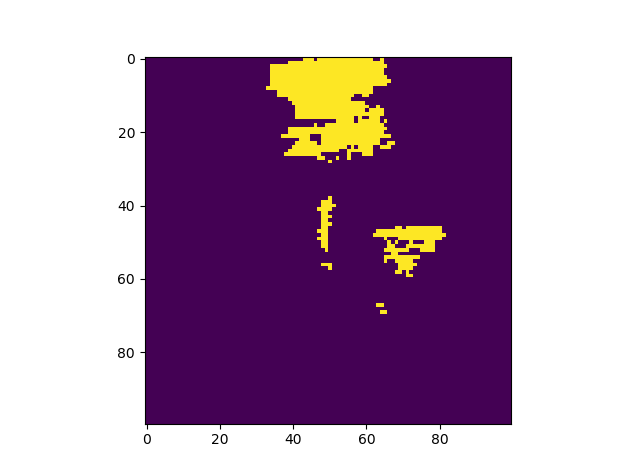

My problem is that although the images are read in correctly and display properly in Matplotlib, after the transform they deteriorate considerably when viewed the same way.
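A note on the display step itself: for a 2-D (single-channel) array, Matplotlib's `imshow` maps values through a colormap, and with default settings that colormap is `viridis`, which runs from dark purple to yellow. The sketch below is only an illustration under assumptions: it supposes a transformed sample has shape `(1, H, W)` and has been converted to a NumPy array, and the `sample` array is synthetic stand-in data rather than the real dataset.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

# Synthetic stand-in for one transformed sample: shape (1, H, W), values in [0, 1].
sample = np.linspace(0.0, 1.0, 100 * 100, dtype=np.float32).reshape(1, 100, 100)

# Drop the channel axis, since imshow expects (H, W) for a single-channel image.
img = sample.squeeze(0)

fig, (ax_default, ax_gray) = plt.subplots(1, 2)
default_plot = ax_default.imshow(img)  # uses the default colormap
gray_plot = ax_gray.imshow(img, cmap="gray", vmin=0.0, vmax=1.0)  # explicit greyscale

print(default_plot.get_cmap().name)  # the default colormap name
print(gray_plot.get_cmap().name)     # gray
plt.close(fig)
```

Passing `cmap="gray"` changes only how the values are coloured on screen. It does not change the tensor that is fed to the network.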

The image is mostly purple with yellow outlines of the face. The resolution has gone completely and the faces are barely recognisable. When I run the images through the CNN, the loss barely changes from epoch to epoch, which is hardly surprising given how crude the images are. I notice the following.

1/ I was hoping the Resize transform would convert an array of 10,000 floats into a 100 * 100 matrix. It does not, so I use a reshape instead. I clearly misunderstand the Resize transform.

2/ I expected the Grayscale transform to turn an RGB image into a single-channel grayscale image, so I thought I would see a black-and-white version of the colour photo at the same resolution. Instead, this appears to be the transform that radically changed the image as described earlier.
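One possible cause of the lost detail, offered as an assumption rather than a diagnosis: if the pickled arrays hold floats in the range [0, 1], any step that converts them to 8-bit integers without first scaling to [0, 255] leaves almost every pixel at 0. The sketch below uses a plain NumPy cast as a stand-in for such a conversion (it does not call torchvision), and the `pixels` array is synthetic.

```python
import numpy as np

# Synthetic stand-in for one image: 10,000 floats evenly spread over [0, 1].
pixels = np.linspace(0.0, 1.0, 100 * 100, dtype=np.float32).reshape(100, 100)

# Casting directly to 8-bit truncates every value below 1.0 down to 0.
unscaled = pixels.astype(np.uint8)

# Scaling to [0, 255] first keeps the full range of grey levels.
scaled = (pixels * 255).round().astype(np.uint8)

print(np.unique(unscaled).size)  # 2 distinct levels (0 and 1)
print(np.unique(scaled).size)    # 256 distinct levels
```

If the real data is already in [0, 255] or already stored as `uint8`, this explanation does not apply. Printing `X_tr.dtype`, `X_tr.min()` and `X_tr.max()` would show which case holds.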

I am new to all this. Can someone explain what is happening and how I can fix it?