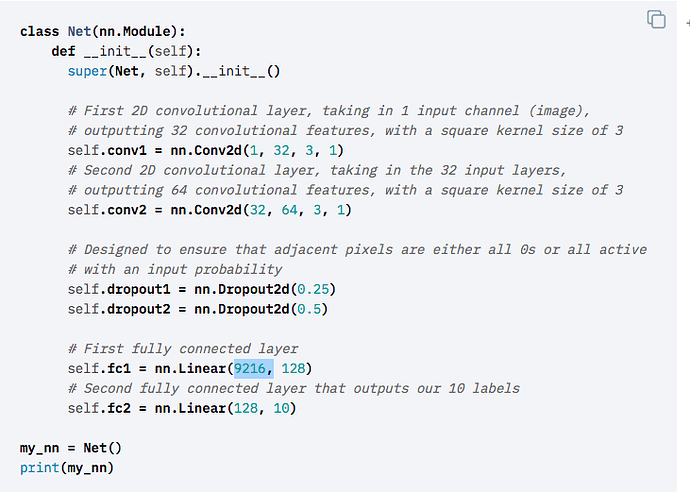

I was going through the Pytorch Recipe: Defining a Neural Network in Pytorch, and I didn’t understand what the torch.nn.Dropout2d function was doing and what its purpose was in the following algorithm in Step 2 of the recipe when it teaches us how to define and initialize the neural network.

Question 1: The comments say that the torch.nn.Dropout2d function is “Designed to ensure that adjacent pixels are either all 0s or all active with an input probability” – what “adjacent pixels” is this referring to, and what is the purpose of making them either all 0s or all active? also, what is the purpose of giving them a probability?

Question 2: Also, I don’t understand where the “9216” (i.e. the number highlighted) comes from for the first parameter in nn.Linear where self.fc1 is defined. The second convolutional layer outputted 64 features, but then the input to the first fully connected layer has 9216 features, so I don’t see the connection? I’m assuming what I’m missing is whatever the torch.nn.Dropout2d function is doing, or perhaps not, I’m not sure.

Any guidance on both of my above questions would be so appreciated, thank you!