The input to an LSTM has shape (seq_len, batch, input_size). In my deep learning course I was told that step t uses the information from step t - 1. But after reading both the Python and C++ source code, I couldn’t find any iteration over ‘time’ (i.e. over seq_len); it looks as if all time steps of the input are computed in parallel. So how exactly is the information passed from time step t - 1 to step t? And what is the difference between nn.LSTM and nn.LSTMCell? Is nn.LSTM just a multi-layer version of nn.LSTMCell?

I’m so confused

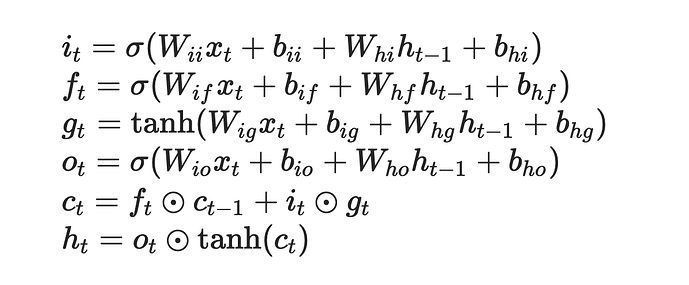

Let's take an example. Suppose the input has shape (batch, seq_len, input_size), which is the layout nn.LSTM expects when batch_first=True. Each sequence in the batch goes through the LSTM formula in parallel with the other sequences. For example, take an input of shape (2, 2, 2): reading from the left, batch=2, seq_len=2, and input_size=2.

    tensor([[[0.6451, 0.8479],
             [0.3435, 0.2441]],

            [[0.0185, 0.4722],
             [0.8744, 0.2785]]])

[0.6451, 0.8479] = SEQ1 BATCH1

[0.3435, 0.2441] = SEQ2 BATCH1

[0.0185, 0.4722] = SEQ1 BATCH2

[0.8744, 0.2785] = SEQ2 BATCH2

TIME1: SEQ1 BATCH1 goes into the LSTM formula and, at the same time, SEQ1 BATCH2 goes into the formula separately. So this step outputs two h<t>, one per batch element.

TIME2: SEQ2 BATCH1 and SEQ2 BATCH2 go into the formula in the same way, but now the h<t-1> for SEQ2 BATCH1 is the h<t> produced by SEQ1 BATCH1. Similarly, the h<t-1> for SEQ2 BATCH2 is the h<t> produced by SEQ1 BATCH2.
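The TIME1/TIME2 walk-through can be traced with a small plain-Python sketch. Note the assumptions: `step` is a hypothetical stand-in that only records which input and which previous hidden state it received, it does no LSTM math, and `h0` is just a label for the initial hidden state.

```python
def step(x_label, h_prev):
    """Stand-in for one LSTM step: records its arguments instead of doing math."""
    return f"h({x_label}, {h_prev})"

# inputs[b][t] is the label of time step t of batch element b (batch-first layout).
inputs = [
    ["SEQ1 BATCH1", "SEQ2 BATCH1"],
    ["SEQ1 BATCH2", "SEQ2 BATCH2"],
]
batch_size, seq_len = len(inputs), len(inputs[0])

# One initial hidden state per batch element.
h = ["h0"] * batch_size

for t in range(seq_len):  # sequential: step t needs the h produced at step t - 1
    # Batch elements never read each other's h, so this part can run in parallel.
    h = [step(inputs[b][t], h[b]) for b in range(batch_size)]
    print(f"TIME{t + 1}: {h}")
```

After the loop, the first entry of `h` only mentions BATCH1 labels and the second only BATCH2 labels: the hidden state is carried along the time axis, never across the batch.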

I hope this helps. nn.RNN works in a similar way to nn.LSTM. You can watch my YouTube video on how it works with pen and paper:

torch.rnn explained with Pen and Paper

As for the difference between nn.LSTM and nn.LSTMCell: nn.LSTMCell computes a single time step of the formula, while nn.LSTM is a layer that conceptually applies that same step in a for loop over the whole sequence (and can stack several such layers via num_layers).
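To make the cell-versus-layer split concrete, here is a minimal plain-Python sketch with scalar input and scalar state. It is not PyTorch's implementation: `lstm_cell`, `lstm_layer`, the parameter names, and the parameter values are all hypothetical, and each gate uses a single bias where PyTorch keeps two. The gate order (input, forget, cell, output) follows the usual LSTM equations.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_cell(x, h_prev, c_prev, p):
    """One time step (the role nn.LSTMCell plays), with scalar input and state."""
    i = sigmoid(p["w_ii"] * x + p["w_hi"] * h_prev + p["b_i"])    # input gate
    f = sigmoid(p["w_if"] * x + p["w_hf"] * h_prev + p["b_f"])    # forget gate
    g = math.tanh(p["w_ig"] * x + p["w_hg"] * h_prev + p["b_g"])  # cell candidate
    o = sigmoid(p["w_io"] * x + p["w_ho"] * h_prev + p["b_o"])    # output gate
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

def lstm_layer(xs, h0, c0, p):
    """A whole sequence (the role nn.LSTM plays): the cell inside a time loop."""
    h, c = h0, c0
    outputs = []
    for x in xs:
        h, c = lstm_cell(x, h, c, p)  # the state from step t - 1 feeds step t
        outputs.append(h)
    return outputs, (h, c)

# Hypothetical parameter values, chosen only for illustration.
params = {name: 0.5 for name in
          ["w_ii", "w_hi", "b_i", "w_if", "w_hf", "b_f",
           "w_ig", "w_hg", "b_g", "w_io", "w_ho", "b_o"]}

outputs, (h_n, c_n) = lstm_layer([0.6451, 0.3435], 0.0, 0.0, params)
print(outputs, h_n, c_n)
```

The layer returns one hidden state per time step plus the final (h, c) pair, which mirrors the (output, (h_n, c_n)) return value of nn.LSTM; calling the cell by hand twice gives the same final state.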

Reference: PyTorch LSTM vs LSTMCell

This loop exists, usually at the deepest level.

This is correct. The non-recurrent input-to-hidden computations do not depend on the previous hidden state, so they can be performed separately, for all time steps at once, for better performance. Their results are later used in the hidden-to-hidden computation loop.
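A minimal sketch of that split, using a scalar tanh RNN instead of a full LSTM to keep it short. The weights, the input values, and the function names are hypothetical. The `pre_compute_input` flag in the LSTMCell code from RNN.cpp seems to serve the same purpose, but that reading is an assumption.

```python
import math

# Hypothetical scalar weights and a short input sequence, for illustration only.
w_ih, w_hh, bias = 0.8, -0.3, 0.1
xs = [0.6451, 0.3435, 0.0185, 0.8744]

def rnn_naive(xs, h0=0.0):
    """Compute both projections inside the time loop."""
    h, outputs = h0, []
    for x in xs:
        h = math.tanh(w_ih * x + bias + w_hh * h)
        outputs.append(h)
    return outputs

def rnn_precomputed(xs, h0=0.0):
    """Project all inputs first, then loop only over the recurrent part."""
    # No dependence on h here, so every time step can be computed at once
    # (with tensors this would be one batched matrix multiply).
    input_part = [w_ih * x + bias for x in xs]
    h, outputs = h0, []
    for pre in input_part:  # this loop is still sequential
        h = math.tanh(pre + w_hh * h)
        outputs.append(h)
    return outputs

naive = rnn_naive(xs)
fast = rnn_precomputed(xs)
print(all(math.isclose(a, p) for a, p in zip(naive, fast)))
```

Both versions give the same hidden states; only the input projection has moved out of the loop, which is why reading the code can give the impression that everything is computed in parallel.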

This seems to be the deepest level I can find, but there is still no loop over time.

In pytorch/aten/src/ATen/native/RNN.cpp:

    template <typename cell_params>
    struct LSTMCell : Cell<std::tuple<Tensor, Tensor>, cell_params> {
      using hidden_type = std::tuple<Tensor, Tensor>;

      hidden_type operator()(
          const Tensor& input,
          const hidden_type& hidden,
          const cell_params& params,
          bool pre_compute_input = false) const override {
        const auto& hx = std::get<0>(hidden);
        const auto& cx = std::get<1>(hidden);

        if (input.is_cuda()) {
          TORCH_CHECK(!pre_compute_input);
          auto igates = params.matmul_ih(input);
          auto hgates = params.matmul_hh(hx);
          auto result = at::_thnn_fused_lstm_cell(
              igates, hgates, cx, params.b_ih(), params.b_hh());
          // applying projections if w_hr is defined
          auto hy = params.matmul_hr(std::get<0>(result));
          // Slice off the workspace argument (it's needed only for AD).
          return std::make_tuple(std::move(hy), std::move(std::get<1>(result)));
        }

        const auto gates = params.linear_hh(hx).add_(
            pre_compute_input ? input : params.linear_ih(input));
        auto chunked_gates = gates.unsafe_chunk(4, 1);
        auto ingate = chunked_gates[0].sigmoid_();
        auto forgetgate = chunked_gates[1].sigmoid_();
        auto cellgate = chunked_gates[2].tanh_();
        auto outgate = chunked_gates[3].sigmoid_();
        auto cy = (forgetgate * cx).add_(ingate * cellgate);
        auto hy = outgate * cy.tanh();
        hy = params.matmul_hr(hy);
        return std::make_tuple(std::move(hy), std::move(cy));
      }
    };

However, I can find a loop over the LSTM layers:

    template<typename io_type, typename hidden_type, typename weight_type>
    LayerOutput<io_type, std::vector<hidden_type>>
    apply_layer_stack(const Layer<io_type, hidden_type, weight_type>& layer, const io_type& input,
                      const std::vector<hidden_type>& hiddens, const std::vector<weight_type>& weights,
                      int64_t num_layers, double dropout_p, bool train) {
      TORCH_CHECK(num_layers == (int64_t)hiddens.size(), "Expected more hidden states in stacked_rnn");
      TORCH_CHECK(num_layers == (int64_t)weights.size(), "Expected more weights in stacked_rnn");

      auto layer_input = input;
      auto hidden_it = hiddens.begin();
      auto weight_it = weights.begin();
      std::vector<hidden_type> final_hiddens;
      for (int64_t l = 0; l < num_layers; ++l) {
        auto layer_output = layer(layer_input, *(hidden_it++), *(weight_it++));
        final_hiddens.push_back(layer_output.final_hidden);
        layer_input = layer_output.outputs;

        if (dropout_p != 0 && train && l < num_layers - 1) {
          layer_input = dropout(layer_input, dropout_p);
        }
      }

      return {layer_input, final_hiddens};
    }

Still very confused.

You’re looking at a convoluted C++ implementation, where the cells (i.e. the code for one time step) are “functors” and the loop sits at a higher level.

In the same file, look at the two FullLayer::operator() overloads: notice that inputs.unbind(0) produces a list of per-step inputs in one overload, and that the other overload loops over step_inputs.
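As a rough picture of how those pieces nest, here is a loose plain-Python analogue. It is a sketch under assumptions, not a translation of RNN.cpp: `TanhCell` is a hypothetical toy cell with scalar state, `full_layer` only imitates the role of the FullLayer loop, and dropout between layers is left out.

```python
import math

class TanhCell:
    """One-step 'functor': an object that is called like a function (toy cell)."""
    def __init__(self, w_ih, w_hh):
        self.w_ih, self.w_hh = w_ih, w_hh

    def __call__(self, x, h):
        return math.tanh(self.w_ih * x + self.w_hh * h)

def full_layer(cell, inputs, h0):
    """Loop over time: the part that the cell itself does not contain."""
    step_inputs = list(inputs)  # plays the role of inputs.unbind(0)
    h, step_outputs = h0, []
    for x in step_inputs:
        h = cell(x, h)
        step_outputs.append(h)
    return step_outputs, h

def apply_layer_stack(cells, inputs, h0s):
    """Loop over layers: each layer's outputs become the next layer's inputs."""
    layer_input, final_hiddens = inputs, []
    for cell, h0 in zip(cells, h0s):
        layer_input, h_n = full_layer(cell, layer_input, h0)
        final_hiddens.append(h_n)
    return layer_input, final_hiddens

# Two stacked layers with hypothetical weights, run over a two-step sequence.
cells = [TanhCell(0.8, -0.3), TanhCell(0.5, 0.2)]
outputs, final_hiddens = apply_layer_stack(cells, [0.6451, 0.3435], [0.0, 0.0])
print(outputs, final_hiddens)
```

So there are two nested loops: the outer one over layers, which is the one visible in apply_layer_stack, and the inner one over time steps, which lives in the layer rather than in the cell.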