Hi there.

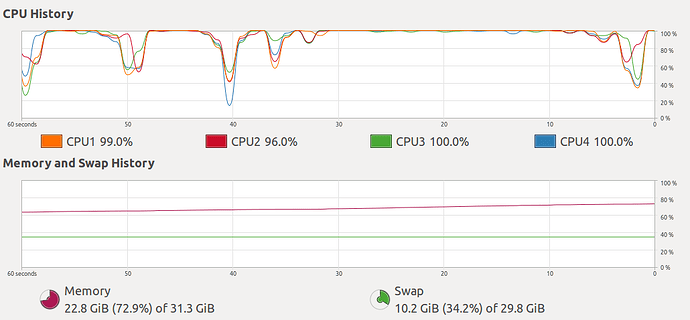

So it turns out it’s a RAM issue rather than a GPU memory issue. I’ve been trying to locate where it’s coming from, but have had no luck solving it.
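Since the problem is host RAM, one way to confirm it is to log the process's peak resident set size next to what tracemalloc itself is tracing at each step. Below is a minimal sketch using only the standard library. `report` and the bytearray loop are hypothetical stand-ins for a real training loop, and `resource` is Unix-only (`ru_maxrss` is in KiB on Linux). Keep in mind that tracemalloc only counts memory allocated through Python's own allocators, so memory a C extension allocates directly may be missing from its totals even though it shows up in RSS.

```python
import resource
import tracemalloc


def peak_rss_kib():
    # Peak resident set size of this process; ru_maxrss is KiB on Linux (bytes on macOS).
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss


def report(step):
    # tracemalloc only counts blocks allocated through Python's allocators.
    current, peak = tracemalloc.get_traced_memory()
    rss = peak_rss_kib()
    print("step %d: peak RSS %d KiB, traced %.1f KiB (traced peak %.1f KiB)"
          % (step, rss, current / 1024, peak / 1024))
    return rss, current


if __name__ == "__main__":
    tracemalloc.start()
    kept = []
    for step in range(3):
        # Hypothetical stand-in for one training step that keeps 10 MiB alive.
        kept.append(bytearray(10 * 1024 * 1024))
        report(step)
```

If peak RSS keeps climbing while the traced total stays small, the growth is likely happening outside Python's allocators.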

I just ran tracemalloc and it gave me the following output:

10 files allocating the most memory:

```
<frozen importlib._bootstrap_external>:487: size=500 KiB, count=5186, average=99 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/pgen2/grammar.py:108: size=345 KiB, count=5489, average=64 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/torch/nn/functional.py:1189: size=138 KiB, count=5040, average=28 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/torch/serialization.py:469: size=98.0 KiB, count=1105, average=91 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/numpy/lib/arraypad.py:945: size=92.0 KiB, count=1311, average=72 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/torch/tensor.py:33: size=84.7 KiB, count=1920, average=45 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/torch/_utils.py:94: size=78.7 KiB, count=1007, average=80 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/torch/tensor.py:36: size=65.8 KiB, count=702, average=96 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/torch/serialization.py:213: size=64.4 KiB, count=687, average=96 B
/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/pgen2/grammar.py:122: size=45.9 KiB, count=475, average=99 B
```
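The listing above appears to be grouped by source line (each entry is `file:line`), which is what `Snapshot.statistics("lineno")` produces. A minimal sketch of how such a report can be generated; `top_allocations` and the string workload are hypothetical. It also filters out the frozen import machinery, which is the largest entry in the output above, so the remaining lines are easier to read.

```python
import tracemalloc


def top_allocations(limit=10):
    """Return the `limit` source lines holding the most traced memory right now."""
    snapshot = tracemalloc.take_snapshot()
    # Hide allocations made by the import machinery and by tracemalloc itself.
    snapshot = snapshot.filter_traces((
        tracemalloc.Filter(False, "<frozen importlib._bootstrap>"),
        tracemalloc.Filter(False, "<frozen importlib._bootstrap_external>"),
        tracemalloc.Filter(False, tracemalloc.__file__),
    ))
    # Group by "file:line" and sort from the biggest to the smallest.
    return snapshot.statistics("lineno")[:limit]


if __name__ == "__main__":
    tracemalloc.start()
    # ... run the code under investigation here ...
    strings = [str(i) * 10 for i in range(100000)]  # hypothetical workload
    print("Top 10 lines allocating the most memory:")
    for stat in top_allocations(10):
        print(stat)
```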

And here is the 25-frame traceback:

```
5036 memory blocks: 317.3 KiB
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/pgen2/grammar.py", line 108
    d = pickle.load(f)
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/pgen2/driver.py", line 134
    g.load(gp)
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/pgen2/driver.py", line 159
    return load_grammar(grammar_source)
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/pygram.py", line 32
    python_grammar = driver.load_packaged_grammar("lib2to3", _GRAMMAR_FILE)
  File "<frozen importlib._bootstrap>", line 219
  File "<frozen importlib._bootstrap_external>", line 678
  File "<frozen importlib._bootstrap>", line 665
  File "<frozen importlib._bootstrap>", line 955
  File "<frozen importlib._bootstrap>", line 971
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/fixer_util.py", line 7
    from .pygram import python_symbols as syms
  File "<frozen importlib._bootstrap>", line 219
  File "<frozen importlib._bootstrap_external>", line 678
  File "<frozen importlib._bootstrap>", line 665
  File "<frozen importlib._bootstrap>", line 955
  File "<frozen importlib._bootstrap>", line 971
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/lib2to3/refactor.py", line 25
    from .fixer_util import find_root
  File "<frozen importlib._bootstrap>", line 219
  File "<frozen importlib._bootstrap_external>", line 678
  File "<frozen importlib._bootstrap>", line 665
  File "<frozen importlib._bootstrap>", line 955
  File "<frozen importlib._bootstrap>", line 971
  File "/home/nd26/anaconda3/envs/pytorch_env/lib/python3.6/site-packages/past/translation/__init__.py", line 42
    from lib2to3.refactor import RefactoringTool
  File "<frozen importlib._bootstrap>", line 219
  File "<frozen importlib._bootstrap_external>", line 678
  File "<frozen importlib._bootstrap>", line 665
```
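The "N memory blocks: X KiB" header followed by frames is what tracemalloc gives when it is started with enough frames (here 25) and the snapshot is grouped by `"traceback"`. A minimal sketch of producing that kind of report; `biggest_allocation_traceback` and the bytearray workload are hypothetical. Grouping by traceback shows the whole call chain; in the output above that chain runs from `past/translation/__init__.py` through `lib2to3` to the `pickle.load` in `grammar.py`.

```python
import tracemalloc


def biggest_allocation_traceback():
    """Return (count, size_kib, formatted_lines) for the traceback holding the most memory."""
    snapshot = tracemalloc.take_snapshot()
    # Group allocations by their full call stack, biggest first.
    top = snapshot.statistics("traceback")[0]
    return top.count, top.size / 1024, top.traceback.format()


if __name__ == "__main__":
    tracemalloc.start(25)  # store up to 25 frames per allocation
    blocks = [bytearray(512) for _ in range(5000)]  # hypothetical workload
    count, size_kib, lines = biggest_allocation_traceback()
    print("%s memory blocks: %.1f KiB" % (count, size_kib))
    for line in lines:
        print(line)
```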

I’m quite clueless as to what I should do next. Any advice?