Hi everyone

I am currently training an image segmentation network in PyTorch with a Hausdorff distance loss. To calculate the Hausdorff loss, I am using distance_transform_edt from scipy.ndimage, which is implemented in morphology.py (part of SciPy, as the traceback below shows).
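For context, here is a minimal sketch of how distance fields for a Hausdorff-style loss are commonly computed with distance_transform_edt. It is not the actual code from training_utils.py: the function name `compute_edts`, the (batch, height, width) mask layout, and the 0.5 threshold are assumptions made for illustration.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def compute_edts(masks: np.ndarray) -> np.ndarray:
    """Return one distance field per binary mask in a batch.

    masks: array of shape (batch, height, width); values above 0.5 count
    as foreground (assumed layout and threshold, for illustration only).
    """
    fields = np.zeros(masks.shape, dtype=np.float64)
    for i, mask in enumerate(masks):
        fg = mask > 0.5
        if fg.any():
            # Inside the object: distance to the nearest background pixel.
            # Outside the object: distance to the nearest foreground pixel.
            fields[i] = distance_transform_edt(fg) + distance_transform_edt(~fg)
    return fields


if __name__ == "__main__":
    batch = np.zeros((2, 8, 8), dtype=np.float32)
    batch[0, 2:6, 2:6] = 1.0  # one square object; the second mask stays empty
    edts = compute_edts(batch)
    print(edts.shape, edts[0].max(), edts[1].max())
```

Note that this runs on the CPU with NumPy arrays, so in a training loop the predictions and targets have to be moved off the GPU before the call.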

My training script works well on other platforms, including a PC (Intel i5-9400F, RTX 2060, Windows 10), Server 1 (AMD Ryzen 7 2700X, RTX A4000, Fedora 33), and Server 2 (AMD Ryzen 7 3700X, RTX A4000, Fedora 34).

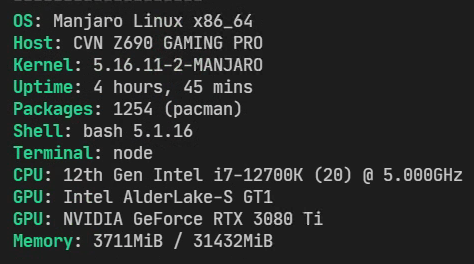

However, when I train the model on my Linux PC (Intel i7-12700K, RTX 3080 Ti, Manjaro, Linux kernel 5.16), the computer crashes repeatedly. Most of the time the training process simply terminates without a Python exception and reports a segmentation fault involving threading.py, queues.py, and morphology.py (details below). Sometimes it even causes a Linux kernel panic, and I have to force a reboot to regain control.

The crashes occur randomly. I have tried installing Ubuntu 20.04 LTS with Linux kernel 5.15 or 5.16, the PyTorch nightly build, scikit-learn-intelex, and the latest NumPy with MKL, but the problem persists.

I see no evidence of overheating or GPU memory exhaustion when monitoring with the sensors and nvidia-smi commands.
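To make that kind of check repeatable, GPU temperature and memory could be logged periodically during training. The following is only a sketch: the helper names `parse_gpu_stats` and `read_gpu_stats` are hypothetical, and it assumes every queried field comes back as a plain integer (some GPUs report a field as not available, which this parser does not handle).

```python
import shutil
import subprocess

# Query temperature (deg C) and memory (MiB) as bare CSV, one row per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=temperature.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(text):
    """Parse CSV rows of 'temperature, used MiB, total MiB' into dicts."""
    stats = []
    for line in text.strip().splitlines():
        temp, used, total = (int(field.strip()) for field in line.split(","))
        stats.append(
            {"temp_c": temp, "mem_used_mib": used, "mem_total_mib": total}
        )
    return stats


def read_gpu_stats():
    """Return current GPU stats, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_stats(result.stdout)
```

Calling `read_gpu_stats()` every few hundred steps and appending the result to a log file would show whether temperature or memory usage climbs shortly before a crash.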

I have noticed that Intel's 12th-generation Alder Lake CPUs changed parts of the architecture to improve performance, so I suspect this may be related.
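One way this suspicion could be tested is to restrict the training process to a fixed subset of logical CPUs and see whether the crashes still occur. This is a sketch under stated assumptions: the helper name `pin_to_cpus` is hypothetical, it relies on os.sched_setaffinity (Linux only), and which logical CPU numbers belong to which kind of core has to be checked on the machine itself, for example with `lscpu --extended`.

```python
import os


def pin_to_cpus(cpu_ids):
    """Restrict the current process to the given logical CPUs (Linux only).

    Returns the affinity set that is in effect afterwards.
    """
    if not hasattr(os, "sched_setaffinity"):
        raise OSError("os.sched_setaffinity is not available on this platform")
    available = os.sched_getaffinity(0)
    # Ignore requested CPUs that the process is not allowed to use anyway.
    wanted = set(cpu_ids) & available
    if not wanted:
        raise ValueError("none of the requested CPUs are available")
    os.sched_setaffinity(0, wanted)
    return os.sched_getaffinity(0)
```

Called at the very top of the training script, before any DataLoader workers are started, for example `pin_to_cpus(range(16))`, where the range 0 to 15 is only an assumed numbering of the performance-core threads. Child processes created afterwards normally inherit the affinity mask.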

Any idea what I can do?

Thanks in advance.

System Information

Message echoed from faulthandler

Start Validation

Epoch 32/2000: 100%|█████████████████████████████████| 99/99 [00:12<00:00, 7.67it/s, f_h=0.129, hd_h=0.0341, total_loss=0.163]

Finish Validation

Epoch:32/2000

Total Loss: 0.4968 || Val Loss: 0.0538

Epoch 33/2000: 25%|██████ | 201/800 [00:35<01:46, 5.64it/s, Total=0.13, f_h=0.106, hd_h=0.0239, s/step=0.53]

Fatal Python error: Segmentation fault

Thread 0x00007fbf7ba00640 (most recent call first):

File ".conda/envs/Torch/lib/python3.8/threading.py", line 302 in wait

File ".conda/envs/Torch/lib/python3.8/multiprocessing/queues.py", line 227 in _feed

File ".conda/envs/Torch/lib/python3.8/threading.py", line 870 in run

File ".conda/envs/Torch/lib/python3.8/threading.py", line 932 in _bootstrap_inner

File ".conda/envs/Torch/lib/python3.8/threading.py", line 890 in _bootstrap

Thread 0x00007fbf7a0b5640 (most recent call first):

File ".conda/envs/Torch/lib/python3.8/threading.py", line 302 in wait

File ".conda/envs/Torch/lib/python3.8/multiprocessing/queues.py", line 227 in _feed

File ".conda/envs/Torch/lib/python3.8/threading.py", line 870 in run

File ".conda/envs/Torch/lib/python3.8/threading.py", line 932 in _bootstrap_inner

File ".conda/envs/Torch/lib/python3.8/threading.py", line 890 in _bootstrap

Thread 0x00007fbf79530640 (most recent call first):

File ".conda/envs/Torch/lib/python3.8/threading.py", line 302 in wait

File ".conda/envs/Torch/lib/python3.8/multiprocessing/queues.py", line 227 in _feed

File ".conda/envs/Torch/lib/python3.8/threading.py", line 870 in run

File ".conda/envs/Torch/lib/python3.8/threading.py", line 932 in _bootstrap_inner

File ".conda/envs/Torch/lib/python3.8/threading.py", line 890 in _bootstrap

Thread 0x00007fbf7ac3a640 (most recent call first):

File ".conda/envs/Torch/lib/python3.8/threading.py", line 302 in wait

File ".conda/envs/Torch/lib/python3.8/multiprocessing/queues.py", line 227 in _feed

File ".conda/envs/Torch/lib/python3.8/threading.py", line 870 in run

File ".conda/envs/Torch/lib/python3.8/threading.py", line 932 in _bootstrap_inner

File ".conda/envs/Torch/lib/python3.8/threading.py", line 890 in _bootstrap

Thread 0x00007fbf75fff640 (most recent call first):

<no Python frame>

Thread 0x00007fbf7c585640 (most recent call first):

File ".conda/envs/Torch/lib/python3.8/threading.py", line 306 in wait

File ".conda/envs/Torch/lib/python3.8/threading.py", line 558 in wait

File ".conda/envs/Torch/lib/python3.8/site-packages/tqdm/_monitor.py", line 60 in run

File ".conda/envs/Torch/lib/python3.8/threading.py", line 932 in _bootstrap_inner

File ".conda/envs/Torch/lib/python3.8/threading.py", line 890 in _bootstrap

Current thread 0x00007fc078adf380 (most recent call first):

File ".conda/envs/Torch/lib/python3.8/site-packages/scipy/ndimage/morphology.py", line 2299 in distance_transform_edt

File "training_utils.py", line 190 in compute_edts_forhdloss

File "training_utils.py", line 203 in forward

File ".conda/envs/Torch/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1102 in _call_impl

File "training_script.py", line 68 in fit_epochs

File "training_script.py", line 296 in <module>
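A per-thread dump like the one above comes from Python's built-in faulthandler module. For readers who want to capture the same information, a minimal sketch of enabling it follows; the helper name `enable_crash_dumps` and the log file name are hypothetical, and writing to a file instead of stderr is just one option.

```python
import faulthandler


def enable_crash_dumps(log_path):
    """Write the Python stack of every thread to log_path on a fatal signal.

    faulthandler installs handlers for SIGSEGV, SIGFPE, SIGABRT, SIGBUS
    and SIGILL. The returned file object must stay open for as long as the
    handler is enabled.
    """
    log_file = open(log_path, "w")
    faulthandler.enable(file=log_file, all_threads=True)
    return log_file


if __name__ == "__main__":
    crash_log = enable_crash_dumps("crash_dump.log")  # hypothetical file name
    # ... run training here ...
```

The same handler can be switched on without code changes by starting the interpreter with `python -X faulthandler` or by setting the `PYTHONFAULTHANDLER` environment variable, in which case the dump goes to stderr.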

Packages in virtual environment

conda list -n Torch

packages in environment at .conda/envs/Torch:

Name Version Build Channel

_libgcc_mutex 0.1 main

_openmp_mutex 4.5 1_gnu

blas 1.0 mkl

bottleneck 1.3.2 py38heb32a55_1

brotli 1.0.9 he6710b0_2

bzip2 1.0.8 h7b6447c_0

ca-certificates 2021.10.26 h06a4308_2

certifi 2021.10.8 py38h06a4308_2

cudatoolkit 11.3.1 h2bc3f7f_2

cycler 0.11.0 pyhd3eb1b0_0

dbus 1.13.18 hb2f20db_0

expat 2.4.4 h295c915_0

ffmpeg 4.2.2 h20bf706_0

fontconfig 2.13.1 h6c09931_0

fonttools 4.25.0 pyhd3eb1b0_0

freetype 2.11.0 h70c0345_0

fvcore 0.1.5.post20220212 pypi_0 pypi

giflib 5.2.1 h7b6447c_0

glib 2.69.1 h4ff587b_1

gmp 6.2.1 h2531618_2

gnutls 3.6.15 he1e5248_0

gst-plugins-base 1.14.0 h8213a91_2

gstreamer 1.14.0 h28cd5cc_2

icu 58.2 he6710b0_3

intel-openmp 2021.4.0 h06a4308_3561

iopath 0.1.9 pypi_0 pypi

joblib 1.1.0 pyhd3eb1b0_0

jpeg 9d h7f8727e_0

kiwisolver 1.3.2 py38h295c915_0

lame 3.100 h7b6447c_0

lcms2 2.12 h3be6417_0

ld_impl_linux-64 2.35.1 h7274673_9

libffi 3.3 he6710b0_2

libgcc-ng 9.3.0 h5101ec6_17

libgfortran-ng 7.5.0 ha8ba4b0_17

libgfortran4 7.5.0 ha8ba4b0_17

libgomp 9.3.0 h5101ec6_17

libidn2 2.3.2 h7f8727e_0

libopus 1.3.1 h7b6447c_0

libpng 1.6.37 hbc83047_0

libstdcxx-ng 9.3.0 hd4cf53a_17

libtasn1 4.16.0 h27cfd23_0

libtiff 4.2.0 h85742a9_0

libunistring 0.9.10 h27cfd23_0

libuuid 1.0.3 h7f8727e_2

libuv 1.40.0 h7b6447c_0

libvpx 1.7.0 h439df22_0

libwebp 1.2.2 h55f646e_0

libwebp-base 1.2.2 h7f8727e_0

libxcb 1.14 h7b6447c_0

libxml2 2.9.12 h03d6c58_0

lz4-c 1.9.3 h295c915_1

matplotlib 3.5.1 py38h06a4308_0

matplotlib-base 3.5.1 py38ha18d171_0

mkl 2021.4.0 h06a4308_640

mkl-service 2.4.0 py38h7f8727e_0

mkl_fft 1.3.1 py38hd3c417c_0

mkl_random 1.2.2 py38h51133e4_0

munkres 1.1.4 py_0

ncurses 6.3 h7f8727e_2

nettle 3.7.3 hbbd107a_1

numexpr 2.8.1 py38h6abb31d_0

numpy 1.21.2 py38h20f2e39_0

numpy-base 1.21.2 py38h79a1101_0

olefile 0.46 pyhd3eb1b0_0

opencv-python 4.5.5.62 pypi_0 pypi

openh264 2.1.1 h4ff587b_0

openssl 1.1.1m h7f8727e_0

packaging 21.3 pyhd3eb1b0_0

pandas 1.4.1 py38h295c915_0

pcre 8.45 h295c915_0

pillow 8.4.0 py38h5aabda8_0

pip 21.2.4 py38h06a4308_0

portalocker 2.4.0 pypi_0 pypi

pyparsing 3.0.4 pyhd3eb1b0_0

pyqt 5.9.2 py38h05f1152_4

python 3.8.12 h12debd9_0

python-dateutil 2.8.2 pyhd3eb1b0_0

pytorch 1.10.2 py3.8_cuda11.3_cudnn8.2.0_0 pytorch

pytorch-mutex 1.0 cuda pytorch

pytz 2021.3 pyhd3eb1b0_0

pyyaml 6.0 pypi_0 pypi

qt 5.9.7 h5867ecd_1

readline 8.1.2 h7f8727e_1

scikit-learn 1.0.2 py38h51133e4_1

scipy 1.7.3 py38hc147768_0

setuptools 58.0.4 py38h06a4308_0

sip 4.19.13 py38h295c915_0

six 1.16.0 pyhd3eb1b0_1

sqlite 3.37.2 hc218d9a_0

tabulate 0.8.9 pypi_0 pypi

termcolor 1.1.0 pypi_0 pypi

threadpoolctl 2.2.0 pyh0d69192_0

tk 8.6.11 h1ccaba5_0

torchaudio 0.10.2 py38_cu113 pytorch

torchvision 0.11.3 py38_cu113 pytorch

tornado 6.1 py38h27cfd23_0

tqdm 4.63.0 pypi_0 pypi

typing_extensions 3.10.0.2 pyh06a4308_0

wheel 0.37.1 pyhd3eb1b0_0

x264 1!157.20191217 h7b6447c_0

xz 5.2.5 h7b6447c_0

yacs 0.1.8 pypi_0 pypi

zlib 1.2.11 h7f8727e_4

zstd 1.4.9 haebb681_0