I build three versions of the same network achitecture( i am not sure now). And trained them on the same dataset( i swear) using the same solver Adam with default hyper parameters.

The caffe and keras version works really well, but the pytorch version just doesn’t work.

the loss will go down, but rises at the end of every epoch.

This is strange. What’s wrong with my code?

time_step = 150

batch_size = 256

input_dim = 6

lstm_size1 = 100

lstm_size2 = 512

fc1_size = 512

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

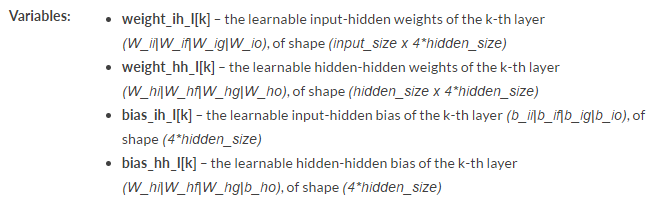

self.lstm1 = nn.LSTM(input_dim, lstm_size1, dropout=0.3)

self.lstm2 = nn.LSTM(lstm_size1, lstm_size2, dropout=0.3)

self.fc1 = nn.Linear(lstm_size2, fc1_size)

self.fc2 = nn.Linear(fc1_size, 3755)

def forward(self, x, num_strokes, batch_size):

x = torch.nn.utils.rnn.pack_padded_sequence(x,num_strokes)

hidden_cell_1 = (Variable(torch.zeros(1, batch_size, lstm_size1).cuda()),

Variable(torch.zeros(1, batch_size, lstm_size1).cuda()))

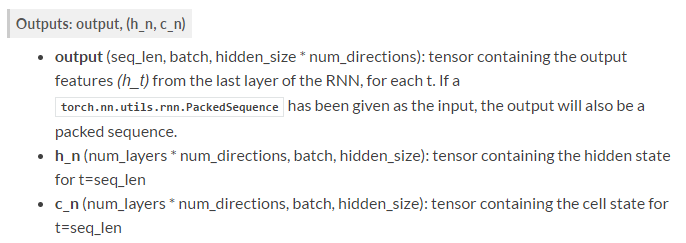

allout_1, _ = self.lstm1(x, hidden_cell_1)

hidden_cell_2 = (Variable(torch.zeros(1, batch_size, lstm_size2).cuda()),

Variable(torch.zeros(1, batch_size, lstm_size2).cuda()))

_, last_hidden_cell_out = self.lstm2(allout_1, hidden_cell_2)

last_hidden_cell_out = Variable(last_hidden_cell_out[0].data)

x = last_hidden_cell_out.view(batch_size, lstm_size2)

x = self.fc1(x)

x = F.relu(x)

x = F.dropout(x, p = 0.3)

x = self.fc2(x)

# out = F.log_softmax(x)

return x

The mainly training part:

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# one sample is of size [150, 6], so data is of size [256, 150, 6], i need to transpose data to get T*B*L

data = torch.transpose(data, 0, 1)

data, target = Variable(data), Variable(target)

optimizer.zero_grad()

out = model(data, num_strokes, batch_size)

loss = F.cross_entropy(out, target)

loss.backward()

optimizer.step()

The key point here is that my data is padded to 150, which means one sample maybe only have a length of 35 and of size [35, 6], i padded it to [150, 6], so only 35 lines at the begining are none zero. the rest 115 lines are all zeros. So i use:

x = torch.nn.utils.rnn.pack_padded_sequence(x,num_strokes)

where x is sorted by data lengths, and num_strokes indicates their lengths in descent order.

Am i using it in the right way?

Could any help me? I really don’t know why after debug for several days