Hello,

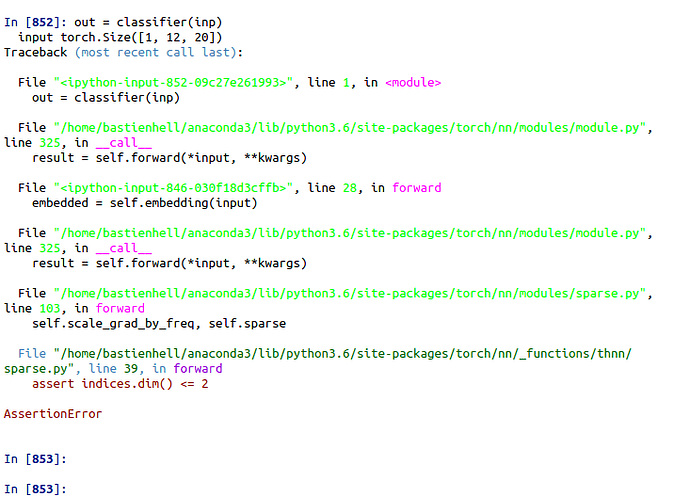

I can’t figure out this error. It seems to be linked to the input dimensions of my RNNClassifier.

Thanks.

```python
import torch
import torch.nn as nn
from torch.autograd import Variable


class RNNClassifier(nn.Module):
    def __init__(self, input_size, hidden_size, output_size, n_layers=1):
        super(RNNClassifier, self).__init__()
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        # nn.Embedding is a lookup table: it expects integer (Long) indices as input
        self.embedding = nn.Embedding(input_size, hidden_size)
        # nn.GRU defaults to input shaped (seq_len, batch, features)
        self.gru = nn.GRU(hidden_size, hidden_size, n_layers)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, input):
        batch_size = input.size(0)
        print(" input", input.size())
        embedded = self.embedding(input)
        print(" embedding", embedded.size())
        hidden = self._init_hidden(batch_size)
        output, hidden = self.gru(embedded, hidden)
        print(" gru hidden output", hidden.size())
        fc_output = self.fc(hidden)
        print(" fc output", fc_output.size())
        return fc_output

    def _init_hidden(self, batch_size):
        # Initial hidden state: (n_layers, batch, hidden_size)
        hidden = torch.zeros(self.n_layers, batch_size, self.hidden_size)
        return Variable(hidden)
```
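One likely source of trouble: `nn.Embedding` only accepts integer indices, while `nn.GRU` (without `batch_first=True`) reads its input as `(seq_len, batch, features)`. If each sample is really 12 dates of 20 continuous features (an assumption based on the `view(-1, 12, 20)` call), the embedding layer can be dropped and the features fed straight into the GRU. The sketch below assumes that layout; the class name `RNNFeatureClassifier` and the sizes (`hidden_size=32`, `output_size=5`) are hypothetical.

```python
import torch
import torch.nn as nn


class RNNFeatureClassifier(nn.Module):
    """Hypothetical variant: GRU over continuous features, no embedding layer."""

    def __init__(self, n_features, hidden_size, output_size, n_layers=1):
        super().__init__()
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        # batch_first=True so the GRU accepts input shaped (batch, seq_len, n_features)
        self.gru = nn.GRU(n_features, hidden_size, n_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        # x: (batch, seq_len, n_features), float32
        batch_size = x.size(0)
        # h0 stays (n_layers, batch, hidden_size) even with batch_first=True
        h0 = torch.zeros(self.n_layers, batch_size, self.hidden_size,
                         dtype=x.dtype, device=x.device)
        _, hidden = self.gru(x, h0)  # hidden: (n_layers, batch, hidden_size)
        # Classify from the last layer's final hidden state -> (batch, output_size)
        return self.fc(hidden[-1])


model = RNNFeatureClassifier(n_features=20, hidden_size=32, output_size=5)
x = torch.randn(4, 12, 20)  # dummy batch: 4 samples, 12 dates, 20 features
logits = model(x)
print(logits.shape)  # torch.Size([4, 5])
```

Taking `hidden[-1]` rather than the full `hidden` tensor gives the linear layer a `(batch, hidden_size)` input, so the output has one row of class scores per sample.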

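```python
# Data() is a custom loader (not shown)
X_train, Y_train = Data('s2-gap-12dates.csv', train=True)

X = X_train[0, :, :]
X = torch.from_numpy(X)
X = X.view(-1, 12, 20)
inp = Variable(X)

# classifier = RNNClassifier(...)  (construction not shown)
out = classifier(inp)
```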
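A second thing worth checking is the dtype. `torch.from_numpy` keeps the NumPy dtype, so a float64 array becomes a `torch.float64` tensor, whereas PyTorch layers are created with float32 weights by default. The sketch below uses random data as a stand-in for `X_train[0, :, :]` (assuming it is a 12 × 20 float array, which is not confirmed by the post) and a plain `nn.GRU` with a hypothetical `hidden_size=32`.

```python
import numpy as np
import torch
import torch.nn as nn

# Stand-in for X_train[0, :, :]; the real shape and dtype come from Data()
X_np = np.random.rand(12, 20)  # NumPy defaults to float64
X = torch.from_numpy(X_np)
print(X.dtype)  # torch.float64

# Cast to float32 to match the layer weights, then add a batch dimension
X = X.float().view(-1, 12, 20)  # -> (1, 12, 20): 1 sample, 12 dates, 20 features
print(X.dtype, X.shape)  # torch.float32 torch.Size([1, 12, 20])

# With batch_first=True the GRU reads this as (batch, seq_len, features)
gru = nn.GRU(input_size=20, hidden_size=32, batch_first=True)
output, h_n = gru(X)
print(output.shape, h_n.shape)  # torch.Size([1, 12, 32]) torch.Size([1, 1, 32])
```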