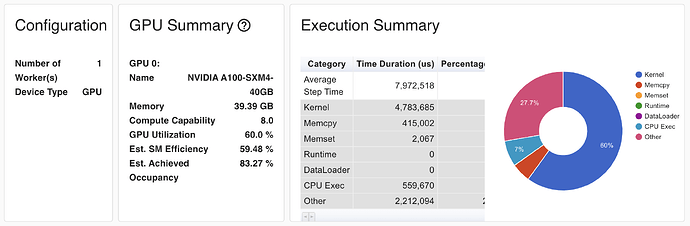

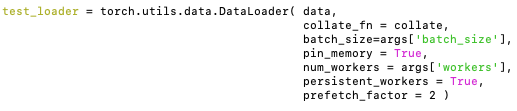

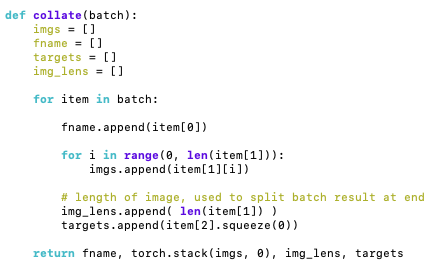

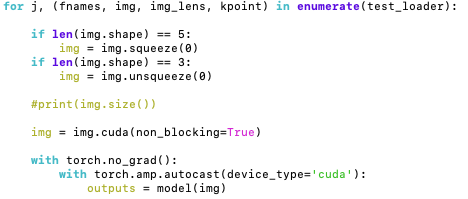

12 workers supply the GPU with data. During inference, the GPU shows:

- 100% GPU utilisation

- all GPU RAM is used

htop shows, more or less, that:

- none of the CPUs are bottlenecked.

However, between batch loads there are moments when GPU utilisation drops to zero.

I suspect these idle gaps are the main reason for the low overall GPU utilisation.
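One way to check whether the gaps really come from waiting on data is to time how long the loop blocks on the next batch versus how long it spends computing. This is a minimal sketch, assuming the inference loop iterates over a Python iterable of batches; `measure_wait`, `slow_loader` and the `time.sleep` stand-ins are hypothetical. On a real GPU, kernels launch asynchronously, so the compute timing only means something if the step synchronises (in PyTorch, for example with `torch.cuda.synchronize()`).

```python
import time
from typing import Callable, Iterable, Tuple, TypeVar

T = TypeVar("T")


def measure_wait(batches: Iterable[T], compute: Callable[[T], object]) -> Tuple[float, float]:
    """Return (seconds blocked waiting for batches, seconds spent in compute)."""
    wait = busy = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # blocks until the data pipeline delivers a batch
        except StopIteration:
            break
        t1 = time.perf_counter()
        compute(batch)  # the inference step; must synchronise on a real GPU
        t2 = time.perf_counter()
        wait += t1 - t0
        busy += t2 - t1
    return wait, busy


# Hypothetical stand-ins for the real loader and inference step.
def slow_loader(n: int, delay: float):
    for i in range(n):
        time.sleep(delay)  # pretend to read/decode a batch
        yield i


wait, busy = measure_wait(slow_loader(5, 0.02), lambda b: time.sleep(0.02))
print(f"waiting on data {wait / (wait + busy):.0%} of the time")
```

A large waiting share points at the data pipeline; a small one suggests the gaps come from elsewhere in the loop (for example host-side post-processing or transfers).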

Is there any way to reduce this idleness?
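If most of the time is spent waiting, the usual remedy is to overlap loading with compute: keep the next batch or two ready in a bounded queue so the model never waits for it. Below is a minimal sketch of that prefetching idea using a background thread; `prefetch`, `slow_loader` and `run` are hypothetical, and a thread only overlaps work that releases the GIL (file I/O, most decoders, `time.sleep` here). If the 12 workers are PyTorch `DataLoader` workers (the post does not say), `DataLoader` already prefetches batches per worker, and its `prefetch_factor`, `pin_memory` and `persistent_workers` arguments are the first knobs to look at.

```python
import queue
import threading
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel: the loader is exhausted


class _Failed:
    """Carries an exception from the loader thread to the consumer."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(batches: Iterable[T], depth: int = 2) -> Iterator[T]:
    """Yield items from `batches` while a background thread loads up to `depth` ahead."""
    q: queue.Queue = queue.Queue(maxsize=depth)

    def producer() -> None:
        try:
            for b in batches:
                q.put(b)  # blocks once `depth` batches are already waiting
            q.put(_DONE)
        except BaseException as exc:  # forward loader errors instead of hanging
            q.put(_Failed(exc))

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is _DONE:
            return
        if isinstance(item, _Failed):
            raise item.exc
        yield item


# Hypothetical stand-ins for the real loader and inference step.
def slow_loader(n: int, delay: float):
    for i in range(n):
        time.sleep(delay)  # pretend to read/decode a batch
        yield i


def run(batches, step_time: float):
    start = time.perf_counter()
    out = []
    for b in batches:
        time.sleep(step_time)  # pretend to run inference on `b`
        out.append(b)
    return out, time.perf_counter() - start


serial_out, serial_t = run(slow_loader(10, 0.05), 0.05)
overlap_out, overlap_t = run(prefetch(slow_loader(10, 0.05)), 0.05)
print(f"serial {serial_t:.2f}s, prefetched {overlap_t:.2f}s")
```

With equal load and step times, the prefetched loop should take roughly half as long as the serial one, because loading batch i+1 overlaps the step on batch i. If the consumer stops early, the daemon producer thread simply stays blocked until the process exits.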

I have many other avenues for improving performance, but this one seems blindingly obvious, and I am unsure how to address it.

Thanks!