Hi,

I have a small model with some custom convolutional layers. Before the actual convolution happens, I do some slicing and copying as follows:

outputs = inputs.new_zeros(inputs.size(0), self.in_channels, self.padded_height_in, self.padded_width_in)
if self.in_feature_depth == 6:
    outputs[:, :, self.padding_target_y, self.padding_target_x] = inputs[:, :, self.padding_src_y, self.padding_src_x]
    outputs[:, :, self.padding_ch_up_target_y, self.padding_ch_up_target_x] = inputs[:, self.ch_shift_up, self.padding_ch_up_src_y, self.padding_ch_up_src_x]
    outputs[:, :, self.padding_ch_down_target_y, self.padding_ch_down_target_x] = inputs[:, self.ch_shift_down, self.padding_ch_down_src_y, self.padding_ch_down_src_x]
else:
    outputs[:, :, self.padding_target_y, self.padding_target_x] = inputs[:, :, self.padding_src_y, self.padding_src_x]

outputs.narrow(3, 1, self.padded_width_in-2).index_copy_(2, self.row_target, inputs)
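
To make this easier to reproduce outside the model, here is a minimal, self-contained sketch of the same copy pattern. The shapes, the index tensors, and the names `make_wrap_indices` and `pad_with_copies` are assumptions made up for illustration (a one-pixel horizontal wrap-around); they are not the actual values from the model, and the channel-shift branch is left out.

```python
import torch


def make_wrap_indices(h, w, device):
    """Hypothetical index tensors: one-pixel horizontal wrap-around padding."""
    rows = torch.arange(h, device=device)
    tgt_y = torch.cat([rows + 1, rows + 1])              # padded rows 1..h
    tgt_x = torch.cat([torch.zeros_like(rows),           # left border column
                       torch.full_like(rows, w + 1)])    # right border column
    src_y = torch.cat([rows, rows])
    src_x = torch.cat([torch.full_like(rows, w - 1),     # left border <- last input column
                       torch.zeros_like(rows)])          # right border <- first input column
    row_target = rows + 1                                # interior rows of the padded tensor
    return tgt_y, tgt_x, src_y, src_x, row_target


def pad_with_copies(inputs):
    """Same structure as the snippet above, with illustrative indices."""
    n, c, h, w = inputs.shape
    tgt_y, tgt_x, src_y, src_x, row_target = make_wrap_indices(h, w, inputs.device)
    outputs = inputs.new_zeros(n, c, h + 2, w + 2)
    # Indexing with index tensors on the right-hand side returns a copy
    # (not a view), which is then written into `outputs`.
    outputs[:, :, tgt_y, tgt_x] = inputs[:, :, src_y, src_x]
    # Copy the unpadded input into the interior of the padded tensor.
    outputs.narrow(3, 1, w).index_copy_(2, row_target, inputs)
    return outputs


device = "cuda" if torch.cuda.is_available() else "cpu"
x = torch.randn(8, 6, 16, 16, device=device)
y = pad_with_copies(x)
print(y.shape)
```

Running this on a GPU under the same profiler should show whether the device-to-device copies also appear for this reduced pattern or only inside the full model.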

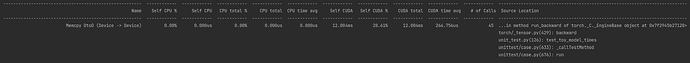

Where does the Memcpy DtoD come from, and how can I reduce the time it takes? I know 12 ms is not much, but this is a small model with just 3 conv layers for a unit test. In the real application I am using a big model and would like to make training faster.