Hi,

I have a problem with ReduceLROnPlateau. I initialized it using:

scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optim, verbose=True, patience=5)

and then, after each validation epoch, I call

scheduler.step(loss_meter_validation.mean)

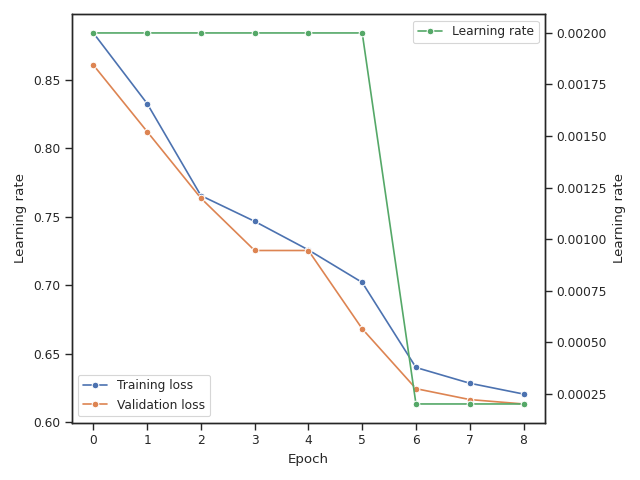

The problem is that the scheduler decreases the LR even though the loss has not plateaued (see the plot above).
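
To make the question concrete, here is a rough plain-Python sketch of how I understand the plateau rule with the default settings (mode='min', factor=0.1, relative threshold=1e-4) and my patience=5. This is my own simplification, not the library code: the function name `simulate_plateau`, the starting LR of 0.1, and the loss values are all made up for illustration, and cooldown and min_lr are ignored.

```python
def simulate_plateau(losses, lr=0.1, factor=0.1, patience=5, threshold=1e-4):
    """Return the LR after each epoch under a simplified plateau rule.

    Assumption: mimics mode='min' with a relative threshold; cooldown and
    min_lr are left out, so this is a sketch rather than the real scheduler.
    """
    best = float("inf")
    num_bad_epochs = 0
    history = []
    for loss in losses:
        # An epoch only counts as an improvement if it beats the best loss
        # seen so far by a relative margin, not merely the previous epoch.
        if loss < best * (1.0 - threshold):
            best = loss
            num_bad_epochs = 0
        else:
            num_bad_epochs += 1
        # The LR is reduced once the bad-epoch count exceeds patience.
        if num_bad_epochs > patience:
            lr *= factor
            num_bad_epochs = 0
        history.append(lr)
    return history


# Made-up validation losses: an early low value of 0.5, then a bump that
# trends downward again without ever beating 0.5 for six epochs in a row.
noisy_losses = [1.0, 0.5, 0.6, 0.55, 0.58, 0.52, 0.51, 0.505, 0.49]
for epoch, lr in enumerate(simulate_plateau(noisy_losses)):
    print(epoch, lr)
```

If this sketch is right, the comparison is always against the best loss so far, so a single unusually low validation value followed by a noisy but still decreasing curve could trigger a reduction. I am not sure whether that is what is happening in my case.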

Can somebody please help me out?

Thank you very much!