Hi,

I’m trying to train an autoencoder for reconstruction-based anomaly detection on the hazelnut category of the MVTec AD dataset.

I’m trying to replicate the results of this paper: https://arxiv.org/pdf/2008.12977.pdf

In particular, I’m targeting the simpler baseline that the study uses as a reference: a plain AE with no skip connections and no Stain model, just a 6-layer encoder and a 6-layer decoder.

The encoder layers have 16-32-64-128-256-512 filters. Each one is a strided convolution with kernel size 5, followed by BatchNorm and a LeakyReLU activation. The decoder uses the same filter counts, and each of its layers consists of upsampling, a convolution (kernel size 5), BatchNorm and LeakyReLU, with a sigmoid at the final layer.
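As a sanity check on the architecture above, here is a minimal sketch that tracks the feature-map shapes through the network. It assumes square 256×256 RGB inputs, stride 2 with padding 2 in every encoder convolution, and a ×2 upsampling followed by a stride-1 convolution (padding 2) in each decoder layer. These values are assumptions, not taken from the paper, and the helper names are hypothetical.

```python
# Hypothetical helper: track feature-map shapes through the 6-layer encoder
# and decoder. Assumes stride-2 convs (kernel 5, padding 2) in the encoder
# and a x2 upsampling + stride-1 conv (kernel 5, padding 2) per decoder layer.

ENCODER_FILTERS = [16, 32, 64, 128, 256, 512]


def conv_out(size: int, kernel: int = 5, stride: int = 2, padding: int = 2) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_shapes(input_size: int) -> list[tuple[int, int, int]]:
    """(channels, height, width) after each encoder layer, for a square input."""
    shapes = []
    size = input_size
    for filters in ENCODER_FILTERS:
        size = conv_out(size)
        shapes.append((filters, size, size))
    return shapes


def decoder_output_size(bottleneck_size: int, n_layers: int = 6) -> int:
    """Each decoder layer doubles the size; the stride-1 conv then keeps it."""
    size = bottleneck_size
    for _ in range(n_layers):
        size = conv_out(size * 2, stride=1)
    return size


if __name__ == "__main__":
    input_size, channels = 256, 3  # assumed input resolution and RGB images
    shapes = encoder_shapes(input_size)
    for i, shape in enumerate(shapes, start=1):
        print(f"encoder layer {i}: {shape}")
    c, h, w = shapes[-1]
    print("bottleneck values:", c * h * w, "input values:", channels * input_size**2)
    print("decoder output size:", decoder_output_size(h))
```

Under these assumptions the bottleneck is 512×4×4 = 8,192 values for a 196,608-value input, so the decoder has to rebuild all spatial detail from a 4×4 grid.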

As in the paper, I’m using MSE as the loss and Adam as the optimizer (batch size 16), and I’m augmenting the input data with random cropping, rotations and flips.
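For reference, a minimal NumPy sketch of this kind of augmentation and of the MSE loss, assuming HxWxC uint8 images. It uses random 90-degree rotations as a simplification (the paper may use arbitrary angles), and it rescales pixels to [0, 1] because the decoder ends with a sigmoid, which can only output values in that range. The function names and the 256-pixel crop size are hypothetical; PyTorch users would additionally transpose to CxHxW.

```python
import numpy as np


def augment(image: np.ndarray, crop_size: int, rng: np.random.Generator) -> np.ndarray:
    """Random crop, random 90-degree rotation and random flips of an HxWxC uint8 image.

    Returns float32 values in [0, 1], matching the range of a sigmoid output.
    """
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    out = image[top:top + crop_size, left:left + crop_size]
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))  # simplification: multiples of 90 degrees
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]  # vertical flip
    return out.astype(np.float32) / 255.0


def mse_loss(reconstruction: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error over every pixel and channel in the batch."""
    return float(np.mean((reconstruction - target) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Placeholder random image standing in for a hazelnut training image.
    image = rng.integers(0, 256, size=(300, 300, 3), dtype=np.uint8)
    batch = np.stack([augment(image, 256, rng) for _ in range(16)])  # batch of 16
    print(batch.shape, batch.min(), batch.max())
```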

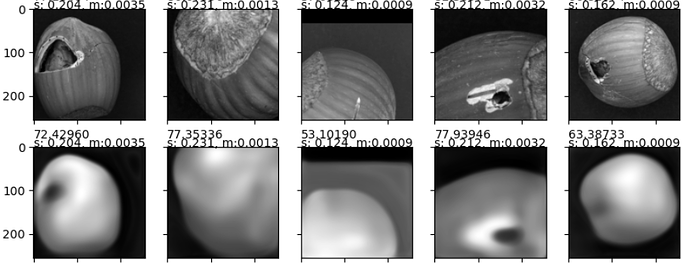

Although I feel like I have been doing what is described in the paper, my model is unable to learn any anything meaningful and only shows very rough and blurred white shadows for reconstruction:

My code and notebook are available here: Jupyter Notebook Viewer

I don’t understand what I’m doing wrong. I know some changes could improve things (e.g. using SSIM as the loss), but I’m more interested in reproducing the paper’s results (an AUC of 0.9 for this setup). Does anyone have an idea what could be wrong?
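To compare against that number, the sketch below computes an image-level ROC AUC from reconstruction errors. It assumes the anomaly score is the mean squared reconstruction error per image and that labels are 1 for defective and 0 for good test images; the paper’s exact scoring rule (image-level vs. pixel-level, MSE vs. another distance) may differ, and the function names are hypothetical. The AUC is computed with the Mann-Whitney rank statistic, which gives the same value as `sklearn.metrics.roc_auc_score`.

```python
import numpy as np


def anomaly_scores(inputs: np.ndarray, reconstructions: np.ndarray) -> np.ndarray:
    """Per-image anomaly score: mean squared reconstruction error over all pixels."""
    axes = tuple(range(1, inputs.ndim))
    return np.mean((inputs - reconstructions) ** 2, axis=axes)


def roc_auc(scores: np.ndarray, labels: np.ndarray) -> float:
    """ROC AUC via the Mann-Whitney U statistic (ties count as 0.5).

    labels: 1 for anomalous images, 0 for good images.
    """
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    greater = (pos[:, None] > neg[None, :]).sum()  # anomalous scored above good
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


if __name__ == "__main__":
    scores = np.array([0.01, 0.02, 0.05, 0.03])
    labels = np.array([0, 0, 1, 1])
    print(roc_auc(scores, labels))
```

An AUC near 0.5 means the reconstruction error barely separates defective from good images, which is what blurry, near-uniform reconstructions would tend to produce.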