Hello!

I am trying to reproduce the results of this study. Specifically, I want to fine-tune DenseNet201 using the architecture and hyperparameters described in the paper.

To achieve this, I have this PyTorch code:

import torchvision
from torch import nn

# Setup pretrained model with ImageNet's pretrained weights
weights = torchvision.models.DenseNet201_Weights.DEFAULT
densenet_model = torchvision.models.densenet201(weights=weights).to(device)

# Get the length of class_names (one output unit for each class)
output_shape = len(class_names)

# Modify the classifier

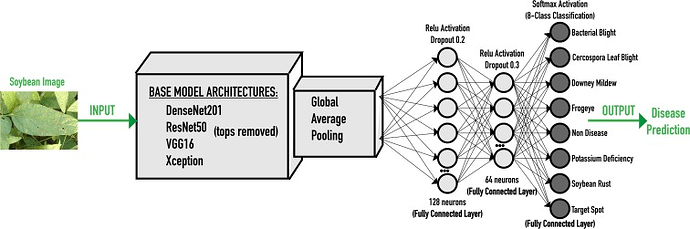

densenet_model.classifier = nn.Sequential(
    nn.Linear(in_features=1920, out_features=128, bias=True),
    nn.ReLU(inplace=True),
    nn.Dropout(p=0.2),
    nn.Linear(in_features=128, out_features=64, bias=True),
    nn.ReLU(inplace=True),
    nn.Dropout(p=0.3),
    nn.Linear(in_features=64, out_features=output_shape, bias=True)  # Number of output classes
)

# Redefine the forward pass
class CustomDenseNet201(nn.Module):
    def __init__(self, base_model):
        super(CustomDenseNet201, self).__init__()
        self.features = base_model.features
        self.pool = nn.AdaptiveAvgPool2d((1, 1))
        self.flatten = nn.Flatten()
        self.classifier = base_model.classifier

    def forward(self, x):
        x = self.features(x)
        x = self.pool(x)
        x = self.flatten(x)
        x = self.classifier(x)
        return x

# Instantiate the custom model
model = CustomDenseNet201(densenet_model)

# Move the entire model to the device
model = model.to(device)

However, the model is not learning anything (average validation accuracy: 0.15). To confirm that the result is reproducible, I trained the equivalent model in TensorFlow on a fraction of the dataset, and its accuracy (0.7 on average) shows that it is learning.

from tensorflow.keras import applications, layers, models

# Setup pretrained model with ImageNet's pretrained weights
base_model = applications.DenseNet201(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

model = models.Sequential([
    base_model,
    layers.GlobalAveragePooling2D(),
    layers.Dense(128, activation='relu'),
    layers.Dropout(0.2),
    layers.Dense(64, activation='relu'),
    layers.Dropout(0.3),
    layers.Dense(num_classes, activation='softmax')
])

I’m sure that I am missing something or doing something wrong.