Thank you. I added a ReLU to the Torch model, but it doesn't change the result. I think the torchinfo summary for DenseNet201 does not show some of the operations the model performs, such as global average pooling and possibly the final ReLU. Someone asked a question along these lines, and no concrete answer was given.

I cross-checked my data transformation pipeline, but I don't think it is responsible for the poor performance. At this point, I don't know what else to try.

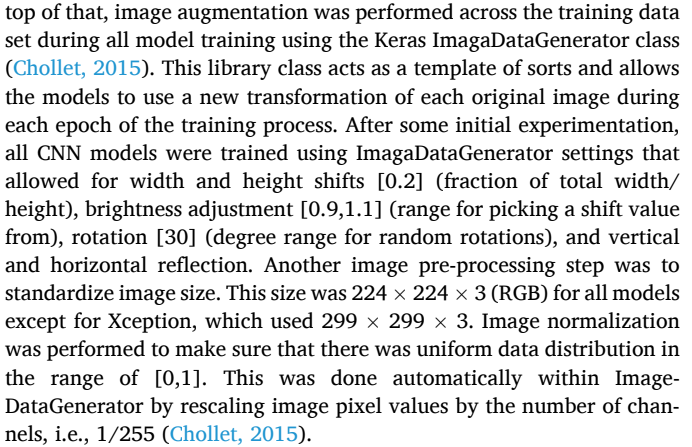

According to the authors, this is the transformation performed on the data:

And this is my replication:

```python
import numpy as np
from torchvision import transforms

# ImageNet channel statistics
mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])

data_transforms = {
    'train': transforms.Compose([
        transforms.RandomHorizontalFlip(),  # Horizontal reflection
        transforms.RandomVerticalFlip(),  # Vertical reflection
        transforms.RandomRotation(30),  # Random rotation in [-30, 30] degrees
        transforms.RandomResizedCrop(224),  # Random crop (default scale 0.08-1.0 of the area), resized to 224x224
        transforms.RandomAffine(degrees=0, translate=(0.2, 0.2)),  # Width and height shifts of up to 20%
        transforms.ColorJitter(brightness=(0.9, 1.1)),  # Brightness adjustment
        transforms.ToTensor(),  # Convert the PIL image to a float tensor in [0, 1]
        transforms.Normalize(mean, std),  # Standardize each channel: (x - mean) / std
    ]),
    'val': transforms.Compose([
        transforms.Resize(size=(224, 224)),
        transforms.ToTensor(),
        transforms.Normalize(mean, std),
    ]),
    'test': transforms.Compose([
        transforms.Resize(size=(224, 224)),
        transforms.ToTensor(),
        transforms.Normalize(mean, std),
    ]),
}
```