Hi,

I hope everyone’s doing great. Could you please help me with the following problem? I believe there’s no built-in function for this in PyTorch, so I am trying to implement it manually.

Context: I collect a batch of frames, push them to the GPU and keep them there. I then run a couple of networks (YOLOs) on them. For this to work, I need to resize the images on the GPU to the size YOLO expects while keeping their aspect ratio (the rest of the image is filled with grey padding). This was done in this ticket (Resize images (torch.Tensor) already loaded on GPU keeping their aspect ratio - #9 by evgeniititov).

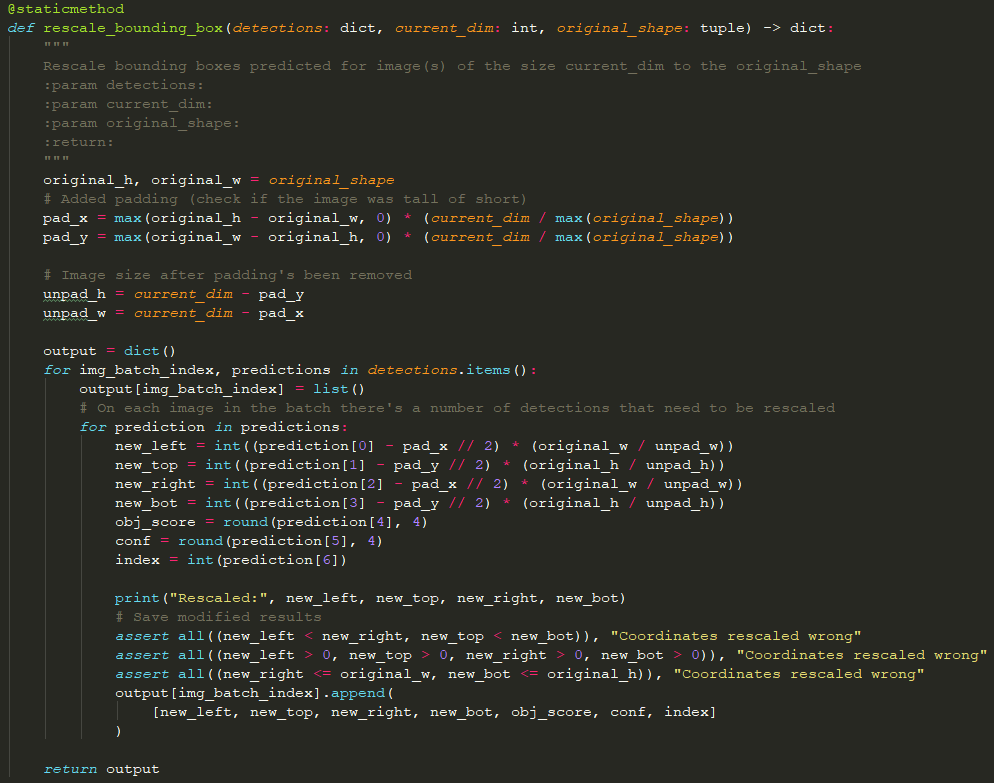

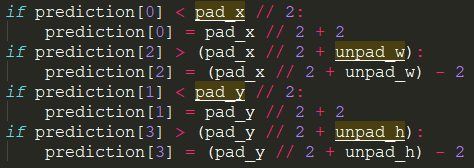
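
For reference, a letterbox resize of this kind can be sketched as below. This is a minimal sketch, not necessarily the implementation from the linked ticket, and it assumes an NCHW float batch with values in [0, 1], all frames of the same size, padding split evenly between the two sides, and a grey value of 114/255 (a common choice in YOLO pipelines; adjust it to whatever your pipeline uses). The function name `letterbox` is hypothetical. It returns the scale and the left/top padding because those are exactly what is needed to map boxes back afterwards.

```python
import torch
import torch.nn.functional as F


def letterbox(batch: torch.Tensor, target_h: int, target_w: int, pad_value: float = 114 / 255):
    """Resize an NCHW float batch to (target_h, target_w) while keeping aspect ratio.

    Hypothetical helper. Assumes all frames share one size and that the padding is
    centered (any odd pixel goes to the bottom/right). Works on whatever device the
    batch is already on, so GPU tensors stay on the GPU.
    Returns (padded_batch, scale, pad_left, pad_top).
    """
    _, _, h, w = batch.shape
    # One uniform scale factor so the image fits inside the target in both dimensions.
    scale = min(target_h / h, target_w / w)
    new_h, new_w = round(h * scale), round(w * scale)
    resized = F.interpolate(batch, size=(new_h, new_w), mode="bilinear", align_corners=False)

    # Split the leftover space between the two sides of each dimension.
    pad_h, pad_w = target_h - new_h, target_w - new_w
    top, left = pad_h // 2, pad_w // 2
    bottom, right = pad_h - top, pad_w - left

    # F.pad lists the last dimension first: (left, right, top, bottom).
    padded = F.pad(resized, (left, right, top, bottom), mode="constant", value=pad_value)
    return padded, scale, left, top
```

For example, a batch of 640×480 frames letterboxed to 416×416 gets a scale of 0.65, no horizontal padding and 52 grey rows at both the top and the bottom.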

What I need to do now: rescale the bounding box coordinates so that they are relative to the original size of the frames, not to the resized images fed to the network for detection. This is how I am doing it now:

Unfortunately, it fails the assert statements: my new_top value is usually negative, and new_right can extend past the right edge of the frame.
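
For reference, the inverse of a centered letterbox resize can be written as follows. This assumes (these are assumptions about the setup, not taken from the linked ticket) that the box coordinates $(x', y')$ are in network-input pixels, the original frame is $W \times H$, the network input is $W_n \times H_n$, and the padding is split evenly between the two sides. The padding is undone first, then the scaling, and finally the result is clamped, because a predicted box can extend into the grey padding, which would otherwise give a negative top or a right edge beyond $W$.

$$
s = \min\left(\frac{W_n}{W}, \frac{H_n}{H}\right), \qquad
p_x = \left\lfloor \frac{W_n - \operatorname{round}(sW)}{2} \right\rfloor, \qquad
p_y = \left\lfloor \frac{H_n - \operatorname{round}(sH)}{2} \right\rfloor
$$

$$
x = \operatorname{clamp}\!\left(\frac{x' - p_x}{s},\; 0,\; W\right), \qquad
y = \operatorname{clamp}\!\left(\frac{y' - p_y}{s},\; 0,\; H\right)
$$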

detections is a dictionary whose keys are the indices of the images in the batch (one key per image) and whose values are lists of lists; each nested list is one detection predicted by the network.
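
One way to apply the inverse letterbox mapping to a dictionary shaped like this is sketched below. It is a minimal sketch under assumptions not confirmed by the post: each detection is taken to be `[left, top, right, bottom, *rest]` in network-input pixels (if the network outputs normalized or center/width/height boxes, convert them first), all frames share one original size, and the padding was centered. The function name `unletterbox_detections` is hypothetical. Clamping keeps boxes that spill into the grey border inside the frame.

```python
def unletterbox_detections(detections, orig_h, orig_w, net_h, net_w):
    """Map boxes from letterboxed network-input pixels back to original-frame pixels.

    Hypothetical helper. Assumptions (adjust to your format):
      * each detection is [left, top, right, bottom, *rest] in network-input pixels;
      * all frames share one original size (orig_h, orig_w);
      * the letterbox padding was centered, any odd pixel on the bottom/right.
    """
    # Recompute the same scale and padding that the letterbox step used.
    scale = min(net_h / orig_h, net_w / orig_w)
    pad_x = (net_w - round(orig_w * scale)) // 2
    pad_y = (net_h - round(orig_h * scale)) // 2

    def to_orig(value, pad, limit):
        # Undo padding first, then undo scaling, then clip to the frame.
        return min(max((value - pad) / scale, 0.0), limit)

    rescaled = {}
    for img_idx, dets in detections.items():
        out = []
        for left, top, right, bottom, *rest in dets:
            out.append([
                to_orig(left, pad_x, orig_w),
                to_orig(top, pad_y, orig_h),
                to_orig(right, pad_x, orig_w),
                to_orig(bottom, pad_y, orig_h),
                *rest,  # confidence, class id, etc. are passed through unchanged
            ])
        rescaled[img_idx] = out
    return rescaled
```

With 640×480 frames and a 416×416 network input, a box spanning the whole non-padded area, `[0, 52, 416, 364]`, maps back to roughly `[0, 0, 640, 480]`.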

I would be grateful if anyone could help. I have spent a lot of time on this without success.

Thanks in advance.

Eugene