Hi Everyone,

I am new to PyTorch and I am working on a nuclei segmentation model.

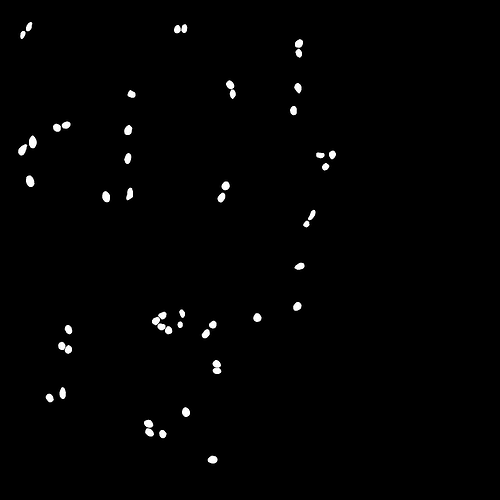

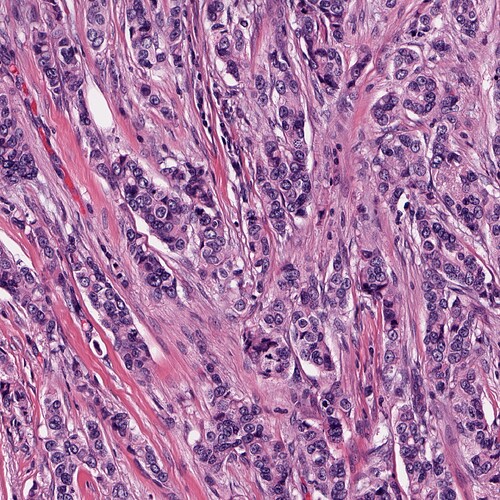

Above are the image and mask I am using to train my model, but my rescale function cuts off my mask and gives me a black image, so my model doesn't train properly.

Could someone check whether there's something wrong with my `Rescale` function?
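One way to narrow this down is to run the mask resize on its own, using a small synthetic mask. The sketch below assumes a `uint8` mask with nuclei at 255 and background at 0. The 256x256 array and the blob positions are hypothetical stand-ins for real data. It compares the settings used for `mask_rescaled` in the train branch (`anti_aliasing=True`, which Gaussian-smooths the mask before downsampling) with nearest-neighbour resizing (`order=0`, `anti_aliasing=False`).

```python
import numpy as np
from skimage import transform

# Hypothetical stand-in for a nuclei mask: 256x256 uint8, a few small
# 5x5 "nuclei" set to 255 on a 0 background (real masks will differ).
mask = np.zeros((256, 256), dtype=np.uint8)
for r, c in [(40, 40), (100, 180), (200, 60)]:
    mask[r:r + 5, c:c + 5] = 255

# Same target-size formula as the train branch of Rescale.
new_shape = (mask.shape[0] // 8 + 6, mask.shape[1] // 8 + 6)

# Same settings as the original mask resize: smoothing before downsampling,
# so small foreground blobs get spread out and their peak values drop.
smoothed = transform.resize(mask, new_shape, anti_aliasing=True,
                            preserve_range=True, mode='constant')

# Nearest-neighbour resize: no smoothing, output values are copied from the input.
nearest = transform.resize(mask, new_shape, order=0, anti_aliasing=False,
                           preserve_range=True)

print('output shape:', smoothed.shape)
print('original max:', mask.max())
print('smoothed max:', smoothed.max())
print('nearest unique values:', np.unique(nearest))
```

If the smoothed maximum ends up far below 255, any later step that thresholds or casts the mask can turn small nuclei into background, which would look like a black mask. Treat this as a likely contributor to check, not a confirmed diagnosis. Note also that even nearest-neighbour sampling can skip nuclei that are smaller than the downsampling step.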

```python
from skimage import transform


class Rescale(object):
    """Rescale the image in a sample to a given size.

    Args:
        output_size (tuple or int): Desired output size. If tuple, output is
            matched to output_size. If int, smaller of image edges is matched
            to output_size keeping aspect ratio the same.
    """

    def __init__(self, output_size, train=True):
        assert isinstance(output_size, (int, tuple))
        self.output_size = output_size
        self.train = train

    def __call__(self, sample):
        if self.train:
            image, mask, img_id, height, width = (
                sample['image'], sample['mask'], sample['img_id'],
                sample['height'], sample['width'])

            # An int gives a square (new_h, new_w) here; note that new_h/new_w
            # are not used by the resize calls in this branch.
            if isinstance(self.output_size, int):
                new_h = new_w = self.output_size
            else:
                new_h, new_w = self.output_size

            new_h, new_w = int(new_h), int(new_w)

            # Resize image and mask to (input size // 8 + 6) in each dimension.
            # preserve_range=True keeps the original value range instead of
            # rescaling to [0, 1].
            img = transform.resize(image, (image.shape[0] // 8 + 6, image.shape[1] // 8 + 6),
                                   anti_aliasing=True, preserve_range=True)
            mask_rescaled = transform.resize(mask, (mask.shape[0] // 8 + 6, mask.shape[1] // 8 + 6),
                                             anti_aliasing=True, preserve_range=True, mode='constant')

            return {'image': img, 'mask': mask_rescaled, 'img_id': img_id,
                    'height': height, 'width': width}

        else:
            image, img_id, height, width = (
                sample['image'], sample['img_id'], sample['height'], sample['width'])

            if isinstance(self.output_size, int):
                new_h = new_w = self.output_size
            else:
                new_h, new_w = self.output_size

            new_h, new_w = int(new_h), int(new_w)

            # Resize the image to (new_h, new_w).
            # preserve_range=True keeps the original value range instead of
            # rescaling to [0, 1].
            img = transform.resize(image, (new_h, new_w), preserve_range=True, mode='constant')

            return {'image': img, 'height': height, 'width': width, 'img_id': img_id}
```
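For comparison, here is a minimal sketch of a rescale transform that resizes image and mask to the same `output_size` and treats the mask as label data. It assumes the same sample dictionary keys as the code above and a mask stored as integer or boolean labels (for example 0/255). The `_target_size` helper and the synthetic sample are hypothetical, added only for illustration. The main design choice is `order=0` (nearest neighbour) with `anti_aliasing=False` for the mask, so the output contains only the original label values instead of blurred intermediate ones.

```python
import numpy as np
from skimage import transform


class Rescale(object):
    """Sketch: resize the image (and, in training, the mask) to output_size.

    An int output_size gives a square (output_size, output_size) result;
    a tuple gives (new_h, new_w).
    """

    def __init__(self, output_size, train=True):
        assert isinstance(output_size, (int, tuple))
        self.output_size = output_size
        self.train = train

    def _target_size(self):
        # Hypothetical helper: turn output_size into an (h, w) pair of ints.
        if isinstance(self.output_size, int):
            return self.output_size, self.output_size
        new_h, new_w = self.output_size
        return int(new_h), int(new_w)

    def __call__(self, sample):
        new_h, new_w = self._target_size()
        # Image: smooth before downsampling and keep the original value range.
        img = transform.resize(sample['image'], (new_h, new_w),
                               anti_aliasing=True, preserve_range=True)
        out = dict(sample, image=img)  # keeps img_id, height, width, ...
        if self.train:
            mask = sample['mask']
            # Mask: nearest neighbour, no smoothing, so labels stay unblurred.
            mask_rescaled = transform.resize(mask, (new_h, new_w), order=0,
                                             anti_aliasing=False, preserve_range=True)
            out['mask'] = mask_rescaled.astype(mask.dtype)
        return out


# Hypothetical usage on synthetic data (512x640 RGB image, one large nucleus).
rng = np.random.default_rng(0)
mask = np.zeros((512, 640), dtype=np.uint8)
mask[100:300, 200:400] = 255
sample = {'image': rng.random((512, 640, 3)) * 255, 'mask': mask,
          'img_id': 'example', 'height': 512, 'width': 640}
out = Rescale(128)(sample)
print(out['image'].shape, out['mask'].shape, np.unique(out['mask']))
```

Casting the resized mask back to its input dtype keeps it in the same format the rest of the pipeline expects. Even with nearest-neighbour resizing, a very small `output_size` can still drop tiny nuclei, so it may be worth choosing the target size with the typical nucleus size in mind.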