I have 6-channel images (512x512x6) that I would like to resize while preserving all 6 channels (say to 128x128x6). torchvision.transforms.Resize expects a PIL image as input, but I cannot (and do not want to) convert my images to PIL. Any idea how to do this within torchvision transforms (i.e. without resizing using numpy/scipy/cv2 or similar libs)?

Hi,

You can do this with the interpolate function from torch.nn.functional, which supports several interpolation modes.

Here is an example:

import PIL.Image as Image

from torchvision.transforms import ToTensor, ToPILImage

import torch.nn.functional as F

img = Image.open('data/Places365_val_00000001.jpg')

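img = ToTensor()(img).unsqueeze(0) # (C, H, W) -> (1, C, H, W); interpolate expects a batch dimension.

out = F.interpolate(img, size=128) # The resize operation on the tensor; H and W both become 128.

ToPILImage()(out.squeeze(0)).save('test.png') # Drop the batch dimension before converting back to PIL.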
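For the 6-channel case from the question, where PIL is not an option, a minimal sketch could look like the following. It assumes the image is already a float tensor in channels-first layout (6, 512, 512); the random tensor and the choice of bilinear mode are placeholders of mine, not something from the original post.

```python
import torch
import torch.nn.functional as F

# Stand-in for a real 6-channel image, channels first: (C, H, W).
img = torch.rand(6, 512, 512)

# interpolate expects (N, C, H, W) for 2D resizing, so add a batch dimension.
batched = img.unsqueeze(0)

# Resize both spatial dimensions; the channel dimension is left untouched.
out = F.interpolate(batched, size=(128, 128), mode="bilinear", align_corners=False)

# Drop the batch dimension again.
out = out.squeeze(0)
print(out.shape)  # torch.Size([6, 128, 128])
```

If the data is stored channels-last (512, 512, 6), it would need a permute(2, 0, 1) first.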

Bests

Nik

From the docs of torch.nn.functional.interpolate:

Currently temporal, spatial and volumetric sampling are supported, i.e. expected inputs are 3-D, 4-D or 5-D in shape.

raise NotImplementedError("Input Error: Only 3D, 4D and 5D input Tensors supported" " (got {}D) for the modes: nearest | linear | bilinear | bicubic | trilinear" " (got {})".format(input.dim(), mode))
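To make the "3-D, 4-D or 5-D" wording concrete, here is a small sketch with one tensor per case. The sizes are arbitrary; the point is that the first two dimensions are always read as batch and channels, and only the remaining ones are resized.

```python
import torch
import torch.nn.functional as F

# Shapes are (batch, channels, *spatial); only the spatial part is resized.
temporal = torch.rand(1, 2, 8)          # 3-D input: one spatial dimension
spatial = torch.rand(1, 2, 8, 8)        # 4-D input: two spatial dimensions
volumetric = torch.rand(1, 2, 8, 8, 8)  # 5-D input: three spatial dimensions

# The default mode is 'nearest', which is available for all three cases.
out_1d = F.interpolate(temporal, size=4)
out_2d = F.interpolate(spatial, size=(4, 4))
out_3d = F.interpolate(volumetric, size=(4, 4, 4))

print(out_1d.shape, out_2d.shape, out_3d.shape)
```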

I solved it by using the albumentations library (which uses at least numpy and cv2). Not exactly what I wanted, but super easy to use.

Ok, great. But the interpolate function in PyTorch is very easy to use and actually needs only one line of code.

F.interpolate(tensor, size)

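By the way, you should not get any error, because your tensor is already 3D, which interpolate supports. Keep in mind that a 3D tensor is read as (batch, channels, length), so an unbatched (C, H, W) image needs unsqueeze(0) before both spatial dimensions can be resized.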

From what I can see, you passed None or something similar to interpolate, because the error says your tensor has {}D dimensions!

Finally, I have read a little about the albumentations library and it does not seem to support GPU, so you can get better performance by running the resize on the GPU with PyTorch itself.
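As a rough sketch of the GPU point: the same call runs on whichever device the tensor lives on. The batch size and shapes below are made up for illustration, and the code falls back to CPU when no CUDA device is available.

```python
import torch
import torch.nn.functional as F

# Use the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical batch of four 6-channel images, created directly on the device.
batch = torch.rand(4, 6, 512, 512, device=device)

# The resize runs on the same device as the input tensor.
resized = F.interpolate(batch, size=(128, 128), mode="bilinear", align_corners=False)
print(resized.shape, resized.device)
```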

Good luck

Sorry, I completely misunderstood the input.dim(), I thought it was referring to the number of channels, my bad! I did not produce the error above, I just had a look at the source. Thank you very much then. I didn’t know albumentations was not supporting GPU as well. Cheers mate

Hi, I have 4D data with shape torch.Size([100, 1, 2, 2]). How can I resize it to torch.Size([100, 1, 32, 32])?

Hi,

F.interpolate works for 3D, 4D, and 5D tensors.

x = torch.randn(100, 1, 2, 2)

F.interpolate(x, (32, 32))
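A slightly fuller version of the same call, with imports and two of the available modes, might look like this. Which mode is appropriate depends on the data; the comparison here is only a sketch.

```python
import torch
import torch.nn.functional as F

x = torch.randn(100, 1, 2, 2)

# 'nearest' (the default) copies existing values, giving blocky output.
nearest = F.interpolate(x, size=(32, 32), mode="nearest")

# 'bilinear' blends neighbouring values; align_corners is set explicitly
# to avoid the warning about its default.
bilinear = F.interpolate(x, size=(32, 32), mode="bilinear", align_corners=False)

print(nearest.shape, bilinear.shape)
```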

Hi,

I think I’m not getting what the dim means…

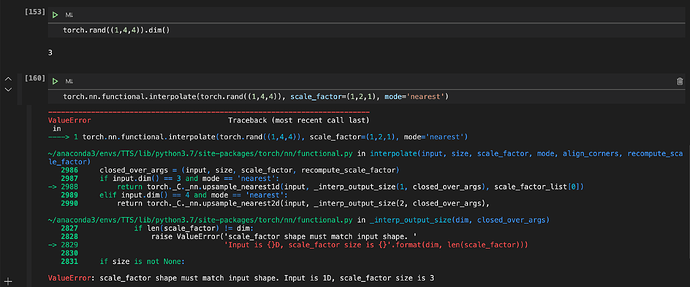

I don’t know why I’m getting this error because clearly my input has the same dim as the scale_factor tuple.

please help

Hi,

The issue is that tensor.dim() does not mean the same thing as the dimension count that interpolate reports.

interpolate always expects a batched tensor of shape (batch, channels, *spatial), and the length of scale_factor must match the number of spatial dimensions.

Your input [1, 4, 4] is read as a batch of 1 sample with 4 channels and a single spatial dimension of length 4, but your scale_factor has 3 entries. That is why the error says your input is 1D.

If you want to define a 4x4 grayscale image, you need to define your input in this way:

x = torch.rand((1, 1, 4, 4)) # (batch_size, channel, height, width)

res = F.interpolate(x, scale_factor=(2, 2), mode='nearest')

res.shape # torch.Size([1, 1, 8, 8])

And if you want to work on a 3D (volumetric) image, which is what your three-entry scale_factor implies:

x = torch.rand((1, 1, 1, 4, 4)) # (batch_size, channel, depth, height, width)

res = F.interpolate(x, scale_factor=(1, 2, 2), mode='nearest')

res.shape # torch.Size([1, 1, 1, 8, 8])

When in doubt, it helps to look at the source code:

def _interp_output_size(closed_over_args):  # noqa: F811

    input, size, scale_factor, recompute_scale_factor = closed_over_args

    ###############

    dim = input.dim() - 2  ############# removes batch size and channel size

    ###############

    if size is None and scale_factor is None:

        raise ValueError('either size or scale_factor should be defined')

    if size is not None and scale_factor is not None:

        raise ValueError('only one of size or scale_factor should be defined')

    if scale_factor is not None:

        if isinstance(scale_factor, (list, tuple)):

            if len(scale_factor) != dim:

                raise ValueError('scale_factor shape must match input shape. '

                                 'Input is {}D, scale_factor size is {}'.format(dim, len(scale_factor)))
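The length check on scale_factor can be reproduced in a few lines. This is only a sketch: spatial_dims is a hypothetical helper I am introducing to mirror the input.dim() - 2 computation, and the exact error text differs between PyTorch versions.

```python
import torch
import torch.nn.functional as F


def spatial_dims(t):
    """Hypothetical helper: how many dimensions interpolate will resize."""
    return t.dim() - 2  # the first two dims are batch and channels


x3 = torch.rand(1, 4, 4)     # read as (N=1, C=4, L=4): one spatial dimension
x4 = torch.rand(1, 1, 4, 4)  # read as (N=1, C=1, H=4, W=4): two spatial dimensions

print(spatial_dims(x3), spatial_dims(x4))  # 1 2

# Two scale factors for one spatial dimension: rejected with a ValueError.
try:
    F.interpolate(x3, scale_factor=(2, 2))
    mismatch_raised = False
except ValueError as err:
    mismatch_raised = True
    print("rejected:", err)

# Two scale factors for two spatial dimensions: accepted.
ok = F.interpolate(x4, scale_factor=(2, 2), mode="nearest")
print(ok.shape)  # torch.Size([1, 1, 8, 8])
```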

Bests

It seems the documentation has changed, so the link to interpolate is broken.

Use the following link instead:

https://pytorch.org/docs/stable/nn.functional.html

Thank you so much for your quick answer ! It works !

I just spent 15 minutes coding a solution with scipy.interpolate.interp2d and it’s painfully slow compared to this.

Thanks again

Hi, I am trying to use this method, however I am getting the following error:

RuntimeError: It is expected output_size equals to 1, but got size 2

I am trying to standardize my torch images so that all are [5,189,197,233]

I ran it as: image = F.interpolate(image, (197,233))

What is the input image shape to interpolate in this situation?

Hmm, I cannot seem to reproduce this issue with:

>>> import torch

>>> import torch.nn.functional as F

>>> a= torch.randn([5,189,196,233])

>>> b = F.interpolate(a, (197, 233))

>>> b.shape

torch.Size([5, 189, 197, 233])

Ahh, I believe my input is without the batch size. So instead of [5,189,197,233], it's actually just [189,197,233]. But the issue still persists.

As per the docs of interpolate, inputs are expected to be batched, so torch interprets your input as [batch, channel, length]. Just add a batch dimension with tensor.unsqueeze(0).
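A small sketch of that fix, using a much smaller placeholder tensor than the one in the question: the unbatched call is rejected (the exception type depends on the PyTorch version, so both are caught here), while adding and then removing a batch dimension works.

```python
import torch
import torch.nn.functional as F

# Unbatched (C, H, W) tensor; the sizes are placeholders.
image = torch.randn(3, 50, 60)

# Without a batch dimension this is read as (N, C, L), so a 2-element
# target size does not fit. Older versions raise RuntimeError, newer
# ones ValueError.
try:
    F.interpolate(image, (197, 233))
    failed = False
except (RuntimeError, ValueError) as err:
    failed = True
    print("unbatched call failed:", err)

# Add a batch dimension, resize H and W, then remove it again.
resized = F.interpolate(image.unsqueeze(0), (197, 233)).squeeze(0)
print(resized.shape)  # torch.Size([3, 197, 233])
```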

Hi,

I am getting “torch.Size([1, 3, 250, 100])”. How can I change the number of channels from 3 to 6? Any ideas?