Hello,

I am trying to build a U-Net model for fault prediction using seismic data. My training dataset contains two sets:

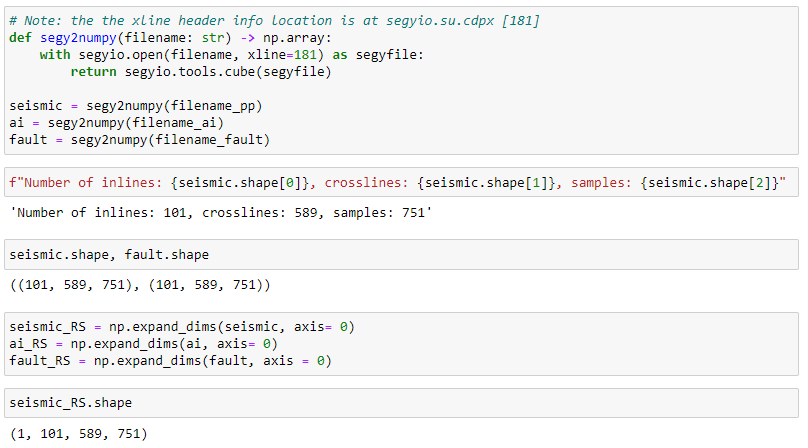

A seismic volume and a fault mask. Both are 3D volumes with the following shape: (101, 589, 751).

Here, 101 is the number of inlines, 589 is the number of xlines, and 751 is the number of vertical samples.

So the idea is to treat the volume as a stack of 2D arrays, along either the inline or the xline direction.

There is no RGB, so we treat each slice as a single channel.

For example, along the inline direction each slice has shape 589 x 751, and there are 101 such inlines stacked. The xline direction can be treated in a similar manner (589 slices of shape 101 x 751).
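A minimal sketch of this slicing in plain PyTorch, assuming the volume is already a tensor indexed as (inline, xline, sample). The random `seismic` tensor is only a stand-in for the real data, and `inline_slices` / `xline_slices` are hypothetical helper names. Each stack gets an explicit channel axis of size 1, which is what `nn.Conv2d(in_channels=1, ...)` expects.

```python
import torch

# Stand-in for the real seismic volume, shaped (inline, xline, sample)
seismic = torch.randn(101, 589, 751)

def inline_slices(vol: torch.Tensor) -> torch.Tensor:
    """Stack of inline sections: (n_inline, 1, n_xline, n_sample)."""
    # vol[i] is one inline section of shape (589, 751); unsqueeze adds the channel axis
    return vol.unsqueeze(1)

def xline_slices(vol: torch.Tensor) -> torch.Tensor:
    """Stack of xline sections: (n_xline, 1, n_inline, n_sample)."""
    # Move the xline axis to the front so vol[:, j, :] becomes element j of the stack
    return vol.permute(1, 0, 2).unsqueeze(1)

x_in = inline_slices(seismic)
x_xl = xline_slices(seismic)
print(x_in.shape)  # torch.Size([101, 1, 589, 751])
print(x_xl.shape)  # torch.Size([589, 1, 101, 751])
```

The same helpers apply unchanged to the fault mask, so seismic and mask slices stay index-aligned.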

Now, the main issue I am having is resizing these slices to dimensions of 256, which I believe U-Net requires.
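A sketch of two common options, assuming "256" means a 256 x 256 input. Option 1 resamples each slice with `torch.nn.functional.interpolate`: bilinear for the continuous seismic amplitudes, nearest for the mask so its values stay 0/1. Option 2 avoids resampling altogether: a typical U-Net with padded convolutions and four 2x2 poolings only needs height and width divisible by 16, so zero-padding 589 x 751 up to 592 x 752 is enough. The helper names `resize_pair` and `pad_to_multiple` are hypothetical, and `multiple=16` assumes a depth-4 network.

```python
import torch
import torch.nn.functional as F

def resize_pair(img, mask, size=(256, 256)):
    """Resample a (N, 1, H, W) image batch and its mask batch to `size`."""
    # Bilinear interpolation for the continuous seismic amplitudes
    img_r = F.interpolate(img, size=size, mode="bilinear", align_corners=False)
    # Nearest neighbour for the mask so it keeps only its original label values
    mask_r = F.interpolate(mask, size=size, mode="nearest")
    return img_r, mask_r

def pad_to_multiple(t, multiple=16):
    """Zero-pad the last two dims of `t` up to the next multiple of `multiple`."""
    h, w = t.shape[-2:]
    pad_h = (-h) % multiple
    pad_w = (-w) % multiple
    # F.pad takes (left, right, top, bottom) for the last two dimensions
    return F.pad(t, (0, pad_w, 0, pad_h))

# Stand-ins for a small batch of inline slices and their masks
img = torch.randn(4, 1, 589, 751)
mask = (torch.rand(4, 1, 589, 751) > 0.95).float()

img_r, mask_r = resize_pair(img, mask)
print(img_r.shape)                 # torch.Size([4, 1, 256, 256])
print(pad_to_multiple(img).shape)  # torch.Size([4, 1, 592, 752])
```

Resizing squashes the 589 x 751 aspect ratio and resamples thin fault lines, which can distort or break them; padding (or cropping patches) keeps the original sample spacing.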

Can anyone help me with how I can achieve this using PyTorch transforms?
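The key point for segmentation is that any random transform must be applied identically to the seismic slice and its mask. A minimal sketch of this in plain PyTorch, assuming inline slicing and 256 x 256 random crops; `InlinePatchDataset` is a hypothetical name, not an existing class. Recent torchvision versions also offer `transforms.v2`, which can apply one random transform jointly to an image and a `tv_tensors.Mask`.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class InlinePatchDataset(Dataset):
    """Hypothetical dataset: one random (patch x patch) crop per inline section.

    seismic, fault: tensors shaped (n_inline, n_xline, n_sample).
    """

    def __init__(self, seismic, fault, patch=256):
        assert seismic.shape == fault.shape
        self.seismic = seismic
        self.fault = fault
        self.patch = patch

    def __len__(self):
        return self.seismic.shape[0]  # one sample per inline

    def __getitem__(self, idx):
        img = self.seismic[idx]  # (n_xline, n_sample)
        mask = self.fault[idx]
        h, w = img.shape
        # Draw ONE crop position and reuse it for image and mask,
        # so the labels stay aligned with the seismic amplitudes
        top = torch.randint(0, h - self.patch + 1, (1,)).item()
        left = torch.randint(0, w - self.patch + 1, (1,)).item()
        img = img[top:top + self.patch, left:left + self.patch]
        mask = mask[top:top + self.patch, left:left + self.patch]
        # Add the single channel axis expected by Conv2d: (1, patch, patch)
        return img.unsqueeze(0).float(), mask.unsqueeze(0).float()

# Small random stand-ins; replace with the real (101, 589, 751) volumes
seismic = torch.randn(8, 589, 751)
fault = (torch.rand(8, 589, 751) > 0.95).float()

loader = DataLoader(InlinePatchDataset(seismic, fault), batch_size=4, shuffle=True)
x, y = next(iter(loader))
print(x.shape, y.shape)  # torch.Size([4, 1, 256, 256]) torch.Size([4, 1, 256, 256])
```

This crops only along inlines; xline sections are only 101 samples high, so they would need padding before a 256 x 256 crop fits.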

Thanks and much appreciated.

Below is an example of how the data is loaded: