ResNet

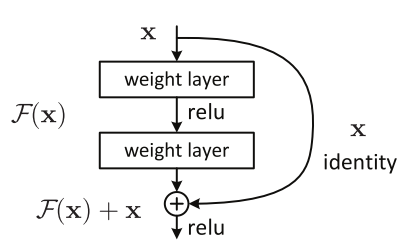

    class ResNetBlock(nn.Module):
        """docstring for ResNetBlock"nn.Module"""

        def __init__(self, input_channels):
            super(ResNetBlock,self).__init__()
            self.branch_1_3x3 = nn.Conv2d(input_channels,input_channels,bias=False,kernel_size=3,padding=1)
            self.branch_1_5x5 = nn.Conv2d(input_channels,input_channels,bias=False,kernel_size=5,padding=2)
            self.bn = nn.BatchNorm2d(input_channels, eps=0.001)

        def forward(self, x):
            x_previous = copy.deepcopy(x)
            x = self.branch_1_3x3(x)
            x = self.bn(x)
            x = self.branch_1_5x5(x)
            x = self.bn(x)
            output = torch.add(x,x_previous)
            return F.relu(output, inplace=True)

I’m getting this error:

    Traceback (most recent call last):
      File "irbc1.py", line 342, in <module>
        model_ft = train_model(net,criterion,optimizer_ft,exp_lr_scheduler,num_epochs=10)
      File "irbc1.py", line 295, in train_model
        outputs = model(inputs)
      File "/home/ffffff/.virtualenvs/LearnPytorch/lib/python3.6/site-packages/torch/nn/modules/module.py", line 477, in __call__
        result = self.forward(*input, **kwargs)
      File "irbc1.py", line 123, in forward
        x = self.ResNet_1(x)
      File "/home/ffffff/.virtualenvs/LearnPytorch/lib/python3.6/site-packages/torch/nn/modules/module.py", line 477, in __call__
        result = self.forward(*input, **kwargs)
      File "irbc1.py", line 143, in forward
        x_previous = copy.deepcopy(x)
      File "/home/ffffff/.virtualenvs/LearnPytorch/lib/python3.6/copy.py", line 161, in deepcopy
        y = copier(memo)
      File "/home/ffffff/.virtualenvs/LearnPytorch/lib/python3.6/site-packages/torch/tensor.py", line 15, in __deepcopy__
        raise RuntimeError("Only Tensors created explicitly by the user "
    RuntimeError: Only Tensors created explicitly by the user (graph leaves) support the deepcopy protocol at the moment

What is going wrong here?
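
A likely explanation, going by the error message: `copy.deepcopy` only supports leaf tensors, and the `x` that reaches `forward` here is the output of an earlier layer, so it is part of the autograd graph. Below is a minimal sketch of a block that keeps the skip connection without `deepcopy`. The name `ResNetBlockFixed`, the split into `bn1`/`bn2`, and the toy input shapes are my own assumptions for illustration and are not from the original `irbc1.py`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResNetBlockFixed(nn.Module):
    """Residual block sketch: 3x3 conv -> BN -> 5x5 conv -> BN, plus a skip connection."""

    def __init__(self, input_channels):
        super().__init__()
        self.branch_1_3x3 = nn.Conv2d(input_channels, input_channels,
                                      kernel_size=3, padding=1, bias=False)
        self.branch_1_5x5 = nn.Conv2d(input_channels, input_channels,
                                      kernel_size=5, padding=2, bias=False)
        # Assumption: one BatchNorm per conv, so each keeps its own statistics.
        self.bn1 = nn.BatchNorm2d(input_channels, eps=0.001)
        self.bn2 = nn.BatchNorm2d(input_channels, eps=0.001)

    def forward(self, x):
        # Keep a plain reference to the input. The layers below do not
        # modify x in place, so no copy is needed for the skip connection.
        identity = x
        out = self.bn1(self.branch_1_3x3(x))
        out = self.bn2(self.branch_1_5x5(out))
        out = out + identity
        return F.relu(out, inplace=True)


# Toy check with a non-leaf input, the case where deepcopy raised the error.
block = ResNetBlockFixed(8)
inputs = torch.randn(2, 8, 16, 16, requires_grad=True)
hidden = inputs * 2.0          # result of an operation, so not a graph leaf
outputs = block(hidden)
outputs.sum().backward()       # gradients flow back through the skip path
```

If a real copy is ever needed inside `forward`, `x.clone()` works on non-leaf tensors and stays differentiable, whereas `copy.deepcopy(x)` does not.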